Organizations implementing Prompt Engineering 2.0 reportedly see 40-67% productivity gains and 30-50% lower error rates compared with basic text prompting. The gap exists because early AI models depended heavily on how well a user could craft a single instruction: the output’s quality was determined largely by word choice and order.

But as AI systems’ capabilities have grown, so has the complexity of communicating with them. This is where Prompt Engineering 2.0 comes in. By emphasizing context awareness, this generation of prompting allows AI systems to adjust dynamically to the user’s purpose and history.

In this guide, we’ll explore what Prompt Engineering 2.0 is, how context-aware AI systems function, and the techniques developers can use to build these advanced systems.

What is Prompt Engineering 2.0?

Prompt Engineering 2.0 is the next step in human-AI communication. To appreciate it, it helps to understand where it came from.

In the early days of large language models, users found that the way a query or instruction was phrased had a significant impact on the quality of the response. Depending on the phrasing, the same model could produce wildly different results. This insight led to the development of prompt engineering, a technical and creative field concerned with crafting inputs that steer AI models toward coherent, useful responses.

However, as AI became more capable, these prompts started to show their limitations. Traditional prompt engineering depended on static instructions. Each prompt acted independently, with no awareness of prior interactions or situational context. The AI responded only to what it could see in that single input, making it reactive, not adaptive.

That’s where Prompt Engineering 2.0 comes in. It’s not just about crafting better sentences; it’s about designing intelligent prompting systems. It integrates dynamic context, memory retention, and multi-step orchestration to create AI that takes into account who it’s talking to and what the user intends.

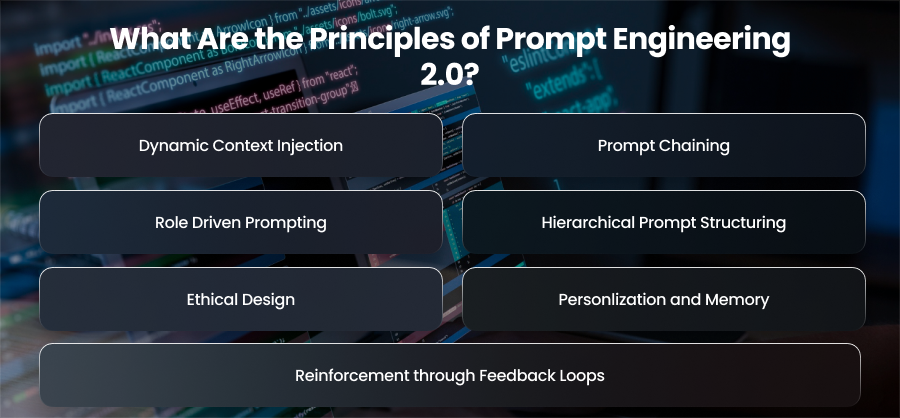

What Are the Principles of Prompt Engineering 2.0?

Prompt Engineering 2.0 represents a fundamental transformation in how we design interactions between humans and AI. Instead of viewing a prompt as a single input that triggers a single output, this new framework treats prompting as an ongoing dialogue system.

Dynamic Context Injection

In the early days of prompt engineering, every user interaction started from zero. AI models treated each prompt as a fresh input without recalling previous conversations. But with Prompt Engineering 2.0, context is no longer an afterthought.

Dynamic context injection means that prompts now evolve in real time based on relevant data. This data can come from previous user inputs or from external databases. The system injects this context into the prompt before the model generates an answer.

For example, a customer support AI doesn’t just respond to “I need help with my order.” It retrieves contextual data and uses it to craft a personalized response like, “I see you ordered the Bluetooth headphones last week. Are you experiencing issues with that item?”

Dynamic context injection helps keep AI outputs user-specific and grounded in real data. It also turns the AI into a proactive assistant rather than a reactive chatbot.
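The idea can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the in-memory `ORDERS` lookup and the `build_prompt` helper are hypothetical names that stand in for a real order database and prompt builder.

```python
from datetime import date

# Hypothetical order records keyed by user ID; a real system would query a database.
ORDERS = {
    "user-42": {"item": "Bluetooth headphones", "ordered_on": date(2024, 5, 6)},
}


def build_prompt(user_id: str, message: str) -> str:
    """Prepend whatever context is known about the user to the raw message."""
    order = ORDERS.get(user_id)
    if order is None:
        context = "No recent orders found for this user."
    else:
        context = (
            f"Most recent order: {order['item']}, "
            f"placed on {order['ordered_on'].isoformat()}."
        )
    return (
        "You are a customer support assistant.\n"
        f"Known context: {context}\n"
        f"Customer message: {message}"
    )


prompt = build_prompt("user-42", "I need help with my order.")
print(prompt)
```

The model never sees the bare message alone; it sees the message plus the order details, which is what makes a personalized reply possible.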

Prompt Chaining

Many tasks are too complex to be solved by a single prompt. This is where prompt chaining helps.

With prompt chaining, a large task is divided into smaller, more manageable steps, and the output of one step feeds the next. The approach resembles how people work through complex problems.

For example, an AI research assistant may write a business report like this:

- Obtain and compile the pertinent market data.

- Examine new trends and growth patterns.

- Based on those observations, write a succinct executive summary.

Each step is a distinct prompt written for a particular purpose, and the steps are coordinated to reach a coherent conclusion.

This method improves both transparency and accuracy, because developers can examine and optimize each step separately.
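The three-step report workflow above could be wired together roughly as follows. This is a sketch under stated assumptions: `fake_model` is a hypothetical stand-in for a real model call, and `run_chain` is an illustrative name, not part of any library.

```python
from typing import Callable


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call; it simply echoes a tagged response."""
    return f"[response to: {prompt[:40]}]"


def run_chain(topic: str, model: Callable[[str], str]) -> dict[str, str]:
    """Run three prompts in sequence, feeding each output into the next step."""
    steps: dict[str, str] = {}
    steps["data"] = model(f"Compile the pertinent market data on {topic}.")
    steps["trends"] = model(
        f"Using this data, identify new trends and growth patterns:\n{steps['data']}"
    )
    steps["summary"] = model(
        "Based on these observations, write a succinct executive summary:\n"
        f"{steps['trends']}"
    )
    return steps


results = run_chain("wireless audio", fake_model)
for name, output in results.items():
    print(name, "->", output)
```

Because every intermediate output is kept in the returned dictionary, each step can be inspected or re-run on its own.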

Role Driven Prompting

A single AI model can behave differently depending on how it perceives its role. By assigning clear roles or identities through prompting, we can steer the model’s behavior and reasoning style.

Role-driven prompting helps define:

- Perspective: How the AI interprets and frames the problem.

- Tone: The level of formality or simplicity in the language.

- Depth: The amount of detail the AI provides.

In Prompt Engineering 2.0, roles can even change over the course of a conversation. An AI may start out as a data collector, become a strategist, and end up writing the report, all within a single workflow. This flexibility mirrors how human specialists shift their viewpoint depending on the task.
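One common way to express a role is a system message placed ahead of the user’s request in a chat-style message list. The sketch below assumes that convention; the `ROLES` table and `make_messages` helper are hypothetical and only illustrate how a role can switch between stages.

```python
# Hypothetical role definitions: each sets perspective, tone, and depth.
ROLES = {
    "data_collector": "You gather facts. Be neutral and list details exhaustively.",
    "strategist": "You weigh options. Be decisive and explain trade-offs briefly.",
    "report_writer": "You write for executives. Be formal and concise.",
}


def make_messages(role: str, task: str) -> list[dict[str, str]]:
    """Build a chat-style message list whose system message assigns the role."""
    if role not in ROLES:
        raise ValueError(f"unknown role: {role}")
    return [
        {"role": "system", "content": ROLES[role]},
        {"role": "user", "content": task},
    ]


# The role changes from stage to stage within one workflow.
workflow = [
    ("data_collector", "Collect sales figures for Q1."),
    ("strategist", "Recommend where to invest next quarter."),
    ("report_writer", "Write up the recommendation."),
]
conversation = [make_messages(role, task) for role, task in workflow]
print(conversation[1][0]["content"])
```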

Hierarchical Prompt Structuring

Prompt Engineering 2.0 introduces hierarchical prompt structuring, where prompts are organized in layers that define goals and contextual references. This modular structure helps AI models handle complex queries by breaking them into smaller, interlinked components. For instance, a context-aware assistant might use a hierarchy in which one layer defines user intent and another provides domain knowledge. This tiered strategy reduces noise in the prompt and helps the model prioritize pertinent information.

Reinforcement through Feedback Loops

Feedback loops are essential to improving AI performance in Prompt Engineering 2.0. User feedback is continuously incorporated into the system to improve subsequent interactions. The system uses reward mechanisms to refine its behavior, whether that means adjusting the tone for empathy or the wording for clarity. As a result, responses gradually align more closely with user preferences and contextual reality, forming a closed-loop learning system.

Ethical Design

Ethics is no longer an afterthought; it’s a fundamental pillar of Prompt Engineering 2.0. Designing context-aware systems means being sensitive to issues such as bias and privacy. AI systems must produce replies that respect cultural settings and handle user data with care. AI developers now build ethical constraints explicitly into the prompt design, so that the AI’s output is both accurate and socially responsible.

Personalization and Memory

A defining principle of this new generation of prompt engineering is personalization through memory. Context-aware AI systems maintain a structured form of memory that helps tailor responses to each user’s preferences and needs. For example, an AI-powered learning platform can recall a student’s previous lessons and identify weak areas. This personalization transforms AI from a generic assistant into a continuously learning partner.

What Are Context-Aware AI Systems?

Context-aware AI systems represent a major leap forward in AI, marking the transition from reactive models to truly adaptive systems. These systems don’t just process raw inputs; they interpret them in context. Context can mean different things depending on the scenario, such as the current user input and past interactions. By taking these elements into account, context-aware AI systems deliver more accurate responses.

In traditional AI systems, inputs are often treated in isolation: each question or prompt is processed as if it were entirely new. However, humans don’t communicate in a vacuum. Every statement we make is influenced by prior experiences and goals. Context-aware AI systems mimic this human understanding by interpreting each user interaction within a broader informational framework.

Types of Contexts AI Can Understand

Context-aware AI systems rely on multiple layers of context to function effectively:

- Linguistic Context: Being aware of the relationships between words in a phrase. For example, differentiating between a bank as a riverbank and a bank as a financial entity.

- Situational Context: Identifying the setting of the interaction, such as distinguishing between a professional question and a casual chat.

- Temporal Context: Using time based data, such as recalling historical occurrences or spotting patterns across time.

- User Context: Factoring in user preferences and interaction history to personalize responses.

What Are the Techniques for Building Context-Aware Prompt Systems?

Developing context-aware prompt systems requires a careful blend of language design and engineering. These systems do more than send instructions to an AI model: they create intelligent workflows in which context and purpose are built into the prompt structure.

Layered Prompt Structuring

Layered prompt structuring, in which prompts are separated into several interconnected layers, is one of the most effective methods in context-aware prompt design. Each layer has a distinct function.

A typical layered prompt may include:

- Instruction Layer: This layer defines what the AI must do.

- Context Layer: This layer provides relevant background or history.

- Constraint Layer: This layer sets limits or style preferences.

- Feedback Layer: This layer incorporates results from previous outputs for refinement.

By stacking these layers, developers can keep prompts clear while giving the AI the depth of information it needs to produce logical, contextually appropriate replies. This modular design is crucial for long-running or multi-turn conversations, where the AI must recall prior content while adapting to new input.
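The four layers can be kept as separate fields and only joined into a single string at the last moment. The `LayeredPrompt` class below is a hypothetical sketch of that idea; the section titles and rendering format are assumptions, not a standard.

```python
from dataclasses import dataclass, field


@dataclass
class LayeredPrompt:
    """Holds the four layers separately so each can be edited on its own."""

    instruction: str
    context: list[str] = field(default_factory=list)
    constraints: list[str] = field(default_factory=list)
    feedback: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Join the non-empty layers into one prompt string, in a fixed order."""
        sections = [
            ("Instruction", [self.instruction]),
            ("Context", self.context),
            ("Constraints", self.constraints),
            ("Feedback", self.feedback),
        ]
        parts = []
        for title, lines in sections:
            if lines:
                body = "\n".join(f"- {line}" for line in lines)
                parts.append(f"{title}:\n{body}")
        return "\n\n".join(parts)


layered = LayeredPrompt(
    instruction="Summarize the customer's ticket history.",
    context=["Customer reported a billing error last month."],
    constraints=["Keep it under 100 words.", "Use a neutral tone."],
)
rendered = layered.render()
print(rendered)
```

Since the feedback layer is empty here, it is left out of the rendered prompt; appending to `layered.feedback` after a first response would add it on the next render.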

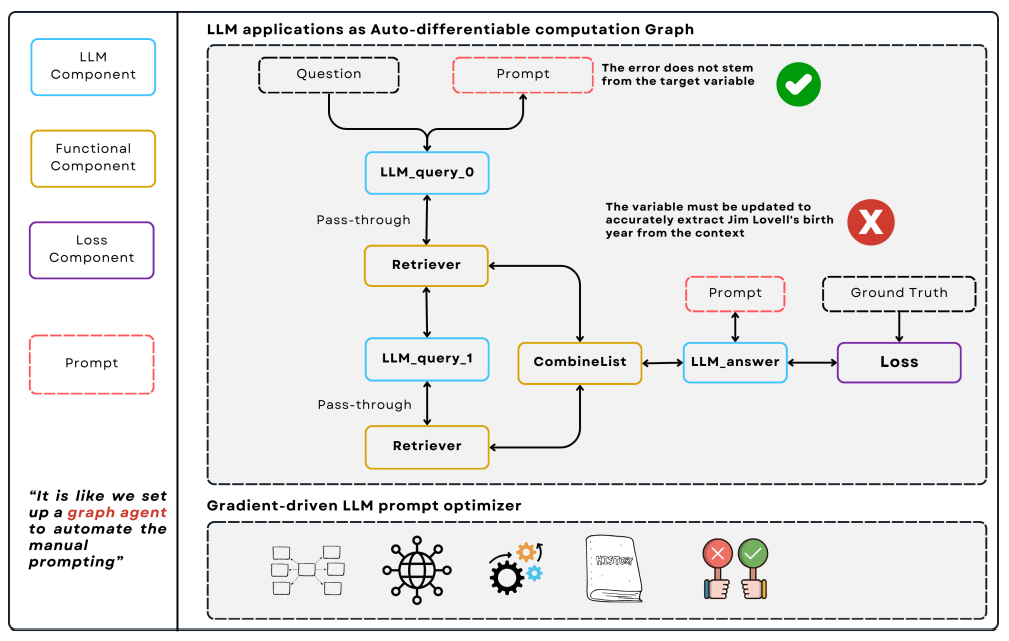

Embedding Context Retrieval

A major advancement in context aware systems comes from embedding-based retrieval techniques, where information from previous interactions or documents is stored as vector embeddings in a database.

When a user submits a new prompt, the system retrieves the most relevant contextual data by comparing vector similarities. This process enables semantic recall: the AI doesn’t just retrieve exact matches but also recognizes related meanings.

For instance, if a user previously discussed project budget cuts, the AI can automatically recall that discussion when asked later about cost-saving strategies, even though the exact wording differs. Tools like Chroma are often used to manage and query these embeddings efficiently, forming the backbone of retrieval-augmented generation (RAG) systems.
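The retrieval step itself is a nearest-neighbor search over vectors. The sketch below shows only that mechanic and makes a loud simplification: `embed` is a toy word-count vector, not a learned embedding, so it matches on shared words rather than meaning. A real system would call an embedding model and a vector store instead; all function names here are illustrative.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, store: list[str], k: int = 1) -> list[str]:
    """Return the k stored texts most similar to the query."""
    q = embed(query)
    ranked = sorted(store, key=lambda doc: cosine(q, embed(doc)), reverse=True)
    return ranked[:k]


history = [
    "The team planned the summer offsite.",
    "We discussed project budget cuts and reducing cost.",
    "New hire onboarding checklist was updated.",
]
print(retrieve("cost saving strategies", history))
```

With learned embeddings, the budget discussion would rank first even without the shared word "cost"; the toy vectors need that overlap to find it.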

Memory Management and Stateful Prompts

Context-aware systems depend heavily on stateful prompts, which retain memory across interactions. This memory can be either short term or long term.

Short-term memory allows an AI to handle ongoing discussions smoothly, recalling recent exchanges without explicit repetition. Long-term memory, on the other hand, enables persistent personalization, like remembering a user’s name or previous topics.

Memory management techniques often use structured data storage systems, like vector databases or key value pairs, to efficiently recall relevant past information while preventing memory overflow or content drift. Effective management ensures that the AI remembers what’s essential while discarding irrelevant noise.
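A minimal sketch of the two memory types, assuming a bounded queue for short-term turns and a plain key-value dictionary for long-term facts. The `ConversationMemory` class is hypothetical; a real system might back the long-term store with a database or vector index.

```python
from collections import deque


class ConversationMemory:
    """Short-term memory is a bounded queue; long-term memory is a key-value store."""

    def __init__(self, max_turns: int = 3) -> None:
        # With maxlen set, the oldest turn is dropped when a new one arrives.
        self.short_term: deque[str] = deque(maxlen=max_turns)
        self.long_term: dict[str, str] = {}

    def add_turn(self, text: str) -> None:
        self.short_term.append(text)

    def remember(self, key: str, value: str) -> None:
        self.long_term[key] = value

    def as_prompt_context(self) -> str:
        """Format both memories as text that can be placed ahead of a new prompt."""
        facts = "; ".join(f"{k}={v}" for k, v in self.long_term.items())
        recent = "\n".join(self.short_term)
        return f"Known facts: {facts}\nRecent turns:\n{recent}"


memory = ConversationMemory(max_turns=2)
memory.remember("name", "Ada")
for turn in ["first message", "second message", "third message"]:
    memory.add_turn(turn)
print(memory.as_prompt_context())
```

The `maxlen` bound is what prevents overflow here: the first turn is discarded once the third arrives, while the stored name persists.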

Dynamic Prompt Adaptation

A defining characteristic of Prompt Engineering 2.0 is the continuous improvement cycle enabled by feedback loops. Context-aware systems monitor their own performance, capture user feedback, and adjust future prompts accordingly.

This feedback-driven improvement lets AI systems adapt to users’ changing demands. For example, if users often repeat a query because the AI misinterpreted it, the system can learn to interpret similar queries better in the future. Over time, these small changes produce a conversational experience that is more tailored and flexible.
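The repeated-query example can be sketched as a simple rule: count how often a normalized query has been seen and, past a threshold, add a corrective instruction to the prompt. `AdaptivePrompter` and its threshold are hypothetical; real systems would likely use richer signals than exact repetition.

```python
from collections import Counter


class AdaptivePrompter:
    """Adds a corrective instruction once a query has been repeated."""

    def __init__(self, repeat_threshold: int = 2) -> None:
        self.repeat_threshold = repeat_threshold
        self.seen: Counter = Counter()

    def build(self, query: str) -> str:
        key = " ".join(query.lower().split())  # normalize case and whitespace
        self.seen[key] += 1
        prompt = f"User query: {query}"
        if self.seen[key] >= self.repeat_threshold:
            # Repetition is treated as a signal that earlier answers missed the intent.
            prompt += (
                "\nNote: the user has asked this before. "
                "Restate your understanding of the request, then answer more specifically."
            )
        return prompt


prompter = AdaptivePrompter()
first = prompter.build("Reset my password")
second = prompter.build("reset   my PASSWORD")
print(second)
```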

Context Window Optimization

Most large language models operate within a context window, which limits how much data they can process at once. To manage long or intricate interactions, developers use context window optimization strategies so that only pertinent information is included in the prompt.

Common strategies include:

- Context Summarization: Compressing prior dialogue into concise summaries.

- Sliding Windows: Retaining only the most recent and relevant interactions.

- Hierarchical Memory: Storing detailed information externally and feeding summarized insights into the prompt.
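The first two strategies can be combined: keep the newest turns that fit a budget and replace everything older with a summary. The sketch below rests on two loud assumptions: `count_tokens` uses word counts as a rough proxy for tokens, and `summarize` is a placeholder that truncates each turn rather than calling a model. All names are illustrative.

```python
def count_tokens(text: str) -> int:
    """Rough proxy: whitespace-separated words. Real tokenizers count differently."""
    return len(text.split())


def summarize(turns: list[str]) -> str:
    """Placeholder summary: keeps the first five words of each older turn."""
    return "Summary of earlier turns: " + " | ".join(
        " ".join(turn.split()[:5]) for turn in turns
    )


def fit_to_window(turns: list[str], budget: int) -> list[str]:
    """Keep the newest turns that fit the budget; summarize everything older."""
    kept: list[str] = []
    used = 0
    for turn in reversed(turns):
        cost = count_tokens(turn)
        if used + cost > budget:
            break
        kept.append(turn)
        used += cost
    kept.reverse()
    older = turns[: len(turns) - len(kept)]
    return ([summarize(older)] if older else []) + kept


dialogue = [
    "I want to plan a product launch for our new headphones next quarter",
    "What budget do we have",
    "Around fifty thousand dollars",
]
for line in fit_to_window(dialogue, budget=10):
    print(line)
```

Note that the summary line is not counted against the budget in this sketch; a fuller version would reserve part of the budget for it.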

Final Words

Prompt Engineering 2.0 moves AI interaction beyond basic commands, toward systems that take context and intent into account. Context-aware AI systems built with adaptive design provide tailored experiences that bridge the gap between human communication and machine comprehension.