According to market estimates, the Edge AI market could reach $269.82 billion within the next six years, while the cloud AI market is projected to grow to $589.22 billion over the same period. AI has evolved quickly, but one of the biggest transformations in the field isn’t just about smarter algorithms; it’s about where those algorithms run.

For years, the cloud served as the backbone of AI innovation, powering everything from enterprise analytics to intelligent apps. But now that the world is more connected and devices produce massive amounts of data every second, the cloud alone is no longer enough.

This is where Edge AI can help. It’s an approach where data processing and AI inference happen directly on local devices.

In this guide, we’ll compare Edge AI and Cloud AI, highlight their differences, and discuss their applications and implementation challenges.

Edge AI

Edge AI enables AI inference at the device level. The trained model may be created in the cloud, but once optimized and deployed, it runs locally on specialized chips such as:

- Neural Processing Units (NPUs)

- Graphics Processing Units (GPUs)

- Tensor Processing Units (TPUs)

- Low-power microcontrollers

- AI accelerators designed specifically for real-time processing

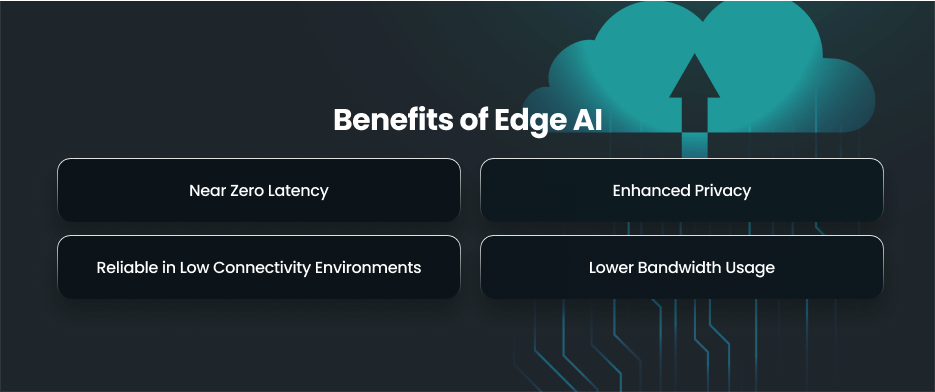

Benefits of Edge AI

Near-Zero Latency

Because data doesn’t have to travel to the cloud and back, decisions can be made almost instantly. This is crucial for autonomous vehicles and medical devices.

Enhanced Privacy

Because data stays on the device, there is less opportunity for it to be intercepted or exposed in a breach, and regulatory compliance becomes easier.

Reliable in Low Connectivity Environments

Edge AI works even when devices are offline or in remote areas with unstable networks.

Lower Bandwidth Usage

Only relevant insights are sent to the cloud, which reduces network load and costs.
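Here is a minimal Python sketch of the bandwidth point. It assumes a hypothetical sensor that produces one reading every 100 ms. The device summarizes a one-minute window locally and uploads only the summary plus any readings above an illustrative threshold. The threshold, data, and function name are placeholders and are not taken from any particular product.

```python
import json
from statistics import mean

# Hypothetical threshold: readings above this value count as "relevant" events.
ALERT_THRESHOLD = 80.0


def summarize_on_device(readings, threshold=ALERT_THRESHOLD):
    """Reduce a window of raw readings to a compact summary for upload."""
    alerts = [(i, r) for i, r in enumerate(readings) if r > threshold]
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": max(readings),
        "alerts": alerts,  # only the readings that crossed the threshold
    }


# One minute of hypothetical 10 Hz readings (600 values) with a single spike.
raw = [70.0 + (i % 7) * 0.5 for i in range(600)]
raw[123] = 85.0

summary = summarize_on_device(raw)
raw_bytes = len(json.dumps(raw).encode())
summary_bytes = len(json.dumps(summary).encode())
print(f"raw upload: {raw_bytes} bytes, summary upload: {summary_bytes} bytes")
```

The cloud still learns that a spike happened and when. It just doesn't receive the 599 unremarkable readings around it.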

Cloud AI

Cloud AI is the traditional model, in which data is sent to centralized cloud servers for storage and processing. Major providers like AWS offer scalable tools and computing power for machine learning and large-scale AI applications.

Cloud AI excels at tasks that require immense computational resources:

- Training large neural networks

- Handling high-volume analytics

- Running complex NLP and generative AI models

- Managing massive datasets

Cloud environments also offer distributed computing and extensive storage, which makes them ideal for advanced AI workloads.

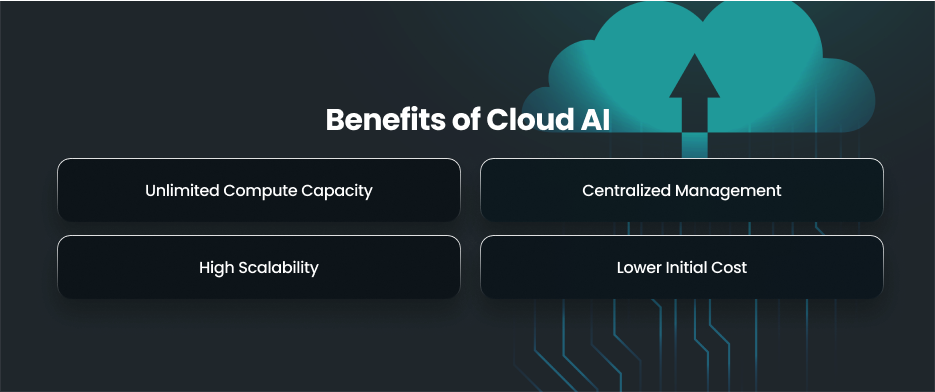

Benefits of Cloud AI

Virtually Unlimited Compute Capacity

Cloud platforms provide the power needed for heavy-duty model training and processing large datasets.

Centralized Management

Model updates and system maintenance happen in one place. This makes it easier to maintain accuracy and performance.

High Scalability

Cloud systems can scale up or down quickly based on user demand.

Lower Initial Cost

Businesses can start using AI with minimal investment by paying only for what they use.

Differences Between Edge and Cloud AI

Latency

Latency is one of the most important considerations when choosing between Edge and Cloud AI. Edge AI processes data directly on the device that generates it, so responses are nearly instantaneous. This is crucial for applications like driverless cars, where even a few milliseconds of delay could have fatal consequences.

Cloud AI, by contrast, must send data to centralized servers for processing and then return the results to the device. That round trip adds delay. The delay is acceptable for tasks like document translation or large-scale data analysis, but it can be too slow for time-critical applications.
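A quick worked calculation shows why those milliseconds matter. The sketch below converts a delay into the distance a vehicle travels while waiting for a decision. The latency figures are hypothetical round numbers chosen for illustration. They are not measurements of any real edge chip or cloud service.

```python
def distance_during_delay_m(speed_kmh, delay_ms):
    """Metres a vehicle travels at speed_kmh while waiting delay_ms milliseconds."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * delay_ms / 1000


# Hypothetical latency budgets in milliseconds (illustrative, not measured).
EDGE_INFERENCE_MS = 10
CLOUD_ROUND_TRIP_MS = 100
CLOUD_INFERENCE_MS = 10

budgets = {
    "edge": EDGE_INFERENCE_MS,
    "cloud": CLOUD_ROUND_TRIP_MS + CLOUD_INFERENCE_MS,
}

for label, delay in budgets.items():
    metres = distance_during_delay_m(100, delay)
    print(f"{label}: {delay} ms -> {metres:.2f} m travelled at 100 km/h")
```

At 100 km/h a car covers about 2.8 cm per millisecond. Every extra 100 ms of round-trip delay therefore adds roughly 2.8 m of travel before the system can react.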

Connectivity Requirements

Connectivity is another major difference. Because processing takes place locally, Edge AI can function well even where connectivity is weak or intermittent. This is especially helpful for mobile devices and rural locations that don’t always have reliable internet access.

Cloud AI, on the other hand, depends heavily on a reliable internet connection. Unreliable networks can degrade its performance and increase response times in real-time applications. As a result, Edge AI is more resilient to connectivity problems, while Cloud AI works best in environments with consistent network availability.
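One common way edge devices stay useful offline is a store-and-forward buffer. The device keeps producing results locally and queues anything destined for the cloud until the network returns. The sketch below is illustrative only. `StoreAndForward` and `fake_send` are hypothetical names, and a real device would persist the queue to storage rather than keep it in memory.

```python
from collections import deque


class StoreAndForward:
    """Queue results for the cloud and upload them whenever the network is available."""

    def __init__(self, send, max_items=1000):
        self.send = send  # uploads one item; raises ConnectionError when offline
        self.queue = deque(maxlen=max_items)  # oldest items drop if the buffer fills

    def record(self, item):
        """Queue a local result, then try to upload; connection failures are absorbed."""
        self.queue.append(item)
        return self.flush()

    def flush(self):
        """Upload queued items in order; stop at the first connection failure."""
        while self.queue:
            try:
                self.send(self.queue[0])
            except ConnectionError:
                return False  # still offline: keep the remaining items
            self.queue.popleft()
        return True


# Simulated network link for the example.
online = False
uploaded = []


def fake_send(item):
    if not online:
        raise ConnectionError("no network")
    uploaded.append(item)


outbox = StoreAndForward(fake_send)
outbox.record({"event": "motion", "t": 1})  # device offline: queued
outbox.record({"event": "motion", "t": 2})  # still queued
online = True                               # connectivity returns
outbox.flush()
print(len(outbox.queue), [item["t"] for item in uploaded])
```

Local detection never waits on the network. Only the upload of its results is deferred, and items arrive in their original order once the link is back.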

Data Security

Data security is another key differentiator. Edge AI keeps sensitive information on the device itself, which minimizes exposure to external threats and makes it easier to comply with strict data protection regulations. Applications that handle personal health information can benefit significantly from this local processing.

Cloud AI, however, involves transmitting data to remote servers and storing it there. Even with strong security measures, moving data back and forth introduces additional risk. This makes cloud-only deployments less desirable for highly sensitive information unless they are paired with strict compliance protocols.

Computational Power

The available computational resources differ substantially between Edge and Cloud AI. Edge devices have limited processing power and memory, so AI models must be optimized for efficiency. These devices often rely on specialized hardware like GPUs or low-power AI accelerators.
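Quantization is one widely used way to make a model fit edge hardware. The article does not name a specific technique, so this is an assumed example. The sketch below applies symmetric 8-bit quantization to a handful of hypothetical weights. Each float is stored as an integer in [-127, 127] plus one shared scale factor. This cuts storage from 4 bytes to 1 byte per weight, at the cost of a small rounding error.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w ≈ scale * q with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to approximate float weights."""
    return [scale * v for v in q]


weights = [0.42, -1.27, 0.003, 0.9, -0.5]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("int8 values:", q)
print(f"scale={scale:.4f}, max rounding error={max_error:.4f}")
# float32 needs 4 bytes per weight, int8 needs 1 (plus one shared scale).
print(f"float32: {4 * len(weights)} bytes, int8: {len(q)} bytes + scale")
```

Because each value is rounded to the nearest integer step, the reconstruction error per weight is at most half the scale. Production toolchains add refinements such as per-channel scales and calibration data, which are not shown here.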

Cloud AI can draw on tremendous computational resources, including high-performance GPUs and distributed computing systems. This makes the cloud ideal for training complex neural networks and for large-scale AI inference tasks that would be impractical on edge devices alone.

Scalability

Scalability is another area where Edge and Cloud AI diverge. Scaling Edge AI involves deploying physical devices across multiple locations, which can be complex and resource-intensive.

Cloud AI, conversely, offers almost limitless scalability with minimal setup. Resources can be scaled up or down on demand to match user demand or data growth. This makes Cloud AI particularly suitable for global applications and services that must handle variable workloads or rapidly shifting user bases.
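Cloud autoscalers generally size capacity from observed demand. The function below is a rough sketch of that idea and is not any provider's API. It picks how many instances are needed to serve a given request rate, bounded by a minimum and a maximum. The per-instance capacity and demand figures are hypothetical.

```python
import math


def instances_needed(requests_per_s, capacity_per_instance,
                     min_instances=1, max_instances=100):
    """Smallest instance count whose total capacity covers demand, within bounds."""
    needed = math.ceil(requests_per_s / capacity_per_instance)
    return max(min_instances, min(max_instances, needed))


# Hypothetical demand over a day (requests per second) and per-instance capacity.
demand = [40, 120, 950, 3000, 800, 60]
plan = [instances_needed(d, capacity_per_instance=250) for d in demand]
print(plan)  # [1, 1, 4, 12, 4, 1]
```

An edge deployment facing the same peak would need enough physical hardware installed in advance to cover it. The cloud plan simply grows to 12 instances and shrinks back afterward.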

Cost Structure

The financial models for Edge and Cloud AI differ significantly. Edge AI typically requires a higher upfront investment because of the specialized hardware and on-device AI chips it needs. However, ongoing operational costs may be lower, because local processing reduces bandwidth use and cloud service fees.

Cloud AI, on the other hand, lets organizations start with minimal capital investment thanks to pay-as-you-go pricing. While initial costs are lower, operational expenses can rise quickly with heavy computation and large data storage.
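The trade-off between upfront and ongoing costs can be framed as a break-even calculation. Edge hardware pays for itself once its cumulative monthly savings cover the initial outlay. The figures below are hypothetical. They ignore factors such as hardware refresh cycles and the cost of any cloud training that edge deployments may still need.

```python
import math


def breakeven_month(edge_upfront, edge_monthly, cloud_monthly):
    """First month in which cumulative edge cost <= cumulative cloud cost, or None."""
    monthly_saving = cloud_monthly - edge_monthly
    if monthly_saving <= 0:
        return None  # edge never catches up if it is not cheaper to run
    return math.ceil(edge_upfront / monthly_saving)


# Hypothetical costs in USD for a single deployment.
month = breakeven_month(edge_upfront=12_000, edge_monthly=150, cloud_monthly=900)
print(month)
```

With these numbers the edge deployment saves $750 per month and breaks even in month 16. At that point both options have cost $14,400 in total. Before then, the pay-as-you-go cloud option is cheaper.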

Updates

Updates and maintenance also differ between the two approaches. Distributing model updates across many devices is a logistical challenge for Edge AI, and keeping those devices consistent and synchronized adds operational complexity.

Cloud AI simplifies this process because models and updates are centralized. Any modification made on the server instantly applies to all users. This makes maintenance and performance monitoring far easier compared to distributed edge systems.
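Edge fleets are often updated in stages to reduce the risk of pushing a faulty model to every device at once. The sketch below splits a hypothetical fleet of 1,000 devices into cumulative waves of roughly 1%, 10%, 50%, and 100%. The wave fractions and device IDs are assumptions. A real rollout would also check device health after each wave before continuing, which is not shown.

```python
def rollout_waves(device_ids, fractions=(0.01, 0.10, 0.50, 1.0)):
    """Split a fleet into cumulative rollout waves for a staged model update."""
    ordered = sorted(device_ids)  # deterministic order, so re-runs give the same waves
    waves, done = [], 0
    for fraction in fractions:
        target = max(done, round(len(ordered) * fraction))
        waves.append(ordered[done:target])
        done = target
    return waves


fleet = [f"device-{i:04d}" for i in range(1000)]  # hypothetical device IDs
waves = rollout_waves(fleet)
for n, wave in enumerate(waves, start=1):
    # In practice, each wave would be gated on health checks (not shown).
    print(f"wave {n}: {len(wave)} devices")
```

With 1,000 devices this yields waves of 10, 90, 400, and 500 devices. In a cloud deployment the equivalent step is usually a single model swap on the server, which is why centralized updates are simpler.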

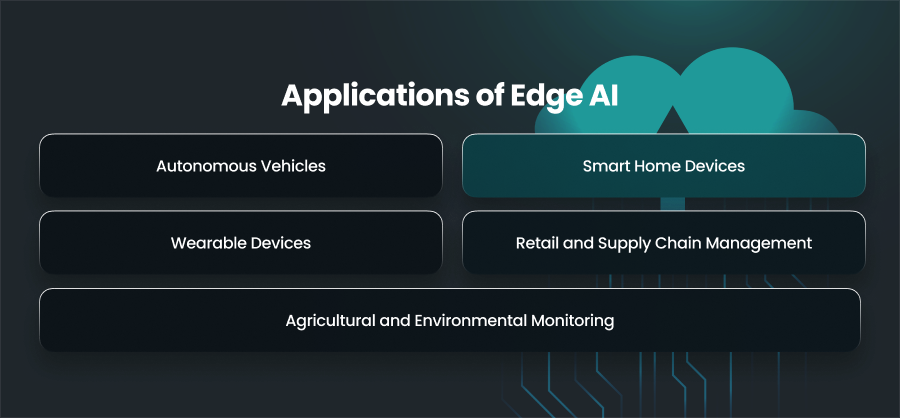

Applications of Edge AI

Autonomous Vehicles

One of the best-known applications of Edge AI is in autonomous cars. To travel safely, self-driving cars must analyze massive volumes of sensor data in real time, and the information streams produced by cameras and LiDAR require immediate analysis. Edge AI allows vehicles to detect obstacles and monitor lane position on board, without waiting on a network connection.

Smart Home Devices

Edge AI is becoming increasingly common in consumer devices, particularly in smart homes. Gadgets like voice assistants and home automation systems use on-device intelligence to respond more quickly and protect privacy. For example, a smart camera does not need to send data to the cloud to detect motion or recognize unusual activity. As a result, homeowners benefit from improved security and faster notifications.

Wearable Devices

Healthcare is another industry where Edge AI is proving valuable. Wearable technology, such as heart rate monitors and fitness trackers, uses Edge AI to track patients’ health in real time. By analyzing data locally, these devices can identify irregularities and notify users of possible health problems. For instance, a wearable cardiac monitor can detect abnormal heartbeats immediately and send out an emergency alert without waiting for cloud processing.
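The local analysis a wearable performs can be as simple as a rule applied to a short window of readings. The sketch below raises an alert only when several consecutive heart rate readings fall outside a range, so a single noisy reading does not trigger it. The thresholds and window size are hypothetical placeholders, not clinical guidance. Real devices typically use more sophisticated models.

```python
from collections import deque


class HeartRateMonitor:
    """Flag sustained abnormal heart rate on the device, with no cloud round trip."""

    def __init__(self, low_bpm=40, high_bpm=150, window=5):
        # Hypothetical limits for illustration only, not clinical guidance.
        self.low, self.high = low_bpm, high_bpm
        self.recent = deque(maxlen=window)

    def update(self, bpm):
        """Add one reading; return "ALERT" if every reading in a full window is out of range."""
        self.recent.append(bpm)
        window_full = len(self.recent) == self.recent.maxlen
        if window_full and all(r < self.low or r > self.high for r in self.recent):
            return "ALERT"
        return "ok"


monitor = HeartRateMonitor()
stream = [72, 75, 180, 74, 160, 165, 170, 172, 168]  # hypothetical bpm readings
statuses = [monitor.update(bpm) for bpm in stream]
print(statuses)
```

Here the isolated 180 bpm reading is ignored. The alert fires only on the final reading, once five readings in a row exceed the upper limit.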

Retail and Supply Chain Management

By enabling real-time insights at the source, Edge AI is transforming supply chains and retail businesses. In-store sensors and smart cameras can monitor consumer behavior and identify inventory shortages, allowing retailers to restock products proactively and optimize store layouts.

In supply chain management, edge-enabled sensors in warehouses and on logistics equipment can monitor inventory levels.

Agricultural and Environmental Monitoring

Edge AI is helping agriculture by making real-time environmental monitoring and precision farming possible. Drones and field sensors with edge processing capabilities can assess soil conditions and irrigation needs on the spot. Without uploading large volumes of raw data to online servers, farmers can optimize fertilizer use and detect crop diseases or pests early. Similarly, environmental monitoring stations use Edge AI to observe animal behavior or detect pollution levels in real time.

Applications of Cloud AI

AI Model Training

One of the main uses of Cloud AI is training and building complex AI models. Training deep neural networks or natural language processing models takes a lot of computing power and access to large datasets. Cloud AI platforms provide the GPUs and distributed computing infrastructure needed to handle terabytes of data efficiently. For example, training a generative AI model capable of producing high-quality text or images would be very difficult on a local device.

Enterprise Analytics

Cloud AI fuels enterprise analytics by letting businesses gather and examine enormous datasets from many sources. Centralized AI systems can uncover global insights from consumer behavior and financial transactions, and cloud analytics tools can produce predictive forecasts and actionable insights quickly. A global retail chain, for instance, can use Cloud AI to tailor inventory plans by analyzing sales data from hundreds of locations.

Generative AI

Cloud AI has enabled the rapid growth of advanced NLP services and generative AI tools. Chatbots and content creation systems rely on the cloud to run complex language models and produce accurate answers. Because these models need substantial processing power and frequent updates, centralized cloud processing is crucial. For example, virtual customer support agents can offer tailored replies in real time while the underlying AI models are continuously improved in the cloud.

Fraud Detection

Cloud AI is widely used to strengthen cybersecurity and identify fraudulent behavior. Financial institutions and digital payment systems use cloud-based AI models to track transactions and flag potential risks in real time. These systems can examine activity across many accounts and uncover anomalies that would be difficult to spot locally. Cloud AI enables centralized threat monitoring, so businesses can react swiftly.
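The advantage of a central view can be illustrated with a simple rule-based check: flag any device fingerprint used by more accounts than expected. This is a stand-in for the AI models the text describes, not a production fraud system. No single account's data would reveal the pattern; it only emerges when transactions from many accounts are aggregated. The field names and threshold are hypothetical.

```python
from collections import defaultdict


def flag_shared_devices(transactions, max_accounts=3):
    """Return device fingerprints used by more than max_accounts distinct accounts.

    The pattern is only visible when transactions from many accounts are
    aggregated in one place; each account's own history looks normal.
    """
    accounts_by_device = defaultdict(set)
    for tx in transactions:
        accounts_by_device[tx["device"]].add(tx["account"])
    return {
        device: sorted(accounts)
        for device, accounts in accounts_by_device.items()
        if len(accounts) > max_accounts
    }


# Hypothetical transactions collected centrally from many accounts.
transactions = [
    {"account": "A1", "device": "d-001", "amount": 25.00},
    {"account": "A2", "device": "d-002", "amount": 14.50},
    {"account": "A3", "device": "d-999", "amount": 480.00},
    {"account": "A4", "device": "d-999", "amount": 495.00},
    {"account": "A5", "device": "d-999", "amount": 470.00},
    {"account": "A6", "device": "d-999", "amount": 499.00},
]
flagged = flag_shared_devices(transactions)
print(flagged)
```

Each of accounts A3 to A6 made a single, plausible purchase. Only the aggregated view shows that all four came from the same device.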

Global Retail

Cloud AI has transformed the retail and eCommerce sectors by powering inventory management and personalized recommendations. Centralized AI algorithms analyze the browsing and purchase patterns of millions of consumers. For example, eCommerce giants use predictive analytics to optimize supply chains and Cloud AI to provide real-time product recommendations.

Challenges in Implementing Edge and Cloud AI

Infrastructure Limitations

Both Edge and Cloud AI require strong infrastructure, but in different areas. Because most computation and data storage take place remotely, Cloud AI requires stable network access and high-speed internet connectivity. In areas with poor or unreliable connections, cloud-based AI systems may experience delays or degraded performance.

Local hardware capabilities play a major role in Edge AI. Edge devices must have enough processing power and memory to handle advanced AI models.

Latency Challenges

While Edge AI is designed to reduce latency by processing data locally, maintaining real-time performance across many distributed edge devices can be challenging. This is especially true when devices operate in environments with fluctuating power supplies or suffer physical wear.

Cloud AI can struggle with latency because data must travel back and forth between devices and remote servers. This round trip can be problematic for time-sensitive use cases.

Integration with Legacy Systems

Integrating modern AI technologies with old systems is one of the biggest adoption barriers. It is challenging to enable advanced AI capabilities since many businesses still employ outdated hardware and monolithic software structures. Moving these systems to cloud platforms requires a great deal of planning and effort.

Similarly, adapting industrial or legacy machinery to run Edge AI workloads sometimes requires specialized sensors or middleware, all of which increase complexity and expense.

Final Words

Edge AI and Cloud AI each bring powerful capabilities to modern technology. Edge AI delivers real-time processing close to the data, while Cloud AI provides large-scale compute and centralized intelligence. Together, they are shaping automation and analytics. By understanding their differences and uses, businesses can build more intelligent and effective AI solutions.