The AI market is projected to grow to $4.8 trillion over the next seven years, according to UN Trade and Development (UNCTAD), as companies increasingly make AI a top priority. Businesses are investing heavily in artificial intelligence to automate processes. However, many projects never get past the testing stage.

You’ll often hear stories about impressive AI demonstrations or highly accurate machine learning models that never become finished products. The gap between AI experiments and AI products is one of the biggest problems confronting businesses.

This guide covers what AI experiments and AI products actually are and how they differ from one another.

AI Experiments

AI experiments are the earliest and most exploratory phase of an organization’s artificial intelligence journey. Teams are not attempting to create a final product at this point. Rather, they are concentrating on determining whether AI can actually solve a specific problem and whether it is worth investing in.

An AI experiment is essentially a learning exercise. It helps teams reduce uncertainty before committing resources to full-scale development. These experiments are often led by data scientists or research-focused teams and are usually conducted in controlled, low-risk environments.

Common forms of AI experiments include proofs of concept, algorithm comparisons, internal prototypes, and feasibility studies. They typically live in notebooks or temporary cloud setups rather than production systems.

Characteristics of AI Experiments

Exploratory and Short Term

AI experiments are designed to be fast and iterative. Teams test hypotheses, tweak parameters, and try different approaches without worrying about long-term maintainability.

Limited and Controlled Data

Experiments often rely on small and curated datasets. These datasets may be historical or cleaned extensively to remove inconsistencies that would otherwise complicate early testing.

Focus on Model Metrics

Evaluation is primarily based on technical performance indicators such as accuracy, precision, recall, loss, or confusion matrices. Broader system-level concerns are usually set aside at this stage.
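To make these indicators concrete, here is a minimal sketch that derives accuracy, precision, and recall from the four cells of a binary confusion matrix. The labels are made up for illustration, and the function names are hypothetical; in a real notebook a library such as scikit-learn would usually do this work.

```python
from collections import Counter

def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary task."""
    counts = Counter()
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            counts["tp" if truth == positive else "fp"] += 1
        else:
            counts["fn" if truth == positive else "tn"] += 1
    return counts

def summarize(y_true, y_pred, positive=1):
    """Derive the usual model-centric metrics from the confusion counts."""
    c = confusion_counts(y_true, y_pred, positive)
    total = sum(c.values())
    predicted_pos = c["tp"] + c["fp"]
    actual_pos = c["tp"] + c["fn"]
    return {
        "accuracy": (c["tp"] + c["tn"]) / total if total else 0.0,
        "precision": c["tp"] / predicted_pos if predicted_pos else 0.0,
        "recall": c["tp"] / actual_pos if actual_pos else 0.0,
    }

# Hypothetical labels, for illustration only.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = summarize(y_true, y_pred)
print(metrics)
```

Numbers like these say how well the model fits the test set, but nothing about latency, cost, or whether anyone will use the result.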

Minimal Engineering Overhead

Code quality and security are not top priorities. Scripts may be tightly coupled or written quickly to validate ideas rather than to endure long-term use.

Low Operational Risk

Since AI experiments are not exposed to end users or critical systems, failures are acceptable and even expected. This freedom allows developers to explore creatively.

Tools and Environments

AI experiments commonly use tools such as:

- Jupyter or Colab notebooks

- Python libraries like TensorFlow

- Local machines or limited cloud resources

- Sample databases or static CSV files

AI Products

AI products are production-ready solutions that use artificial intelligence to deliver consistent value in real-world environments. Unlike AI experiments, AI products are built for reliability and long-term use.

An AI product is not just a trained model. It is a complete system where AI is embedded into business processes or operational workflows. The model may be the brain, but data pipelines and governance mechanisms are just as much a part of the product.

Characteristics of AI Products

Built for Real Users

AI products are designed with end users in mind. The outputs must be understandable and actionable; raw predictions alone are rarely sufficient.

Production-grade Reliability

AI products must function consistently in real-world conditions. This means handling incomplete or poor-quality data and managing infrastructure issues without disrupting company operations.
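One way to handle bad input without disrupting anything downstream is to validate every record before it reaches the model and fall back to a safe default when validation fails. The sketch below assumes two hypothetical numeric features (`age`, `monthly_spend`) and a stand-in `toy_model`; none of these names come from a real system.

```python
import math

REQUIRED_FIELDS = ("age", "monthly_spend")  # hypothetical feature names

def validate_features(record):
    """Return (clean_features, problems) for one incoming record."""
    problems = []
    clean = {}
    for field in REQUIRED_FIELDS:
        value = record.get(field)
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            problems.append(f"{field}: missing or not numeric")
        elif math.isnan(value) or math.isinf(value):
            problems.append(f"{field}: not a finite number")
        else:
            clean[field] = float(value)
    return clean, problems

def predict_or_fallback(record, model, fallback=None):
    """Call the model only on valid input; otherwise return a safe default."""
    features, problems = validate_features(record)
    if problems:
        return {"prediction": fallback, "used_fallback": True, "problems": problems}
    return {"prediction": model(features), "used_fallback": False, "problems": []}

def toy_model(features):
    """Stand-in for a trained model."""
    return features["monthly_spend"] > 100

ok = predict_or_fallback({"age": 41, "monthly_spend": 250.0}, toy_model)
bad = predict_or_fallback({"age": None, "monthly_spend": float("nan")}, toy_model)
print(ok)
print(bad)
```

Returning the list of problems alongside the fallback makes it possible to log and count bad inputs instead of silently hiding them.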

Scalable Architecture

As usage grows, AI products must scale efficiently. This involves optimizing compute resources and tuning performance so that response times stay low.

Continuous Improvement

AI models degrade over time as user behavior changes. AI products include mechanisms for tracking performance and improving models to maintain accuracy and relevance.
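A common way to notice this kind of degradation early is to compare the distribution of a feature in live traffic with the distribution seen at training time. The sketch below uses the Population Stability Index (PSI), a technique chosen here for illustration rather than one the article prescribes. The bin count, the sample data, and the 0.25 alert threshold (a frequently quoted rule of thumb) are all assumptions.

```python
import math

def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a reference and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical feature values: training-time reference vs. shifted live data.
reference = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

DRIFT_THRESHOLD = 0.25  # assumed rule-of-thumb cutoff, tune per use case
print("no change:", psi(reference, reference))
print("shifted:  ", psi(reference, shifted) > DRIFT_THRESHOLD)
```

A check like this only signals that inputs have moved; deciding whether to retrain still requires looking at actual model performance.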

Integrated Into Existing Systems

Most AI products don’t function independently. To fit smoothly into business operations, they integrate with existing systems such as databases or CRMs.

Components of a Typical AI Product

An AI product is made up of multiple interconnected components, including:

- Data ingestion pipelines to collect and process data in real time or batches

- Model serving layers that expose predictions through APIs or services

- Application logic that translates predictions into actions

- User interfaces that present insights or recommendations clearly

- Systems for tracking performance

- Layers of security and compliance to safeguard information
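The way these components hand off to one another can be sketched as a chain of small functions. Everything below is hypothetical: the churn scenario, the field names, the scoring rule standing in for a trained model, and the 0.5 threshold are assumptions chosen only to show the flow from raw data to a user-facing message.

```python
from dataclasses import dataclass

@dataclass
class Prediction:
    customer_id: str
    churn_probability: float

def ingest(raw_rows):
    """Data ingestion: parse raw rows and skip ones that cannot be used."""
    for row in raw_rows:
        if row.get("customer_id") and row.get("days_inactive") is not None:
            yield {
                "customer_id": row["customer_id"],
                "days_inactive": int(row["days_inactive"]),
            }

def serve(features):
    """Model serving: a simple scoring rule in place of a trained model."""
    score = min(features["days_inactive"] / 60, 1.0)
    return Prediction(features["customer_id"], score)

def decide(prediction, threshold=0.5):
    """Application logic: translate a score into an action."""
    return "offer_discount" if prediction.churn_probability >= threshold else "no_action"

def present(prediction, action):
    """User interface: a readable message instead of a raw number."""
    return (f"Customer {prediction.customer_id}: "
            f"{prediction.churn_probability:.0%} churn risk -> {action}")

raw = [
    {"customer_id": "c1", "days_inactive": "45"},
    {"customer_id": "", "days_inactive": "3"},   # unusable row, skipped
    {"customer_id": "c2", "days_inactive": 6},
]
messages = []
for features in ingest(raw):
    p = serve(features)
    messages.append(present(p, decide(p)))
print("\n".join(messages))
```

Monitoring and security layers are left out of this sketch; in a real product they would wrap each of these steps.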

Differences Between AI Experiments and Products

| Aspect | AI Experiments | AI Products |
| --- | --- | --- |
| Purpose | Exploration and validation. | Outcome driven. |
| Data Scope & Complexity | Small, curated, clean datasets. Often static or historical. | Dynamic and real world datasets. Handles missing values and real time streams. Complies with privacy and regulatory requirements. |
| Evaluation Metrics | Model centric metrics like accuracy or loss functions. | System level and business metrics: uptime, user adoption, and business KPIs. |
| Infrastructure & Scalability | Local machines or temporary cloud environments. Limited scalability. | Production grade infrastructure with auto scaling and cost optimization. |
| Code Quality | Quick scripts and minimal documentation. Tightly coupled code. | Modular and production ready code. |
| User Experience | Raw outputs intended for technical audiences. | Clear and actionable insights with intuitive UI and consistent behavior to gain user trust. |
| Risk & Accountability | Low stakes. Failures are contained. | High stakes. Incorrect predictions or bias can cause financial or legal issues. |
| Maintenance & Ownership | Typically short lived; ends after insights are gathered. | Continuous monitoring and feature updates are required. |
| Team Involvement | Small and specialized teams. | Cross functional teams including data scientists, developers, product managers, and MLOps. |

What is the Role of Developers in Turning Experiments into Products?

Production Ready Architecture

One of the primary responsibilities of AI developers is designing production-ready architectures. Experimental AI code often runs in isolated notebooks or temporary cloud environments, which are unsuitable for live, high-volume usage. Developers take these prototypes and transform them into reliable systems.

This includes building APIs to serve model predictions and integrating databases for live data. It also includes automating backend workflows and implementing security and authentication layers.
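As a rough sketch of what the serving and authentication pieces involve, the handler below checks a bearer token, validates the JSON payload, and returns a status code with a JSON body. It is framework-agnostic on purpose; the token value, the payload shape (`{"features": [...]}`), and `toy_model` are assumptions for illustration, and a real service would sit behind a web framework and load secrets from a secret store.

```python
import hmac
import json

API_TOKEN = "replace-me"  # hypothetical; never hard-code real secrets

def toy_model(features):
    """Stand-in for a trained model."""
    return sum(features) / len(features)

def handle_predict(headers, body):
    """Return (status_code, json_body) for one prediction request."""
    token = headers.get("Authorization", "").removeprefix("Bearer ")
    # Constant-time comparison avoids leaking token contents via timing.
    if not hmac.compare_digest(token.encode(), API_TOKEN.encode()):
        return 401, json.dumps({"error": "unauthorized"})
    try:
        features = json.loads(body)["features"]
        if not features or not all(isinstance(x, (int, float)) for x in features):
            raise ValueError("features must be a non-empty list of numbers")
    except (json.JSONDecodeError, KeyError, TypeError, ValueError) as exc:
        return 400, json.dumps({"error": str(exc)})
    return 200, json.dumps({"prediction": toy_model(features)})

auth = {"Authorization": "Bearer replace-me"}
print(handle_predict({}, "{}"))
print(handle_predict(auth, "not json"))
print(handle_predict(auth, '{"features": [1, 2, 3]}'))
```

Keeping the handler a plain function makes it easy to unit test before wiring it into whichever HTTP layer the team uses.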

Implementing MLOps Practices

Another crucial responsibility is implementing MLOps practices. MLOps ensures AI systems are not one-off experiments but sustainable products that can evolve over time. Developers use automated testing to verify system behavior and build automated pipelines for both code and models. They are also responsible for monitoring pipelines that check data quality and model performance, and for model versioning and rollback procedures.
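Model versioning and rollback can be illustrated with a very small in-memory registry. This is a sketch under simplifying assumptions: the `ModelRegistry` class is hypothetical, models are plain callables, and nothing is persisted. Production teams typically rely on a dedicated model registry service instead.

```python
class ModelRegistry:
    """In-memory registry that tracks versions and which one is live."""

    def __init__(self):
        self._models = {}    # version -> model callable
        self._history = []   # promoted versions, oldest first

    def register(self, version, model):
        if version in self._models:
            raise ValueError(f"version {version!r} already registered")
        self._models[version] = model

    def promote(self, version):
        """Make a registered version the live one."""
        if version not in self._models:
            raise KeyError(version)
        self._history.append(version)

    def rollback(self):
        """Revert to the previously promoted version and return its name."""
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._history.pop()
        return self._history[-1]

    @property
    def live_version(self):
        return self._history[-1] if self._history else None

    def predict(self, x):
        return self._models[self.live_version](x)

registry = ModelRegistry()
registry.register("v1", lambda x: x * 2)   # stand-in models
registry.register("v2", lambda x: x * 3)
registry.promote("v1")
registry.promote("v2")
with_v2 = registry.predict(10)
restored = registry.rollback()
with_v1 = registry.predict(10)
print(with_v2, restored, with_v1)
```

Because old versions stay registered, a rollback is a pointer change rather than a redeployment.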

Monitoring

AI tools must perform reliably in dynamic environments. Developers use monitoring systems to continually track model outputs and system health, identify anomalies, and send alerts about possible problems. Proactive monitoring is crucial if AI models are to keep producing correct results over time.
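A minimal sketch of such a monitor is a rolling window over recent prediction outcomes that raises an alert when the error rate crosses a threshold. It assumes that ground truth eventually becomes available for each prediction; the `RollingMonitor` class, the window size, and the thresholds are hypothetical values chosen for the example.

```python
from collections import deque

class RollingMonitor:
    """Track recent prediction outcomes and flag a high error rate."""

    def __init__(self, window=100, max_error_rate=0.2, min_samples=20):
        self.errors = deque(maxlen=window)  # True means the prediction was wrong
        self.max_error_rate = max_error_rate
        self.min_samples = min_samples

    def record(self, was_correct):
        self.errors.append(not was_correct)

    def error_rate(self):
        return sum(self.errors) / len(self.errors) if self.errors else 0.0

    def check(self):
        """Return an alert message, or None when things look healthy."""
        if len(self.errors) < self.min_samples:
            return None  # too little data to judge
        rate = self.error_rate()
        if rate > self.max_error_rate:
            return (f"ALERT: error rate {rate:.0%} "
                    f"over last {len(self.errors)} predictions")
        return None

monitor = RollingMonitor(window=10, max_error_rate=0.3, min_samples=5)
for _ in range(10):
    monitor.record(True)      # ten correct predictions
healthy = monitor.check()
for _ in range(4):
    monitor.record(False)     # four wrong ones push out older results
degraded = monitor.check()
print(healthy, degraded)
```

In practice the alert string would be routed to a paging or chat system rather than printed.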

Collaboration Across Teams

Developers act as a bridge between data science, product management, operations, and business teams. They translate experimental models into features that meet user needs and business objectives. Effective teamwork includes defining product requirements with product managers and optimizing deployment with data scientists.

Optimization

Developers also concentrate on making AI systems more efficient and economical. Production-grade AI must produce results quickly without incurring high computational or storage costs. To balance speed and scalability, developers tune models and manage infrastructure resources.

Why Do Many AI Experiments Never Become AI Products?

Lack of Clear Business Alignment

One of the most common reasons AI experiments fail to become products is the absence of a well-defined business objective. Rather than being driven by an urgent commercial need, many experiments are started out of curiosity or a passion for technology. The model may perform well in theory yet lack a specific use case and success metric. Without clear alignment with business goals, leadership struggles to prioritize a production rollout or justify further spending.

Poor Data Readiness

Data issues are a major barrier to AI productization. Experiments often work with limited or manually prepared datasets. However, AI products require consistent and governed data pipelines. Inadequate data governance and missing data can completely stop progress. Even the most promising AI models cannot function in production without a solid data infrastructure.

Lack of MLOps Maturity

Many AI teams are strong in data science but lack software engineering and MLOps capabilities. Scalability and security are rarely considered when experimental code is written. As a result, when it is time to launch, teams find they have to rebuild large portions of the system. Without automated deployment pipelines and version control, AI systems become fragile and difficult to maintain.

Organizational Silos

AI product development requires close collaboration between different teams. However, many organizations operate in silos with limited communication. Data scientists may deliver a model without understanding product constraints, while engineering teams can lack context about model behavior.

Ethical Concerns

Ethical and legal issues become unavoidable as AI systems get closer to consumers. During experimentation, bias, explainability, and compliance requirements are frequently disregarded. When these problems eventually surface, organizations may decide that the risks of deployment outweigh the benefits.

Lack of Ownership

AI experiments are often treated as short-term initiatives, with no clear owner responsible for long-term success. However, AI products require ongoing monitoring and support. When ownership is unclear, a model can quickly become outdated or unreliable.

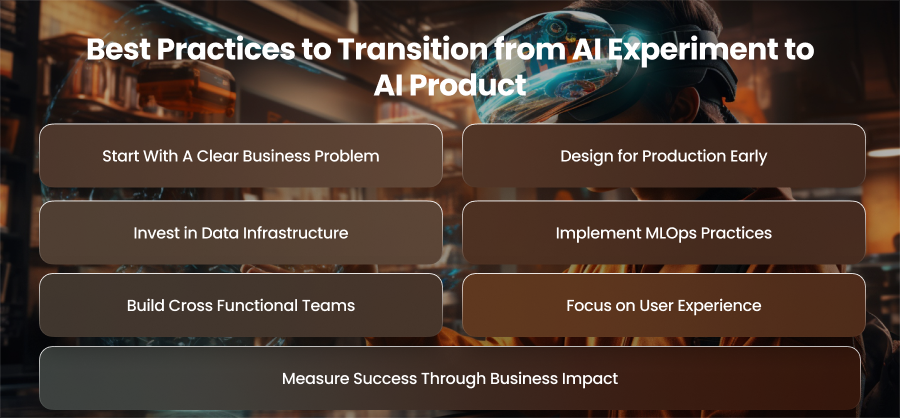

Best Practices to Transition from AI Experiment to AI Product

Start With A Clear Business Problem

A precise and quantifiable business problem must be identified before any AI project can be scaled. Experiments frequently focus exclusively on technical viability, but products need to solve a problem whose solution adds value. Start by clearly identifying the target users and the decision or process the AI will influence.

Design for Production Early

Even during the experimentation phase, it’s important to consider production requirements. This includes thinking about data pipelines and deployment constraints. Planning with production in mind makes it more likely that experiments will transition smoothly into working products and minimizes rework later on.

Invest in Data Infrastructure

Strong data infrastructure is necessary for dependable AI solutions. This includes systems for gathering and processing data that keep quality high and formats consistent across sources. Proper data pipelines also handle transformations and retrieval efficiently. Organizations should enforce governance and compliance standards at this stage so that data is ready for production.
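A small piece of that infrastructure is an automated quality check that runs on every incoming batch. The sketch below reports duplicate keys and the share of missing values per required column. The column names and sample rows are hypothetical, and real pipelines usually add type, range, and freshness checks on top.

```python
def quality_report(rows, key, required):
    """Summarize duplicate keys and missing values in one batch of records."""
    total = len(rows)
    seen, duplicates = set(), 0
    missing = {col: 0 for col in required}
    for row in rows:
        k = row.get(key)
        if k in seen:
            duplicates += 1
        seen.add(k)
        for col in required:
            if row.get(col) in (None, ""):
                missing[col] += 1
    return {
        "rows": total,
        "duplicate_keys": duplicates,
        "missing_rate": {col: (n / total if total else 0.0)
                         for col, n in missing.items()},
    }

# Hypothetical batch, for illustration only.
batch = [
    {"id": 1, "email": "a@example.com", "country": "DE"},
    {"id": 2, "email": "", "country": "FR"},
    {"id": 2, "email": "b@example.com", "country": "FR"},
    {"id": 3, "email": "c@example.com", "country": "US"},
]
report = quality_report(batch, key="id", required=["email", "country"])
print(report)
```

A pipeline can refuse to load a batch when any of these numbers exceeds an agreed limit, which turns data governance rules into something enforceable.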

Implement MLOps Practices

MLOps is crucial for sustaining AI products. Teams should set up automated processes for model training and deployment. MLOps practices such as model versioning and automated testing help keep models accurate and dependable over time.
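One form automated testing can take is a promotion gate: a check in the deployment pipeline that blocks a candidate model if it falls below a minimum bar or regresses against the current baseline. The function name, the metric names, and the thresholds below are assumptions picked for the example.

```python
def promotion_gate(baseline, candidate, min_accuracy=0.80, max_regression=0.01):
    """Return reasons to block a release; an empty list means the gate passes."""
    failures = []
    if candidate.get("accuracy", 0.0) < min_accuracy:
        failures.append(f"accuracy below minimum of {min_accuracy}")
    for name, base_value in baseline.items():
        new_value = candidate.get(name)
        if new_value is None:
            failures.append(f"{name}: missing from candidate metrics")
        elif base_value - new_value > max_regression:
            failures.append(f"{name}: regressed from {base_value} to {new_value}")
    return failures

# Hypothetical evaluation results on a fixed hold-out set.
baseline = {"accuracy": 0.86, "recall": 0.75}
good_candidate = {"accuracy": 0.88, "recall": 0.75}
bad_candidate = {"accuracy": 0.87, "recall": 0.60}

print(promotion_gate(baseline, good_candidate))
print(promotion_gate(baseline, bad_candidate))
```

Running a gate like this on every training run keeps a model that improves one metric at the expense of another from reaching users unnoticed.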

Build Cross Functional Teams

Data scientists, product managers, MLOps teams, and business stakeholders must work together to turn experiments into products. Cross-functional communication helps ensure that the product meets technical specifications and fits existing processes. Silos between teams often lead to poor productization, even when an experiment is technically sound.

Focus on User Experience

AI products are used by humans, so user experience is critical. Developers and designers should build interfaces that present predictions, practical suggestions, and an explanation of AI-driven decisions. Transparency and dependability build trust, and trust drives adoption.

Measure Success Through Business Impact

AI product success should be measured by real-world impact rather than model accuracy alone. Revenue growth and improved operational efficiency are two examples of such metrics. Aligning evaluation measures with business goals helps ensure that AI solutions provide real, long-lasting value.
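The difference between model accuracy and business impact can be shown with a short calculation that attaches a monetary value to each prediction outcome. All figures below are invented for illustration: the two models, their confusion counts, and the value per outcome are assumptions, not measurements.

```python
def accuracy(counts):
    """Share of correct predictions from confusion counts."""
    return (counts["tp"] + counts["tn"]) / sum(counts.values())

def business_value(counts, value_per_outcome):
    """Total value when each outcome type carries a gain or a cost."""
    return sum(counts[k] * value_per_outcome[k] for k in counts)

# Hypothetical churn models evaluated on the same 1,000 customers.
model_a = {"tp": 40, "fp": 10, "fn": 60, "tn": 890}
model_b = {"tp": 80, "fp": 110, "fn": 20, "tn": 790}

# Assumed value per outcome: a retained customer is worth 200, a wasted
# offer costs 20, a missed churner loses 200, a true negative is neutral.
values = {"tp": 200, "fp": -20, "fn": -200, "tn": 0}

for name, counts in (("A", model_a), ("B", model_b)):
    print(name, accuracy(counts), business_value(counts, values))
```

Under these assumed values, model A is the more accurate of the two but loses money, while model B is less accurate and clearly more valuable, which is why the choice of success metric matters.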

Final Words

AI experiments reveal what’s possible, but AI products deliver real business value. Bridging this gap requires strong engineering and reliable data. Organizations that focus on product thinking and developer-led execution are far more likely to turn promising AI ideas into effective solutions.