According to Hostinger, the LLM market will grow to $82.1 billion in the next eight years. Furthermore, LLM adoption has reached 67% as organizations embrace generative AI. LLMs are transforming the way businesses and developers build intelligent applications, from chatbots that can engage in human-like conversations to powerful tools for summarization and content generation.

FastAPI is a high-performance Python framework that is popular for building APIs and serving machine learning models. Its asynchronous features and smooth integration with Python libraries make it a strong option for turning your LLM into a production-ready service.

This article covers what you need to know to deploy an LLM using FastAPI, from environment setup to cloud scaling and performance optimization.

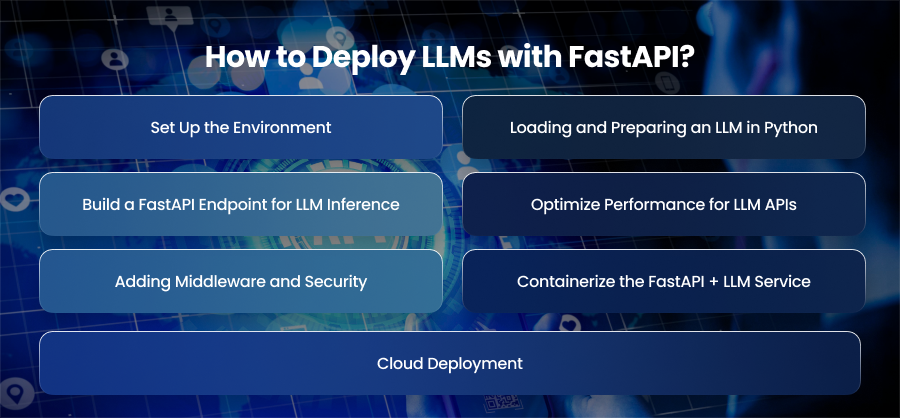

How to Deploy LLMs with FastAPI?

Set Up the Environment

Before coding your deployment pipeline, it’s essential to create a clean and reliable environment. Begin by making sure that a recent version of Python is installed on your computer, since many contemporary ML libraries require one. Next, create a virtual environment to keep your dependencies isolated and manageable and to prevent conflicts with other projects.

After that, install the essential packages: FastAPI for creating APIs and Uvicorn as the ASGI server to run your application. You also need Hugging Face Transformers for model loading and Pydantic for request and response validation. Transformers also needs a deep learning backend such as PyTorch to run models.

Finally, organize your project into a logical folder structure: for example, an app directory containing your FastAPI application files and utility scripts, along with a requirements.txt file to record dependencies.
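As a quick sanity check on the setup described above, the following minimal sketch reports whether the interpreter is recent enough and which of the listed packages are not importable yet. The minimum version `(3, 9)` and the helper names are assumptions made for illustration, not requirements stated by any of the libraries.

```python
import importlib.util
import sys

# Import names of the packages mentioned above; adjust to your own stack.
REQUIRED_PACKAGES = ("fastapi", "uvicorn", "transformers", "pydantic")
MIN_PYTHON = (3, 9)  # assumed minimum for this sketch


def python_is_recent(minimum=MIN_PYTHON):
    """Return True if the running interpreter meets the minimum version."""
    return sys.version_info[:2] >= minimum


def missing_packages(names=REQUIRED_PACKAGES):
    """Return the top-level packages from `names` that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def environment_report():
    """Summarize whether the environment looks ready for the service."""
    return {"python_ok": python_is_recent(), "missing": missing_packages()}
```

Calling `environment_report()` inside the activated virtual environment should show an empty `missing` list once everything in requirements.txt has been installed.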

Loading and Preparing an LLM in Python

With the environment ready, the next step is to load your large language model. Hugging Face’s Transformers library simplifies this process by giving users access to thousands of pre-trained models. For instance, you can start with GPT-2 for small tests or, if you have sufficient hardware resources, move to larger open models such as LLaMA. Loading a model typically involves initializing a text generation pipeline, which wraps tokenization, generation, and text decoding in one convenient function.

After the model has been loaded, run a quick inference to confirm that it generates sensible outputs. At this stage, you should also consider your hardware setup: models run faster on GPUs, although CPUs can be sufficient for smaller applications. If memory is a limitation, you may also look at quantization techniques, which store model weights at lower numerical precision to reduce memory use and computation, at the cost of some accuracy. Preparing the model carefully helps ensure that your FastAPI endpoints perform reliably once deployed.
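The loading and smoke-test steps above might look like the following sketch. It assumes the `transformers` package and a backend such as PyTorch are installed; the helper names `load_generator`, `generate_text`, and `smoke_test` are hypothetical, and `gpt2` is used only because it is small enough for a quick local test.

```python
from typing import Any, Callable, Dict, List

# A generator takes a prompt plus keyword options and returns a list of dicts.
Generator = Callable[..., List[Dict[str, Any]]]


def load_generator(model_name: str = "gpt2", device: int = -1) -> Generator:
    """Build a text-generation pipeline; device=-1 is CPU, 0 is the first GPU."""
    # Imported lazily so this module can be imported without loading the model stack.
    from transformers import pipeline

    return pipeline("text-generation", model=model_name, device=device)


def generate_text(generator: Generator, prompt: str, max_new_tokens: int = 50) -> str:
    """Run one generation and return the decoded text of the first result."""
    outputs = generator(prompt, max_new_tokens=max_new_tokens)
    return outputs[0]["generated_text"]


def smoke_test(generator: Generator, prompt: str = "Hello, my name is") -> bool:
    """Quick check that the model returns a non-empty string."""
    text = generate_text(generator, prompt, max_new_tokens=10)
    return isinstance(text, str) and text.strip() != ""
```

A typical first run would be `smoke_test(load_generator())`, which downloads the model weights on first use and can therefore take a while.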

Build a FastAPI Endpoint for LLM Inference

Once the model has been loaded, the next step is to create an API endpoint that lets other users interact with it. FastAPI makes this simple with an intuitive syntax for handling requests and creating routes. You start by creating an instance of a FastAPI application and defining a request model using Pydantic. This model specifies the format of the input data, including the prompt and the maximum text length.

A POST endpoint, such as /generate, then takes the request, runs inference on the model, and returns the generated content in JSON format. Once your app is complete, you can start it with Uvicorn and serve it at a local URL. Because FastAPI automatically creates interactive documentation at /docs, you can test the endpoint in your browser without any other tools.

Optimize Performance for LLM APIs

Performance optimization is crucial when serving large language models, especially if you expect high traffic or real-time responses. Using asynchronous endpoints, which let the server handle several requests concurrently, is among the most straightforward enhancements, provided that blocking model calls are moved off the event loop. Another useful strategy under heavy load is batching requests, which enables the model to evaluate several prompts in a single forward pass. If your application has to generate lengthy outputs, streaming replies token by token can greatly improve the user experience, especially for chatbots.
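One caveat with asynchronous endpoints is that a blocking model call inside an `async def` function stalls the whole event loop. The sketch below shows one way around this, using `asyncio.to_thread` (Python 3.9+) to run the call in a worker thread. `blocking_generate` is a hypothetical stand-in for a real model call.

```python
import asyncio
import time
from typing import List


def blocking_generate(prompt: str) -> str:
    """Stand-in for a real, blocking model call (hypothetical)."""
    time.sleep(0.05)  # simulates inference time
    return f"echo: {prompt}"


async def generate_async(prompt: str) -> str:
    """Run the blocking call in a worker thread so the event loop stays free."""
    return await asyncio.to_thread(blocking_generate, prompt)


async def handle_many(prompts: List[str]) -> List[str]:
    """Serve several prompts concurrently; results keep the input order."""
    return await asyncio.gather(*(generate_async(p) for p in prompts))
```

Inside an `async def` FastAPI endpoint, the same `await asyncio.to_thread(...)` pattern applies. Whether threads actually overlap during inference depends on the model backend, so measure before relying on it.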

On the hardware side, using GPUs can significantly cut inference time, and methods like quantization or mixed precision can increase model efficiency without significantly compromising quality. In some cases, specialized inference frameworks like vLLM or Hugging Face’s Text Generation Inference can further boost throughput.

Adding Middleware and Security

Reliability and security must be considered in the design of any API that serves an LLM. With middleware in FastAPI, you can add cross-cutting functionality such as request logging, performance monitoring, and graceful failure handling. Security should be a priority when deploying public endpoints. Implementing API key authentication is a typical first step to ensure that only authorized users can access the service.

Rate limiting is also crucial, since it stops malicious clients from flooding your system with requests. Configuring Cross-Origin Resource Sharing (CORS) so that only trusted frontends can call your backend from the browser helps reduce exposure to unwanted traffic. In addition to aiding debugging, logging requests and responses provides useful insight into usage trends.

Containerize the FastAPI + LLM Service

Containerization is the next step once the model and FastAPI service are running locally. Packaging your service in a Docker container makes it behave consistently whether it runs on a local computer or a cloud server. In a Dockerfile, you specify the base image, install the required dependencies, and define the startup command that launches Uvicorn. After building the image, you can run the container locally to make sure everything works as it should. Because identical containers can be replicated across many servers, containerization also facilitates scalability in addition to making deployments more predictable.

Cloud Deployment

With your service containerized, you are ready to deploy it to the cloud. Several cloud platforms offer GPU-backed instances, which are ideal for running LLMs. AWS provides options like EC2 for virtual machines and SageMaker for managed machine learning workflows. Google Cloud offers Cloud Run for serverless deployment and Vertex AI for advanced model hosting. Azure provides scalable deployments through Azure Kubernetes Service and Azure ML.

The standard deployment process starts with pushing your Docker image to a container registry. The image is then pulled onto the target cloud instances and run behind a load balancer for high availability. If autoscaling is enabled, your service can adjust to demand automatically. This helps keep performance consistent, although extra instances are billed while they run.

Best Practices for Deploying LLMs with FastAPI

Monitor Performance and Resource Usage

LLMs need a lot of computation, and hardware and workload affect how well they perform. Without effective monitoring, slow response times or high resource use could go unnoticed until they affect users. Prometheus for metrics collection and Grafana for visualization are good options for monitoring system health. You should measure key metrics such as response latency and memory consumption.
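To make the latency metric concrete, here is a small in-process sketch using only the standard library. It is not a replacement for Prometheus; the `LatencyTracker` class is hypothetical and simply shows what is being measured: the wall-clock duration of each request and percentiles over those samples (nearest-rank method).

```python
import math
import time
from contextlib import contextmanager


class LatencyTracker:
    """Collects request durations in seconds and reports percentiles."""

    def __init__(self):
        self.samples = []

    @contextmanager
    def track(self):
        """Time the enclosed block and record its duration, even on errors."""
        start = time.perf_counter()
        try:
            yield
        finally:
            self.samples.append(time.perf_counter() - start)

    def percentile(self, pct):
        """Nearest-rank percentile, e.g. pct=95 for p95 latency."""
        if not self.samples:
            raise ValueError("no samples recorded")
        ordered = sorted(self.samples)
        rank = max(1, math.ceil(pct / 100 * len(ordered)))
        return ordered[rank - 1]
```

Wrapping each model call in `with tracker.track():` and periodically logging `tracker.percentile(95)` gives a first view of tail latency before a full metrics stack is in place.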

Implement Caching for Frequent Queries

In many real-world applications, users send repetitive prompts. For example, a customer service bot may receive the same set of FAQs daily. Instead of recomputing results every time, implement caching to store frequently generated outputs. By using an in-memory store such as Redis or a simple in-process cache in your FastAPI application, you can reduce inference costs and significantly improve response times.
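The sketch below illustrates the in-process variant with a small least-recently-used cache keyed by a hash of the prompt and generation settings. The `GenerationCache` class is hypothetical, and caching like this only makes sense when generation is deterministic for a given input (for example, greedy decoding). A Redis-backed cache would follow the same key-then-lookup pattern.

```python
import hashlib
import json
from collections import OrderedDict


class GenerationCache:
    """LRU cache in front of a generate function (in-process, per worker)."""

    def __init__(self, generate_fn, max_entries=1024):
        self._generate_fn = generate_fn
        self._max_entries = max_entries
        self._entries = OrderedDict()
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt, max_new_tokens):
        """Stable key: identical prompt and settings map to the same hash."""
        payload = json.dumps(
            {"prompt": prompt.strip(), "max_new_tokens": max_new_tokens},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def generate(self, prompt, max_new_tokens=50):
        key = self._key(prompt, max_new_tokens)
        if key in self._entries:
            self._entries.move_to_end(key)  # mark as recently used
            self.hits += 1
            return self._entries[key]
        self.misses += 1
        text = self._generate_fn(prompt, max_new_tokens)
        self._entries[key] = text
        if len(self._entries) > self._max_entries:
            self._entries.popitem(last=False)  # evict least recently used
        return text
```

Tracking `hits` and `misses` makes it easy to check whether the cache is actually paying off for your traffic.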

Use Model Versioning

Over time, you may fine-tune models, switch to more efficient architectures, or introduce new training data. To keep your deployments flexible and stable, you should version your models. Keeping several model versions on hand lets you A/B test new models and roll back to a stable version if problems arise. It is also good practice to expose the model version through your API so that clients can explicitly select which version to call.
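One simple way to support this is a registry that maps version labels to generate functions and remembers a default. The `ModelRegistry` class and the version labels below are hypothetical. In a FastAPI service, the `version` argument could come from an optional request field or a path parameter.

```python
class ModelRegistry:
    """Keeps several model versions available and tracks the default one."""

    def __init__(self):
        self._models = {}
        self._default = None

    def register(self, version, generate_fn, make_default=False):
        """Add a version; the first one registered becomes the default."""
        self._models[version] = generate_fn
        if make_default or self._default is None:
            self._default = version

    def set_default(self, version):
        """Switch the default, e.g. to roll back to a stable version."""
        if version not in self._models:
            raise KeyError(f"unknown model version: {version!r}")
        self._default = version

    def generate(self, prompt, version=None):
        """Use the requested version, or the default when none is given."""
        chosen = version or self._default
        if chosen not in self._models:
            raise KeyError(f"unknown model version: {chosen!r}")
        text = self._models[chosen](prompt)
        # Returning the version lets clients see which model answered.
        return {"model_version": chosen, "generated_text": text}
```

Including `model_version` in every response also makes A/B comparisons and incident analysis easier, since each output can be traced to the model that produced it.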

Secure Your Endpoints

Security is essential when creating publicly accessible APIs. Always use HTTPS to encrypt data in transit and prevent unauthorized interception. You should also use an authentication scheme such as OAuth2 to safeguard your endpoints so that only authorized clients can access your service. By limiting the number of requests per client, rate limiting adds another line of protection against denial-of-service (DoS) attacks and abuse. Auditing API usage and recording access patterns can also help detect suspicious activity early.
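Per-client rate limiting can be sketched with a sliding window, as below. The `SlidingWindowRateLimiter` class is hypothetical and keeps its state in process memory, so it only limits traffic per worker. A multi-instance deployment would need shared state, for example in Redis.

```python
import time
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allows at most `max_requests` per client within `window_seconds`."""

    def __init__(self, max_requests, window_seconds, clock=time.monotonic):
        self._max_requests = max_requests
        self._window = window_seconds
        self._clock = clock  # injectable so the limiter can be tested
        self._hits = defaultdict(deque)

    def allow(self, client_id):
        """Return True and record the request if the client is under its limit."""
        now = self._clock()
        hits = self._hits[client_id]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self._window:
            hits.popleft()
        if len(hits) >= self._max_requests:
            return False
        hits.append(now)
        return True
```

In an endpoint or dependency, a `False` result would typically be turned into an HTTP 429 response, with the client identified by its API key or IP address.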

Balance Performance with Cost Efficiency

Running LLMs can become expensive, particularly with large models on GPU servers. To maintain cost efficiency, consider quantization techniques that reduce computational overhead without drastically affecting output quality. You can also adopt smaller models for lightweight tasks and reserve larger models for premium or complex use cases. Cloud autoscaling helps you pay only for the resources you need by adding capacity during periods of high demand and releasing it afterward.

Enabling Logging and Error Handling

Debugging and maintaining production systems require thorough logging and error handling. With FastAPI, you can record information about requests, responses, and failures, and use middleware for structured logging. Always return meaningful error messages to clients while masking sensitive internal details. Proper error handling prevents silent failures and makes troubleshooting easier, which helps your service remain reliable over time.
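The idea of logging full details internally while returning a masked message to the client can be sketched as follows. The `safe_generate` wrapper, the logger name, and the response fields are hypothetical. In a FastAPI app, the same logic would usually live in an exception handler or middleware.

```python
import logging
import uuid

logger = logging.getLogger("llm_service")


def safe_generate(generate_fn, prompt):
    """Call the model, log the outcome, and never leak internals to the client."""
    request_id = uuid.uuid4().hex  # lets support staff match client reports to logs
    logger.info("request_id=%s prompt_chars=%d", request_id, len(prompt))
    try:
        text = generate_fn(prompt)
    except Exception:
        # The full traceback goes to the server log only.
        logger.exception("request_id=%s generation failed", request_id)
        return {
            "ok": False,
            "request_id": request_id,
            "error": "Text generation failed. Please try again later.",
        }
    logger.info("request_id=%s output_chars=%d", request_id, len(text))
    return {"ok": True, "request_id": request_id, "generated_text": text}
```

Returning the `request_id` in both success and failure cases gives clients something concrete to quote when reporting a problem.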

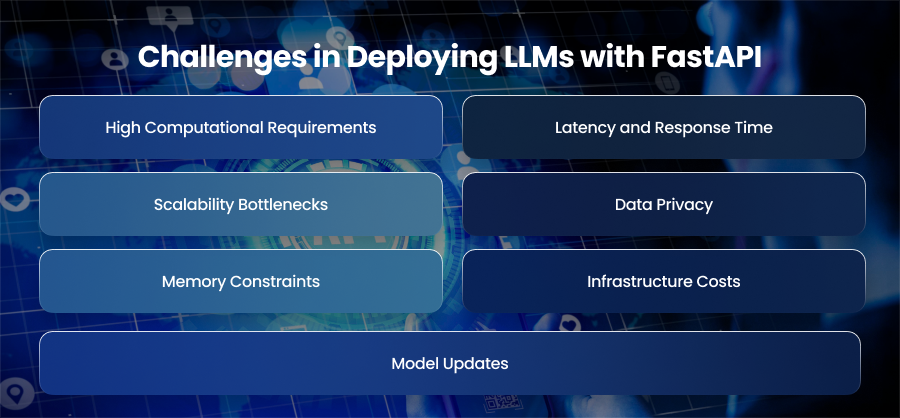

Challenges in Deploying LLMs with FastAPI

High Computational Requirements

LLMs demand significant computing power, especially during inference. Running them on CPUs can result in long response times, while GPUs are expensive and not always readily available. This creates a barrier for smaller teams or organizations with limited infrastructure budgets. Careful management of resource allocation is also required; otherwise, many simultaneous requests can overload the hardware and cause performance issues.
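One way to keep simultaneous requests from overloading the hardware is to cap how many inference calls run at once. The following sketch uses an `asyncio.Semaphore` for that purpose. The `InferenceGate` class is hypothetical, and a suitable limit depends entirely on your model and hardware.

```python
import asyncio


class InferenceGate:
    """Caps the number of model calls that may run at the same time."""

    def __init__(self, max_concurrent):
        # Create the gate inside the running event loop, e.g. at app startup.
        self._semaphore = asyncio.Semaphore(max_concurrent)
        self.in_flight = 0
        self.peak = 0  # highest concurrency observed, useful for monitoring

    async def run(self, blocking_fn, *args):
        """Wait for a free slot, then run the blocking call in a worker thread."""
        async with self._semaphore:
            self.in_flight += 1
            self.peak = max(self.peak, self.in_flight)
            try:
                return await asyncio.to_thread(blocking_fn, *args)
            finally:
                self.in_flight -= 1
```

Requests beyond the limit simply wait for a slot, which trades some latency for stability under bursts.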

Latency and Response Time

Users expect fast, real-time interactions from applications powered by LLMs, but achieving this is often difficult. Large models can take seconds or even longer to process queries, depending on hardware and optimization. In production, slow responses can frustrate users and reduce adoption rates. Developers must balance model size against infrastructure costs to achieve acceptable response times.

Scalability Bottlenecks

Scaling a FastAPI service for high demand is not always straightforward. Because LLMs are resource intensive, they do not scale as linearly with additional hardware as smaller machine learning models do. Horizontal scaling requires careful load balancing and frequently runs into problems with session state management. Vertical scaling has limits too, since a single machine can hold only so many GPUs and so much memory. Scalability is therefore one of the primary obstacles to large-scale LLM deployment.

Data Privacy

Exposing an LLM through FastAPI means handling user queries that could include sensitive information. APIs that are not sufficiently protected may be susceptible to malicious inputs or unauthorized access. Processing user data securely is also essential, since many organizations must comply with data protection regulations. Strict data handling policies and encryption add complexity, but they are required to avoid legal and reputational problems.

Memory Constraints

Many LLMs contain billions of parameters, making them extremely large in terms of storage and memory usage. Deploying them in production often requires specialized hardware with sufficient GPU VRAM or high RAM availability. Even so, it might be difficult to fit models into memory while supporting several users at once. Methods like distillation or model sharding might be useful, but they introduce further levels of complexity.
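A back-of-the-envelope calculation shows why precision matters here. The sketch below estimates the memory needed for the weights alone as parameters times bytes per parameter. The 7-billion-parameter figure is only an illustrative size, and real deployments need extra memory for activations and the key-value cache on top of this.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params, precision):
    """Approximate weight memory in GB (1 GB = 1e9 bytes), weights only."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_in_memory(num_params, precision, available_gb, headroom=0.8):
    """Check the weights against a fraction of available memory (assumed 80%)."""
    return weight_memory_gb(num_params, precision) <= available_gb * headroom
```

For example, `weight_memory_gb(7_000_000_000, "fp16")` gives 14.0, while the same model at 4-bit precision needs about 3.5 GB for its weights, which is why quantization is often what makes a model fit on a single GPU.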

Infrastructure Costs

Running LLMs in production environments is expensive. Costs accumulate from GPU instances, memory, and additional services like monitoring and scaling solutions. Without careful cost management, teams may find their infrastructure bills skyrocketing. Balancing the need for performance with financial sustainability is a constant challenge for organizations deploying LLMs through FastAPI.

Model Updates

Keeping models updated is critical, but swapping models in production is not trivial. Newer versions may require retraining or updated dependencies. Poorly managed updates can result in inconsistent user experiences or outages. Reliability and long-term maintainability call for a robust versioning mechanism, which in turn increases operational complexity.

Final Words

Although deploying LLMs using FastAPI offers speed and flexibility, it also brings challenges, such as performance and security requirements. By following best practices and handling deployment challenges carefully, developers can build reliable and effective APIs that offer real value and sustain long-term operational success.