According to McKinsey & Company, 71% more people have been using generative AI over the past two years. This is largely because LLMs like GPT-4 have revolutionized the development and scaling of AI-powered businesses. From AI assistants that answer customer questions to advanced analytics tools, LLMs are helping enterprises and startups alike launch modern products faster than ever before.

However, shipping new features and integrating with an API is only one aspect of developing an AI product. It also involves carefully transitioning from an MVP to a long-term solution that can handle growing demand and deliver steady value to users.

In this guide, we will discuss the entire journey of AI product development, from defining your use case and creating your MVP to iterating based on feedback.

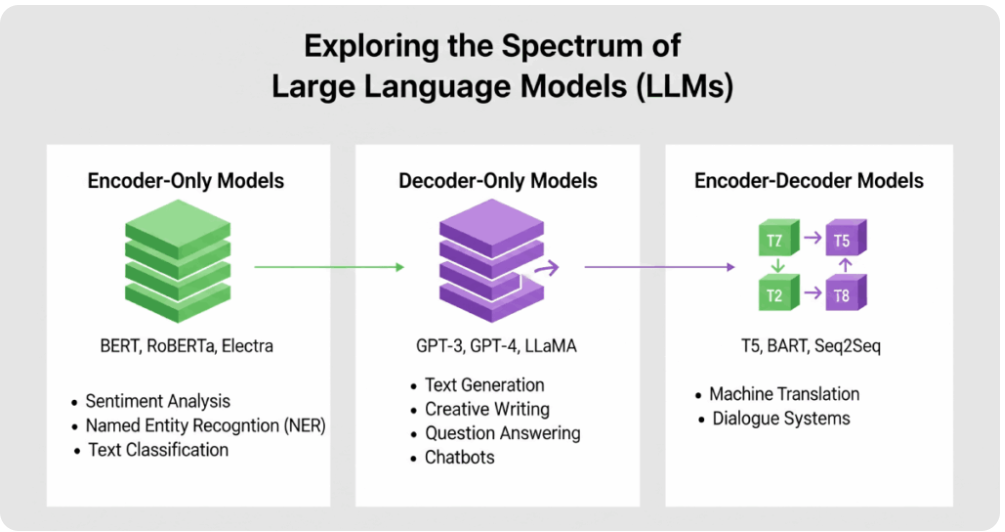

What are LLMs?

LLMs (large language models) are a type of artificial intelligence designed to process and generate human-like language. Because they have been trained on massive datasets, they can also identify patterns and meaning in text.

Transformer architectures, which are fundamental to LLMs, excel at processing sequential data such as text. This design enables models to:

- Recognize the context of lengthy texts.

- Predict the next word in a sequence from the words that come before it.

- Adjust to various tones, styles, and subject areas.

You can think of an LLM as a highly advanced autocomplete system. When given a prompt, it predicts the most likely next words and can generate coherent paragraphs and write code.
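To make the autocomplete analogy concrete, here is a toy sketch that predicts the next word purely from word-pair counts. It is not how a transformer works internally; it only illustrates the "predict the most likely next word, then repeat" loop. The function names and the tiny corpus are made up for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count which word follows which in the training text."""
    words = text.lower().split()
    following: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if unseen."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


def autocomplete(model: Dict[str, Counter], prompt: str, max_words: int = 5) -> str:
    """Extend the prompt one predicted word at a time."""
    words = prompt.split()
    if not words:
        return prompt
    for _ in range(max_words):
        nxt = predict_next(model, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


# Hypothetical training text; a real LLM learns from vastly more data.
corpus = "the model predicts the next word and the next word follows the prompt"
model = train_bigrams(corpus)
print(autocomplete(model, "the", max_words=2))
```

An LLM replaces the word-pair table with a neural network that looks at the whole preceding context, which is why its continuations stay coherent over paragraphs rather than just a couple of words.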

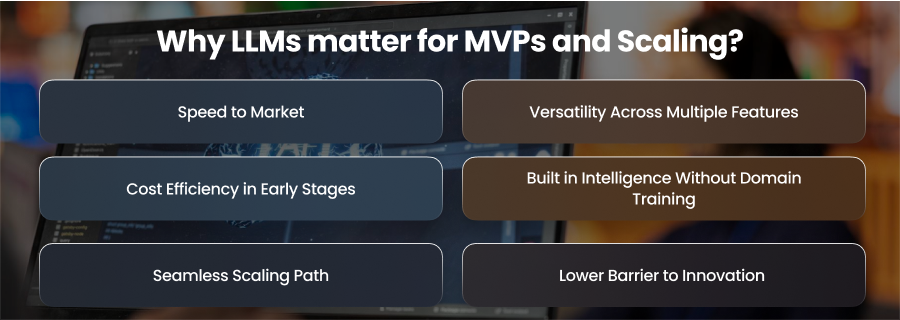

Why Do LLMs Matter for MVPs and Scaling?

Speed to Market

Your most precious resource during the MVP phase is time. The sooner you can validate your concept with real users, the sooner you can improve or change it.

- LLMs enable plug-and-play AI by offering pre-trained intelligence accessible via APIs.

- Instead of spending months on data collection and model training, you can integrate Claude or similar models and have a working prototype almost immediately.

For example, a startup building a legal document summarizer can launch in a few weeks by using a general-purpose LLM and focusing only on UI/UX, rather than developing NLP capabilities from scratch.
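As a rough sketch of how thin such a summarizer prototype can be, the code below wraps a prompt template around a pluggable `complete` function. Everything here is an assumption for illustration: `complete` stands in for whichever provider SDK call you use, and `fake_complete` is a placeholder so the example runs without network access.

```python
from typing import Callable

# Hypothetical prompt wording; tune it for your own product.
PROMPT_TEMPLATE = (
    "You are a legal assistant. Summarize the document below in at most "
    "{max_sentences} sentences for a non-lawyer.\n\n"
    "Document:\n{document}"
)


def build_summary_prompt(document: str, max_sentences: int = 3) -> str:
    """Fill the template with the trimmed document text."""
    return PROMPT_TEMPLATE.format(
        max_sentences=max_sentences, document=document.strip()
    )


def summarize(
    document: str, complete: Callable[[str], str], max_sentences: int = 3
) -> str:
    """Send the prompt to the supplied LLM callable and tidy the reply."""
    if not document.strip():
        raise ValueError("document is empty")
    return complete(build_summary_prompt(document, max_sentences)).strip()


def fake_complete(prompt: str) -> str:
    """Stand-in for a real provider call; returns a placeholder string."""
    return "  [summary placeholder for a %d-character prompt]  " % len(prompt)


print(summarize("This lease runs for 12 months.", fake_complete))
```

Keeping the provider behind a plain callable also makes it easier to swap models later without touching the product code.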

Versatility Across Multiple Features

One of the most remarkable aspects of LLMs is their flexibility. A single model can power multiple product features without requiring separate architectures, for example:

- Customer support automation

- Marketing content creation

- Internal knowledge base search

- Code assistance

This versatility means that in the scaling phase, you can add new capabilities without starting over technically.

Cost Efficiency in Early Stages

For an MVP, minimizing infrastructure cost is crucial. LLMs offer a pay-as-you-go model when accessed via APIs, allowing you to pay only for actual usage.

- No upfront investment in GPUs or complex ML pipelines.

- Lower maintenance burden compared to hosting your own models early on.

Furthermore, as your product scales, you can transition to self-hosted or fine-tuned models for cost optimization and better control.
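A back-of-the-envelope estimate helps you see what pay-as-you-go pricing means for your budget. The sketch below assumes token-based billing with separate input and output rates; the prices and traffic figures are hypothetical placeholders, not any provider's actual rates.

```python
from dataclasses import dataclass


@dataclass
class Pricing:
    """Price per 1,000 tokens, in dollars (hypothetical values)."""
    input_per_1k: float
    output_per_1k: float


def monthly_cost(
    requests_per_day: int,
    avg_input_tokens: int,
    avg_output_tokens: int,
    pricing: Pricing,
    days: int = 30,
) -> float:
    """Estimate monthly API spend from average request size and volume."""
    per_request = (
        (avg_input_tokens / 1000) * pricing.input_per_1k
        + (avg_output_tokens / 1000) * pricing.output_per_1k
    )
    return requests_per_day * days * per_request


# Assumed numbers for illustration only.
EXAMPLE_PRICING = Pricing(input_per_1k=0.01, output_per_1k=0.03)
estimate = monthly_cost(1000, 500, 200, EXAMPLE_PRICING)
print("Estimated monthly cost: $%.2f" % estimate)
```

Rerunning the estimate with projected traffic is one simple way to judge when self-hosting or a cheaper model starts to make sense.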

Built-in Intelligence Without Domain Training

Traditional AI apps required massive labeled datasets to perform even moderately well in specialized areas. In contrast, LLMs come with broad general knowledge from pre-training, allowing you to handle diverse user queries from day one.

- In the MVP stage, this means you can serve multiple industries without retraining.

- At scale, you can fine-tune for specific verticals while still utilizing the model’s general capabilities.

Seamless Scaling Path

Scaling is more than just handling more users; it’s about improving performance and reliability without losing accuracy.

- Start with API-based LLM access for speed.

- Migrate to fine-tuned or hybrid models as traffic grows.

- Integrate with vector databases and retrieval-augmented generation (RAG) for better domain accuracy.
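The retrieval step in the last bullet can be sketched in a few lines. This simplified example scores documents with word-count cosine similarity; a production system would typically use embeddings and a vector database instead. The documents, function names, and prompt wording are illustrative assumptions.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Return up to top_k documents that share vocabulary with the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_grounded_prompt(query: str, documents: List[str], top_k: int = 2) -> str:
    """Place the retrieved context ahead of the question."""
    context = retrieve(query, documents, top_k)
    if context:
        context_block = "\n".join("- " + d for d in context)
    else:
        context_block = "(no relevant documents found)"
    return (
        "Answer using only the context below. If the answer is not there, say so.\n\n"
        "Context:\n" + context_block + "\n\nQuestion: " + query
    )


# Hypothetical knowledge base entries.
DOCS = [
    "Refunds are issued within 14 days of purchase.",
    "Our office is open Monday to Friday.",
    "Premium plans include priority support.",
]
print(build_grounded_prompt("How many days do refunds take?", DOCS))
```

The resulting prompt is what you would pass to the LLM, so its answer is grounded in your own data rather than only in its pre-training.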

Lower Barrier to Innovation

LLMs level the playing field for smaller teams. What was once possible only for tech giants is now achievable for startups and SMEs with limited resources.

- No need for specialized ML PhDs to get started.

- Access to modern capabilities instantly.

- Ability to experiment with features without long development cycles.

What is the AI Product Development Roadmap?

Define Your Use Case

Before investing in development, you must identify a specific, validated problem that an LLM can solve effectively. Jumping into AI without this clarity risks building something impressive but irrelevant. Begin by understanding your target market and analyzing industry pain points. The goal is to uncover issues that are frequent and time-consuming for your audience.

Once you identify the pain point, evaluate whether an LLM is the right technology to address it. LLMs excel in areas like text generation and conversational interaction. However, they can be less suitable for real-time numerical predictions or for tasks where accuracy must be exact.

Additionally, clearly identifying the use case entails mapping success measures. For instance, do you want to cut the response time for customer support by half? Or do you want to automate 70% of reporting? Having measurable goals allows you to later validate whether the AI is delivering real value.
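One lightweight way to keep such goals measurable is to write them down as data and check observed numbers against them. The sketch below is only an illustration; the class name, the baseline values, and the observed figures are assumptions.

```python
from dataclasses import dataclass


@dataclass
class SuccessMetric:
    name: str
    baseline: float
    target: float
    lower_is_better: bool = False

    def is_met(self, observed: float) -> bool:
        """True once the observed value reaches the target."""
        if self.lower_is_better:
            return observed <= self.target
        return observed >= self.target

    def progress(self, observed: float) -> float:
        """Fraction of the way from baseline to target (can exceed 1.0)."""
        span = self.target - self.baseline
        if span == 0:
            return 1.0
        return (observed - self.baseline) / span


# Hypothetical baselines: 10-minute support responses, no automated reports.
response_time = SuccessMetric(
    "support response time (min)", baseline=10.0, target=5.0, lower_is_better=True
)
automation = SuccessMetric("share of reports automated", baseline=0.0, target=0.70)

print(response_time.is_met(7.5), round(response_time.progress(7.5), 2))
print(automation.is_met(0.72), round(automation.progress(0.35), 2))
```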

Finally, analyze your competitors. Can you differentiate your product from comparable systems on speed or integration capabilities? By the end of this phase, you should have a precise problem definition and quantifiable success criteria.

Build the MVP

The next stage is to build the MVP. Begin by prioritizing one high-impact feature. Keep the design simple and user-friendly so that early adopters can use your product without extensive onboarding.

Additionally, you should incorporate guardrails even in this early phase. LLMs can sometimes produce irrelevant or inappropriate responses, so add content filtering and prompt structures that guide the model toward useful outputs. Also monitor API latency and response consistency, as slow or unpredictable results can turn early users away.
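A minimal sketch of those two guardrails, a structured prompt and an output filter, might look like the following. The blocked patterns, the fallback message, and the prompt wording are placeholder assumptions; real products usually combine rules like these with a dedicated moderation service.

```python
import re

# Hypothetical patterns; replace with rules that fit your product.
BLOCKED_PATTERNS = [
    re.compile(p, re.IGNORECASE) for p in (r"\bpassword\b", r"\bssn\b")
]
FALLBACK = "Sorry, I can't help with that request."


def build_prompt(user_input: str, topic: str, max_input_chars: int = 500) -> str:
    """Wrap the user's text in instructions that keep the model on topic."""
    cleaned = " ".join(user_input.split())[:max_input_chars]
    return (
        f"You are a support assistant. Only answer questions about {topic}. "
        "If the question is off-topic, reply that you cannot help.\n\n"
        f"User question: {cleaned}"
    )


def filter_output(text: str, max_chars: int = 1000) -> str:
    """Replace empty or blocked responses with a safe fallback."""
    if not text.strip() or any(p.search(text) for p in BLOCKED_PATTERNS):
        return FALLBACK
    return text.strip()[:max_chars]


print(build_prompt("  how do I\n reset my plan? ", "billing"))
print(filter_output("Your password is hunter2"))
```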

Lastly, implement tracking. Track usage data and feature adoption rates. Tools like in-app surveys and error reporting can also show you what’s working and what’s not.
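Feature adoption rate is simply the share of active users who have used a given feature. A small in-memory sketch, with hypothetical class and feature names, could look like this; in practice you would likely send these events to an analytics tool instead.

```python
from collections import defaultdict
from typing import Dict, Set


class UsageTracker:
    """Records which users touched which features."""

    def __init__(self) -> None:
        self._users_by_feature: Dict[str, Set[str]] = defaultdict(set)
        self._all_users: Set[str] = set()

    def record(self, user_id: str, feature: str) -> None:
        self._all_users.add(user_id)
        self._users_by_feature[feature].add(user_id)

    def adoption_rate(self, feature: str) -> float:
        """Share of all seen users who used the feature at least once."""
        if not self._all_users:
            return 0.0
        users = self._users_by_feature.get(feature, set())
        return len(users) / len(self._all_users)


tracker = UsageTracker()
for user, feature in [
    ("u1", "summarize"), ("u2", "summarize"), ("u2", "summarize"),
    ("u3", "chat"), ("u4", "chat"),
]:
    tracker.record(user, feature)
print(tracker.adoption_rate("summarize"))
```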

Iteration and Improvement

Once your MVP is in users’ hands, the focus shifts from launching to learning. Start by analyzing user behavior, then use that insight to refine your prompt engineering so the model produces more accurate and context-aware responses. If you find recurring shortcomings, consider fine-tuning the LLM with domain-specific data. This could mean training the model on your company’s proprietary documents or verified knowledge bases.

Iteration is also about performance and cost optimization. Caching frequent queries, batching requests, and using cheaper models where they suffice can dramatically lower operational costs. This is also the stage where you should carefully add features that enhance rather than detract from your primary use case.
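Caching is often the easiest of these savings to prototype. The sketch below wraps any LLM callable in an exact-match cache keyed on a normalized prompt. The class name and the normalization rule (lowercasing and collapsing whitespace) are assumptions; this approach only suits cases where returning the same answer for the same question is acceptable.

```python
import hashlib
from typing import Callable, Dict


class CachedLLM:
    """Wraps an LLM callable and reuses answers for repeated prompts."""

    def __init__(self, complete: Callable[[str], str]) -> None:
        self._complete = complete
        self._cache: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        # Normalize case and whitespace so trivial variations share an entry.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def __call__(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._cache:
            self.hits += 1
            return self._cache[key]
        self.misses += 1
        result = self._complete(prompt)
        self._cache[key] = result
        return result


def slow_model(prompt: str) -> str:
    """Stand-in for a paid API call."""
    return "answer to: " + prompt


llm = CachedLLM(slow_model)
llm("What is RAG?")
llm("  what is   rag? ")
print(llm.hits, llm.misses)
```

Tracking hits and misses shows how much of your traffic is repetitive, which tells you whether caching is worth operating at all.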

Safety precautions should also be continuously strengthened. The likelihood of abuse or unexpected results increases with the number of users. To preserve compliance and user trust, add automated output testing and more sophisticated content moderation.

Scale to a Full Product

Scaling is the stage where you move from a promising prototype to a reliable, enterprise-ready solution. It’s not just about handling more users; it’s about ensuring reliability and security. One of the first scaling steps is infrastructure optimization. While APIs work well for MVPs, heavy usage may require transitioning to hybrid or fully self-hosted models to reduce latency and control costs.

Next, invest in model customization. Fine-tune the LLM on proprietary datasets for improved accuracy in your niche, and integrate RAG pipelines to ground responses in verified data sources.

Reliable monitoring systems are also non-negotiable at scale. Set up real-time dashboards that track user satisfaction and uptime, and automate alerts so your team can resolve problems before they affect customers.
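The alerting logic behind such a dashboard can be quite simple. This sketch keeps a sliding window of recent requests and flags error-rate or latency breaches. The class name and thresholds are hypothetical, and a real deployment would normally rely on an observability platform rather than hand-rolled code.

```python
from collections import deque
from typing import Deque, List, Tuple


class HealthMonitor:
    """Keeps the most recent requests and flags threshold breaches."""

    def __init__(
        self,
        window: int = 100,
        max_error_rate: float = 0.05,
        max_p95_latency_s: float = 2.0,
    ) -> None:
        self._samples: Deque[Tuple[float, bool]] = deque(maxlen=window)
        self.max_error_rate = max_error_rate
        self.max_p95_latency_s = max_p95_latency_s

    def record(self, latency_s: float, ok: bool) -> None:
        self._samples.append((latency_s, ok))

    def error_rate(self) -> float:
        if not self._samples:
            return 0.0
        failures = sum(1 for _, ok in self._samples if not ok)
        return failures / len(self._samples)

    def p95_latency(self) -> float:
        """95th percentile latency using the nearest-rank method."""
        if not self._samples:
            return 0.0
        latencies = sorted(latency for latency, _ in self._samples)
        rank = (95 * len(latencies) + 99) // 100  # integer ceil(0.95 * n)
        return latencies[rank - 1]

    def alerts(self) -> List[str]:
        found = []
        if self.error_rate() > self.max_error_rate:
            found.append("error rate %.1f%% above threshold" % (100 * self.error_rate()))
        if self.p95_latency() > self.max_p95_latency_s:
            found.append("p95 latency %.2fs above threshold" % self.p95_latency())
        return found


monitor = HealthMonitor()
for i in range(20):
    # Simulated traffic: a few slow requests and occasional failures.
    monitor.record(3.0 if i < 5 else 0.2, ok=(i % 10 != 0))
for message in monitor.alerts():
    print("ALERT:", message)
```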

Scaling also means meeting enterprise-grade requirements. This includes strong data encryption and the ability to pass security audits. As your product matures, partnerships and multi-language support can also become part of your scaling strategy.

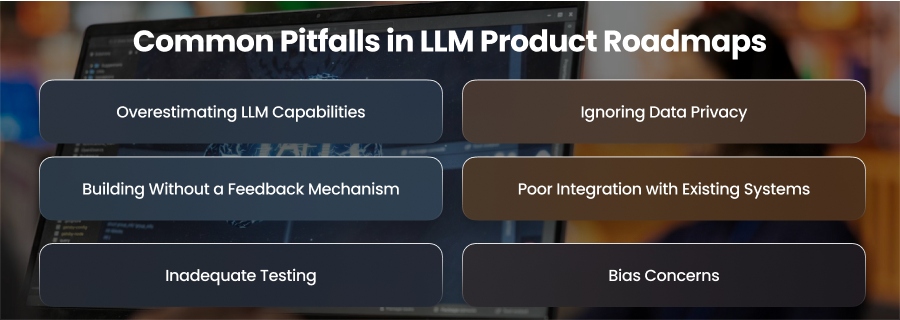

Common Pitfalls in LLM Product Roadmaps

Overestimating LLM Capabilities

Many teams start with the assumption that LLMs can deliver perfect, human-like intelligence out of the box. In practice, these models have limitations, including bias and gaps in domain-specific knowledge. Unrealistic expectations can lead to unattainable goals and disappointment. It is crucial to define the model’s limits clearly and, where required, add retrieval or fine-tuning techniques.

Skipping Domain Adaptation

Relying exclusively on general-purpose LLMs without adapting them to your domain frequently produces inaccurate or inappropriate outputs. Skipping domain fine-tuning can also limit competitive differentiation. Incorporating domain datasets and reinforcement learning feedback loops helps the LLM meet specialized needs.

Building Without a Feedback Mechanism

An LLM product is never truly done; it evolves with user interactions. Launching without a feedback loop that captures real-world usage data and user satisfaction can lead to stagnation. Regularly collecting and analyzing feedback helps the model remain relevant and high-performing.
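A feedback loop can start as simply as logging thumbs-up or thumbs-down votes against the prompt version that produced each answer. The sketch below uses hypothetical names and version labels to show how you might compare versions.

```python
from collections import defaultdict
from typing import Dict, List, Optional


class FeedbackLog:
    """Stores helpful / not-helpful votes per prompt version."""

    def __init__(self) -> None:
        self._votes: Dict[str, List[bool]] = defaultdict(list)

    def record(self, prompt_version: str, helpful: bool) -> None:
        self._votes[prompt_version].append(helpful)

    def satisfaction(self, prompt_version: str) -> Optional[float]:
        """Share of helpful votes, or None if there is no feedback yet."""
        votes = self._votes.get(prompt_version)
        if not votes:
            return None
        return sum(votes) / len(votes)

    def best_version(self, min_votes: int = 1) -> Optional[str]:
        """Version with the highest satisfaction among those with enough votes."""
        eligible = {
            version: sum(votes) / len(votes)
            for version, votes in self._votes.items()
            if len(votes) >= min_votes
        }
        if not eligible:
            return None
        return max(eligible, key=eligible.get)


log = FeedbackLog()
for helpful in (True, False):
    log.record("v1", helpful)
for helpful in (True, True, True, False):
    log.record("v2", helpful)
print(log.satisfaction("v1"), log.satisfaction("v2"), log.best_version())
```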

Poor Integration with Existing Systems

LLMs rarely operate in isolation. Neglecting to design for interaction with existing software infrastructure and APIs is a common error that can cause bottlenecks or a poor user experience. Roadmaps should include integration architecture planning from day one.

Inadequate Testing

Skipping rigorous testing of the LLM’s outputs across different scenarios can lead to embarrassing failures in production. Teams should invest in automated evaluation frameworks and performance benchmarks to ensure consistent quality.
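An automated evaluation can begin as a list of prompts with simple pass or fail checks that you rerun whenever the prompt or model changes. The sketch below is one assumed shape for such a harness; the case format and the `stub_model` function are illustrative, and substring checks are only a starting point compared with fuller evaluation frameworks.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class EvalCase:
    prompt: str
    must_include: List[str] = field(default_factory=list)
    must_not_include: List[str] = field(default_factory=list)


def passes(case: EvalCase, output: str) -> bool:
    """Case-insensitive check of required and forbidden phrases."""
    text = output.lower()
    has_required = all(s.lower() in text for s in case.must_include)
    has_forbidden = any(s.lower() in text for s in case.must_not_include)
    return has_required and not has_forbidden


def run_eval(
    complete: Callable[[str], str], cases: List[EvalCase]
) -> Tuple[float, List[str]]:
    """Return the pass rate and the prompts of failing cases."""
    failures = [c.prompt for c in cases if not passes(c, complete(c.prompt))]
    pass_rate = 1.0 - len(failures) / len(cases) if cases else 0.0
    return pass_rate, failures


def stub_model(prompt: str) -> str:
    """Stand-in for the deployed model."""
    return "Refunds take 14 days."


CASES = [
    EvalCase("How long do refunds take?", must_include=["14 days"]),
    EvalCase("Is there a 30-day refund?", must_include=["30 days"]),
    EvalCase("Refund policy?", must_not_include=["guarantee"]),
]
rate, failed = run_eval(stub_model, CASES)
print(round(rate, 2), failed)
```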

Bias Concerns

LLM products may inadvertently reinforce harmful stereotypes if bias and fairness are not addressed at an early stage. The roadmap should therefore include responsible AI practices.

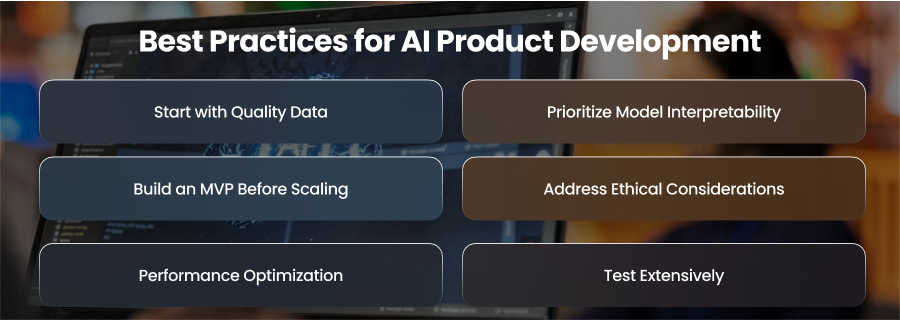

Best Practices for AI Product Development

Start with Quality Data

AI models thrive only on high-quality data. Poor-quality data can lead to inaccurate outputs and a loss of user trust. Invest effort in gathering and labeling data before training the model, and include a variety of data sources so the model can handle a wide range of circumstances.

Prioritize Model Interpretability

AI products should not be black boxes, especially in industries like healthcare or law. Providing clear explanations of how your model generates outputs builds trust with users and regulators. Using interpretable models also helps teams detect issues early and increases adoption.

Build an MVP Before Scaling

Resist the urge to launch a massive AI deployment right away. By creating an MVP first, you can test and improve your core concept before making significant financial commitments. This approach allows for quicker learning cycles and reduces waste.

Address Ethical Considerations

When developing AI, ethics must not be an afterthought. Identify potential bias and privacy problems from the start, and implement safeguards that comply with AI governance frameworks. This not only protects users but also shields your brand from reputational damage.

Performance Optimization

As your AI solution becomes more popular, it must handle growing workloads without sacrificing performance. This calls for horizontally scalable infrastructure and inference speed optimization. Cloud solutions and containerized deployments often help achieve these goals.

Test Extensively

Models can behave differently in controlled lab settings than in real-world environments. Before a full-scale launch, test your AI solution under realistic conditions and monitor its performance on edge cases.

Final Word

Building effective AI products with LLMs requires following best practices and creating clear roadmaps. Aligning technology with user needs, from MVP validation to widespread deployment, supports long-term growth and market relevance and turns innovative ideas into impactful solutions.