According to Statista, the NLP market is expected to reach $201.49 billion within the next six years, which shows how significant NLP is becoming in AI and business applications. Although Python is usually the language of choice for natural language processing, Java remains a reliable option.

This is especially true for enterprise-level applications, where performance and system integration are critical.

In this guide, we discuss the best Java frameworks for NLP and what to consider when selecting the right framework for your project.

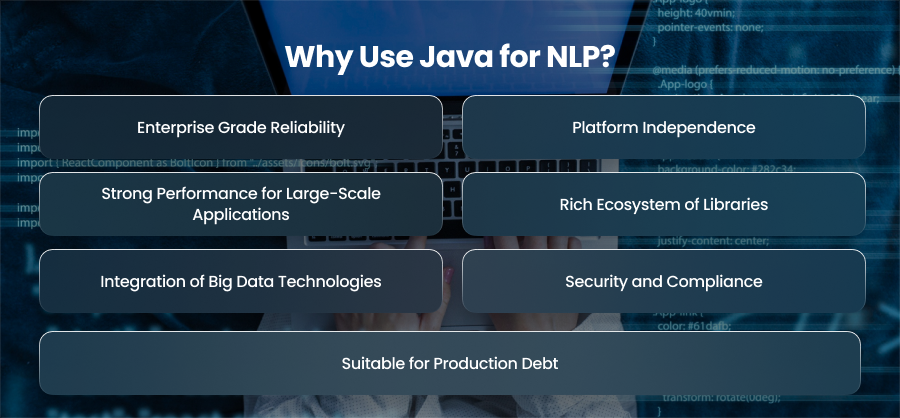

Why Use Java for NLP?

Enterprise-Grade Reliability

Java has been the backbone of enterprise applications for decades, and its stability is essential for critical systems such as healthcare platforms. When developing NLP applications like fraud detection systems or medical record analysis, enterprises need a language with a proven record of security and performance.

Platform Independence

One of Java’s strongest advantages is its "write once, run anywhere" capability, powered by the Java Virtual Machine (JVM). NLP applications frequently need to run on many different infrastructures and operating systems. Because the same compiled bytecode runs on any platform with a compatible JVM, developers don’t have to rewrite code for each platform, which makes deployment easier across a variety of scenarios.

Strong Performance for Large-Scale Applications

Large-scale text processing is resource-intensive. Java’s efficient memory management and built-in multithreading make it well suited to high-throughput NLP workloads. Search engines, for instance, benefit from Java’s ability to process millions of documents quickly.
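To make the multithreading point concrete, here is a minimal sketch that counts word frequencies across a batch of documents with the JDK’s parallel streams, which spread the work over the common fork/join thread pool. The `ParallelWordCount` class and its naive regex tokenizer are illustrative assumptions, not part of any NLP library; a real pipeline would plug in a proper tokenizer.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentMap;
import java.util.function.Function;
import java.util.stream.Collectors;

// Sketch: parallel word-frequency counting with plain JDK streams (no NLP library involved).
class ParallelWordCount {

    static ConcurrentMap<String, Long> count(List<String> documents) {
        return documents.parallelStream()
                // Naive tokenization (an assumption): lowercase, split on anything that is not a letter or digit.
                .flatMap(doc -> Arrays.stream(doc.toLowerCase().split("[^\\p{L}\\p{Nd}]+")))
                .filter(token -> !token.isEmpty())
                // Worker threads merge their counts into one shared concurrent map.
                .collect(Collectors.groupingByConcurrent(Function.identity(), Collectors.counting()));
    }

    public static void main(String[] args) {
        List<String> docs = List.of(
                "Java handles large text workloads.",
                "Large workloads benefit from multi-threading.",
                "Text, text, and more text.");
        Map<String, Long> counts = count(docs);
        System.out.println(counts.get("text"));      // 4
        System.out.println(counts.get("workloads")); // 2
    }
}
```

`groupingByConcurrent` is used instead of `groupingBy` because it lets threads accumulate into a single concurrent map rather than building per-thread maps that must be merged afterwards; the trade-off is that iteration order of the result is unspecified.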

Rich Ecosystem of Libraries

Java offers numerous NLP-specific libraries, such as Stanford NLP and Apache OpenNLP, as well as machine learning and big data frameworks, including Deeplearning4j. With this ecosystem, developers can build end-to-end NLP pipelines.

Integration with Big Data Technologies

Modern NLP commonly involves analyzing large datasets, such as customer reviews or medical records. Java developers can easily connect to big data platforms such as Apache Hadoop, which is itself written in Java, making it simpler to scale NLP solutions for batch processing. Businesses handling gigabytes of unstructured text will find this especially helpful.

Security and Compliance

NLP is growing in popularity in critical industries such as banking, where data security is essential. Java’s built-in security features, such as automatic memory management, bytecode verification, and standard cryptography APIs, make it a safer choice than certain other programming languages.

Suitable for Production

While Python dominates research, Java strikes a balance between academic credibility and production readiness. Several research-grade tools, such as Stanford CoreNLP, were written in Java, and their accuracy makes them popular in academia. At the same time, enterprises rely on Java to build production-grade NLP applications because of its scalability and system integration capabilities.

10 Best Java Frameworks for NLP

1. OpenNLP

Thanks to its adaptability, Apache OpenNLP is one of the most popular NLP frameworks for Java. It supports core NLP tasks such as tokenization, sentence detection, and named entity recognition. One of its advantages is that developers can train custom models, which makes it flexible enough for industry-specific applications.

However, compared to more modern libraries, it offers fewer pre-trained models and is limited in advanced tasks such as deep semantic analysis. Still, it is an excellent choice for chatbots and document classification, where standard NLP functions are all that is needed.
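Sentence detection and tokenization are easiest to picture in code. OpenNLP performs them with trained models (through its `SentenceDetectorME` and `TokenizerME` classes); the sketch below instead uses the JDK’s rule-based `java.text.BreakIterator`, so it runs without any model files. The `BasicSegmenter` class is hypothetical and only illustrates what these two steps produce, not how OpenNLP implements them.

```java
import java.text.BreakIterator;
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Rule-based segmentation with the JDK's BreakIterator (OpenNLP uses trained models instead).
class BasicSegmenter {

    // Collects the text between consecutive boundaries reported by the iterator.
    private static List<String> segments(BreakIterator it, String text) {
        it.setText(text);
        List<String> out = new ArrayList<>();
        int start = it.first();
        for (int end = it.next(); end != BreakIterator.DONE; start = end, end = it.next()) {
            String piece = text.substring(start, end).trim();
            if (!piece.isEmpty()) out.add(piece); // drop whitespace-only segments
        }
        return out;
    }

    static List<String> sentences(String text) {
        return segments(BreakIterator.getSentenceInstance(Locale.ENGLISH), text);
    }

    // Words and punctuation marks come back as separate tokens.
    static List<String> tokens(String sentence) {
        return segments(BreakIterator.getWordInstance(Locale.ENGLISH), sentence);
    }

    public static void main(String[] args) {
        String text = "OpenNLP detects sentences. It also tokenizes text, word by word!";
        for (String sentence : sentences(text)) {
            System.out.println(tokens(sentence));
        }
    }
}
```

Rule-based boundaries like these can stumble on abbreviations and domain-specific punctuation, which is exactly where OpenNLP’s ability to train custom models pays off.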

2. Stanford NLP

Developed at Stanford University, Stanford NLP is a trusted framework known for its accuracy on language tasks and its broad feature set, which includes tokenization, dependency parsing, and sentiment analysis. It also supports several languages, which makes it suitable for applications used worldwide.

Although Stanford NLP is highly accurate and widely used in research, its larger footprint and slower processing on very large datasets make it less suited to lightweight applications.

3. CogComp NLP

CogComp NLP offers a modular collection of NLP tools built around machine learning. It supports named entity recognition, semantic role labeling, and coreference resolution. One of its main advantages is that it integrates with machine learning and deep learning methods, which makes it adaptable for modern AI-driven applications.

It is more popular in academic circles than in general enterprise use, and its learning curve is steeper than that of simpler frameworks. It is ideal for developers and researchers who want fine-grained control over language tasks.

4. GATE

The General Architecture for Text Engineering (GATE) is a comprehensive framework for NLP research and large-scale text engineering projects. In addition to its programmatic APIs, it offers a graphical user interface that lets developers visualize pipelines and annotate text, which sets it apart from lightweight libraries.

It also has a strong plugin ecosystem that extends its functionality and makes information extraction easier. Its biggest benefits are scalability and adaptability, which make it suitable for processing very large volumes of text. However, GATE can feel heavier than other frameworks, and beginners may find its setup challenging.

5. LingPipe

LingPipe is a well-established Java NLP toolkit known for its dependability. It provides features for identifying and classifying named entities, and it handles large datasets well, which makes it a good fit for industries where processing efficiency is essential. However, LingPipe does not receive the frequent updates that contemporary libraries do.
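The simplest form of named entity recognition is a dictionary (gazetteer) lookup. The plain-Java sketch below tags known phrases using greedy longest match; the `GazetteerTagger` class and the entity type labels are hypothetical, it is not LingPipe’s API, and statistical toolkits such as LingPipe go further by learning to recognize entities that appear in no list. It assumes Java 16 or later for records.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Dictionary-based entity tagging with greedy longest match (a sketch, not LingPipe's API).
class GazetteerTagger {

    record Entity(String text, String type) {}

    private final Map<String, String> dictionary = new HashMap<>();
    private int maxPhraseTokens = 0;

    // Registers a phrase (tokens separated by single spaces) with its entity type.
    void add(String phrase, String type) {
        dictionary.put(phrase, type);
        maxPhraseTokens = Math.max(maxPhraseTokens, phrase.split(" ").length);
    }

    List<Entity> tag(String text) {
        // Replace punctuation with spaces so "London." still matches "London".
        String[] tokens = text.replaceAll("[^\\p{L}\\p{Nd}]+", " ").trim().split(" ");
        List<Entity> found = new ArrayList<>();
        int i = 0;
        while (i < tokens.length) {
            int matched = 0;
            // Try the longest span first so "New York Times" wins over "New York".
            for (int len = Math.min(maxPhraseTokens, tokens.length - i); len > 0; len--) {
                String candidate = String.join(" ", Arrays.asList(tokens).subList(i, i + len));
                String type = dictionary.get(candidate);
                if (type != null) {
                    found.add(new Entity(candidate, type));
                    matched = len;
                    break;
                }
            }
            i += Math.max(matched, 1); // skip past the match, or advance one token
        }
        return found;
    }

    public static void main(String[] args) {
        GazetteerTagger tagger = new GazetteerTagger();
        tagger.add("New York", "LOCATION");
        tagger.add("New York Times", "ORGANIZATION");
        tagger.add("London", "LOCATION");
        System.out.println(tagger.tag("The New York Times opened an office in London."));
    }
}
```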

6. Deeplearning4j

Deeplearning4j is a Java-based deep learning framework that extends NLP capabilities with support for neural networks and word embeddings. It enables developers to build sophisticated models for text classification and sentiment analysis. It integrates easily with big data frameworks such as Apache Spark, which makes large-scale distributed NLP workloads possible, and its support for GPU acceleration makes it strong for computationally demanding applications. It is best suited for enterprises that want to build AI-driven NLP applications with deep learning as the backbone.
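Word embeddings represent words as vectors, and the usual way to compare two of them is cosine similarity. The sketch below shows that arithmetic in plain Java; the `EmbeddingSimilarity` class is illustrative, and the three-dimensional vectors are made-up placeholders, whereas a trained model (for example, one produced by Deeplearning4j’s Word2Vec implementation) would supply vectors with hundreds of dimensions.

```java
import java.util.Map;

// Cosine similarity between embedding vectors: dot(a, b) / (|a| * |b|), a value in [-1, 1].
class EmbeddingSimilarity {

    static double cosine(double[] a, double[] b) {
        if (a.length != b.length) throw new IllegalArgumentException("dimension mismatch");
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        if (normA == 0 || normB == 0) throw new IllegalArgumentException("zero vector");
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    public static void main(String[] args) {
        // Hypothetical toy vectors, not output from any trained model.
        Map<String, double[]> vectors = Map.of(
                "invoice", new double[] {0.9, 0.1, 0.3},
                "receipt", new double[] {0.8, 0.2, 0.35},
                "banana", new double[] {0.05, 0.9, 0.1});
        System.out.printf("invoice ~ receipt: %.3f%n", cosine(vectors.get("invoice"), vectors.get("receipt")));
        System.out.printf("invoice ~ banana:  %.3f%n", cosine(vectors.get("invoice"), vectors.get("banana")));
    }
}
```

Cosine similarity ignores vector length and compares only direction, which is why it is preferred over raw dot products when word frequency inflates some embeddings’ magnitudes.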

7. MALLET

MALLET is a Java-based package designed specifically for statistical natural language processing and topic modeling. Its efficient implementations of machine learning algorithms make it popular in both academia and industry for tasks like document classification and topic discovery. Another major feature is topic modeling with Latent Dirichlet Allocation (LDA), which makes MALLET a popular tool for analyzing large text corpora. Although it performs very well in machine-learning-driven NLP applications, MALLET is less suited to simple preprocessing than frameworks like OpenNLP.
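To give a feel for what LDA topic modeling does, here is a deliberately naive collapsed Gibbs sampler in plain Java. MALLET’s own LDA is also trained by Gibbs sampling but is heavily optimized; the `TinyLda` class, its ASCII-only tokenizer, and the hyperparameter values in the example are illustrative assumptions, not MALLET’s code or defaults. Each sweep reassigns every word to a topic with probability proportional to how common that topic already is in the document and how common the word already is in the topic.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Random;

// Naive LDA trained with collapsed Gibbs sampling (illustrative, not MALLET's implementation).
class TinyLda {
    final int numTopics;
    final double alpha, beta;        // Dirichlet priors (document-topic, topic-word)
    final List<String> vocab = new ArrayList<>();
    final int[][] docs;              // word ids of each document
    final int[][] z;                 // current topic of each token
    final int[][] docTopic;          // n(d, k): tokens in document d assigned to topic k
    final int[][] topicWord;         // n(k, w): times word w is assigned to topic k
    final int[] topicTotal;          // n(k): tokens assigned to topic k
    final Random rng;

    TinyLda(List<String> texts, int numTopics, double alpha, double beta, long seed) {
        this.numTopics = numTopics;
        this.alpha = alpha;
        this.beta = beta;
        this.rng = new Random(seed);
        Map<String, Integer> ids = new HashMap<>();
        docs = new int[texts.size()][];
        for (int d = 0; d < texts.size(); d++) {
            List<Integer> wordIds = new ArrayList<>();
            for (String w : texts.get(d).toLowerCase().split("[^a-z]+")) { // crude ASCII tokenizer
                if (w.isEmpty()) continue;
                wordIds.add(ids.computeIfAbsent(w, key -> { vocab.add(key); return vocab.size() - 1; }));
            }
            docs[d] = wordIds.stream().mapToInt(Integer::intValue).toArray();
        }
        z = new int[docs.length][];
        docTopic = new int[docs.length][numTopics];
        topicWord = new int[numTopics][vocab.size()];
        topicTotal = new int[numTopics];
        for (int d = 0; d < docs.length; d++) {
            z[d] = new int[docs[d].length];
            for (int i = 0; i < docs[d].length; i++) {
                int k = rng.nextInt(numTopics); // random initial assignment
                z[d][i] = k;
                docTopic[d][k]++;
                topicWord[k][docs[d][i]]++;
                topicTotal[k]++;
            }
        }
    }

    // One sweep: resample each token's topic given all other assignments.
    void sweep() {
        double[] p = new double[numTopics];
        int vocabSize = vocab.size();
        for (int d = 0; d < docs.length; d++) {
            for (int i = 0; i < docs[d].length; i++) {
                int w = docs[d][i], old = z[d][i];
                docTopic[d][old]--; topicWord[old][w]--; topicTotal[old]--; // remove current assignment
                // p(k) is proportional to (n(d,k) + alpha) * (n(k,w) + beta) / (n(k) + V * beta)
                double sum = 0;
                for (int k = 0; k < numTopics; k++) {
                    p[k] = (docTopic[d][k] + alpha) * (topicWord[k][w] + beta) / (topicTotal[k] + vocabSize * beta);
                    sum += p[k];
                }
                double u = rng.nextDouble() * sum; // sample a topic in proportion to p
                int chosen = numTopics - 1;
                for (int k = 0; k < numTopics; k++) {
                    u -= p[k];
                    if (u < 0) { chosen = k; break; }
                }
                z[d][i] = chosen;
                docTopic[d][chosen]++; topicWord[chosen][w]++; topicTotal[chosen]++;
            }
        }
    }

    // The n words most often assigned to topic k.
    List<String> topWords(int k, int n) {
        List<Integer> ids = new ArrayList<>();
        for (int w = 0; w < vocab.size(); w++) ids.add(w);
        ids.sort((a, b) -> topicWord[k][b] - topicWord[k][a]);
        List<String> out = new ArrayList<>();
        for (int i = 0; i < Math.min(n, ids.size()); i++) out.add(vocab.get(ids.get(i)));
        return out;
    }

    public static void main(String[] args) {
        List<String> corpus = List.of(
                "stocks market trading shares investors",
                "market investors stocks prices trading",
                "football match goal players team",
                "team players match season goal");
        TinyLda lda = new TinyLda(corpus, 2, 0.1, 0.01, 42L);
        for (int it = 0; it < 200; it++) lda.sweep();
        for (int k = 0; k < 2; k++) System.out.println("topic " + k + ": " + lda.topWords(k, 4));
    }
}
```

On a corpus this small the topics are not guaranteed to separate cleanly; results generally stabilize with more documents and more sweeps.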

8. Apache Tika

Apache Tika is not a traditional NLP framework, but it plays a critical role in the NLP pipeline as a preprocessing tool. Its primary function is to extract text and metadata from a wide range of file formats, including PDFs and multimedia files. It also provides language detection.

Working alongside NLP libraries like CoreNLP, Tika lets developers feed unstructured documents into NLP pipelines for further analysis. Its biggest strength is its ability to handle diverse document formats, which makes it indispensable in industries such as finance, where text analysis begins with extracting content from documents.

9. Apache Lucene

Apache Lucene is a high-performance, Java-based full-text search library widely used in search engines and text retrieval systems. Although Lucene is not an NLP framework as such, it provides two essential text processing features: tokenization and indexing. Its ability to handle enormous volumes of text efficiently is a major advantage for NLP applications that involve search. It lacks sophisticated features like sentiment analysis, but it excels at building scalable search solutions.
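Tokenization and indexing are the heart of full-text search. The toy inverted index below (a hypothetical `TinyInvertedIndex` class, not Lucene’s API) maps each lowercased term to the IDs of the documents that contain it and answers AND queries by intersecting those sets. Lucene builds on the same idea with configurable analyzers, relevance scoring, and compact on-disk index structures.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// A toy in-memory inverted index: term -> sorted set of document ids (not Lucene's API).
class TinyInvertedIndex {
    private final Map<String, Set<Integer>> postings = new HashMap<>();
    private final List<String> documents = new ArrayList<>();

    // "Analysis" step: lowercase and split on anything that is not a letter or digit.
    static List<String> analyze(String text) {
        List<String> terms = new ArrayList<>();
        for (String t : text.toLowerCase(Locale.ROOT).split("[^\\p{L}\\p{Nd}]+")) {
            if (!t.isEmpty()) terms.add(t);
        }
        return terms;
    }

    // Stores the document and records its id in the posting set of every term it contains.
    int add(String text) {
        int id = documents.size();
        documents.add(text);
        for (String term : analyze(text)) {
            postings.computeIfAbsent(term, k -> new TreeSet<>()).add(id);
        }
        return id;
    }

    // Boolean AND query: ids of documents that contain every query term.
    Set<Integer> searchAll(String query) {
        Set<Integer> result = null;
        for (String term : analyze(query)) {
            Set<Integer> ids = postings.getOrDefault(term, Set.of());
            if (result == null) result = new TreeSet<>(ids);
            else result.retainAll(ids);
        }
        return result == null ? Set.of() : result;
    }

    String get(int id) { return documents.get(id); }

    public static void main(String[] args) {
        TinyInvertedIndex index = new TinyInvertedIndex();
        index.add("Lucene indexes text for fast search.");
        index.add("Tokenization splits text into terms.");
        index.add("Search engines rely on inverted indexes.");
        System.out.println(index.searchAll("text search")); // [0]
    }
}
```

Because lookups touch only the posting sets of the query terms, query cost depends on how many documents match those terms rather than on the total size of the collection, which is what makes this structure scale.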

10. Apache UIMA

Apache UIMA (Unstructured Information Management Architecture) was created specifically to analyze large volumes of unstructured data, including text and video. By allowing multiple annotators to work on the same piece of data, it provides a scalable framework for building complex NLP pipelines. UIMA also supports interoperability among components and connects well with big data systems, which makes it very useful for organizations.

It has been adopted in sectors such as legal services, where large-scale processing and analysis of unstructured text is essential. The main challenge with UIMA, however, is its complexity: configuring and setting up pipelines requires a high level of skill.
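UIMA’s central idea is that independent annotators add their results to one shared analysis structure (the CAS, or Common Analysis Structure), so later stages can build on earlier ones. The sketch below imitates that pattern with hypothetical plain-Java types (`Annotation`, `AnalysisDocument`, `Annotator`, `Pipeline`); these are not UIMA’s classes, which are configured through XML descriptors and much richer type systems. It assumes Java 17 for records and `Stream.toList()`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical types imitating UIMA's shared-analysis-structure pattern; NOT UIMA's API.
record Annotation(String type, int begin, int end) {}

class AnalysisDocument {
    final String text;
    final List<Annotation> annotations = new ArrayList<>();

    AnalysisDocument(String text) { this.text = text; }

    // All annotations of one type, returned as an unmodifiable snapshot.
    List<Annotation> select(String type) {
        return annotations.stream().filter(a -> a.type().equals(type)).toList();
    }

    String covered(Annotation a) { return text.substring(a.begin(), a.end()); }
}

interface Annotator {
    void process(AnalysisDocument doc);
}

// Stage 1: marks every run of letters or digits as a Token.
class TokenAnnotator implements Annotator {
    private static final Pattern WORD = Pattern.compile("[\\p{L}\\p{Nd}]+");

    public void process(AnalysisDocument doc) {
        Matcher m = WORD.matcher(doc.text);
        while (m.find()) doc.annotations.add(new Annotation("Token", m.start(), m.end()));
    }
}

// Stage 2: reads the Tokens produced upstream and marks all-digit ones as Numbers.
class NumberAnnotator implements Annotator {
    public void process(AnalysisDocument doc) {
        for (Annotation token : doc.select("Token")) {
            if (doc.covered(token).chars().allMatch(Character::isDigit)) {
                doc.annotations.add(new Annotation("Number", token.begin(), token.end()));
            }
        }
    }
}

// Runs the annotators in order over one shared document.
class Pipeline {
    private final List<Annotator> annotators;

    Pipeline(List<Annotator> annotators) { this.annotators = annotators; }

    AnalysisDocument run(String text) {
        AnalysisDocument doc = new AnalysisDocument(text);
        for (Annotator annotator : annotators) annotator.process(doc);
        return doc;
    }

    public static void main(String[] args) {
        Pipeline pipeline = new Pipeline(List.of(new TokenAnnotator(), new NumberAnnotator()));
        AnalysisDocument doc = pipeline.run("The contract names 3 parties and 12 clauses.");
        for (Annotation a : doc.select("Number")) {
            System.out.println(doc.covered(a) + " [" + a.begin() + ", " + a.end() + ")");
        }
    }
}
```

Keeping annotations as offsets into the original text, rather than copying substrings, lets every stage refer to the same spans, which is the property that makes it practical for many annotators to collaborate on one document.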

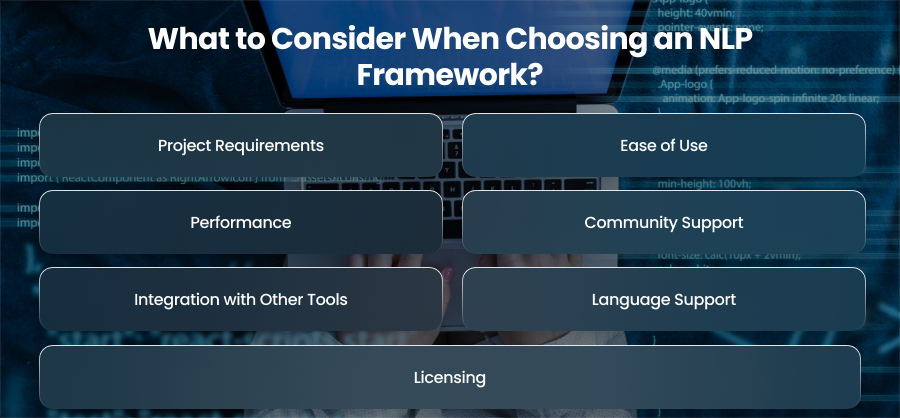

What to Consider When Choosing an NLP Framework?

Project Requirements

Clearly defining your project’s goals and needs is the first step in choosing an NLP framework. Some frameworks excel at complex machine learning applications, while others are better suited to relatively simple tasks like tokenization. For example, MALLET might be a good option if your project calls for topic modeling, whereas Apache UIMA could be a better fit if your main challenge is handling large volumes of unstructured text.

Ease of Use

Not all frameworks are equally easy to learn and implement. Tools like OpenNLP have relatively straightforward APIs and good documentation, which makes them beginner-friendly. More advanced frameworks may require deeper technical knowledge and familiarity with machine learning models or system integration. When choosing, consider your team’s level of expertise and how quickly you need to get your NLP solution up and running.

Performance

Performance is crucial, especially for real-time applications. Frameworks like Apache Lucene can index and search large text datasets quickly and efficiently, while UIMA’s scalability makes it a popular choice for companies handling massive amounts of data.

Community Support

An active developer community and thorough documentation can greatly reduce the difficulties you face during development. Long-standing frameworks like GATE benefit from sizable communities and regular updates, whereas lesser-known frameworks may lack this support, which makes troubleshooting harder. When possible, choose frameworks backed by strong communities and continuous development.

Integration with Other Tools

Many NLP projects require integration with other systems, such as databases or big data platforms. For example, Apache Tika integrates easily with search and indexing tools like Solr, and Deeplearning4j works with distributed computing systems such as Hadoop and Spark. Making sure the framework you select can work with your current tech stack saves effort in the long run.

Language Support

While many frameworks prioritize English, some offer multilingual support for a wider variety of languages. For example, Stanford NLP provides models and tools for several languages, which benefits global businesses and research initiatives. Frameworks that support customization also let you adapt models to domain-specific requirements.

Licensing

Licensing is crucial, particularly for commercial use. Although most Java NLP frameworks are open source, their licenses differ, ranging from the permissive Apache License to more restrictive GPL-based licenses. Always review the license terms to ensure compliance with your company’s policies and avoid legal issues.

Final Words

Java offers a dependable ecosystem of NLP frameworks, each suited to specific tasks, from parsing to large-scale text analysis. By weighing project requirements, scalability, and community support, developers can select the framework that best fits their needs, and the right choice helps ensure accuracy and long-term value in NLP applications.