According to a report by 6Sense, Docker holds an 87.76% share of the containerization market, and IBM reports a similar figure of 82.84%. These statistics reflect Docker’s position as the de facto standard containerization tool.

Docker lets developers build and run applications consistently across many different environments. Understanding Docker containers can therefore greatly improve your workflow, whether you’re a developer or a DevOps engineer.

So, in this CodingCops blog, we will explore everything you need to know about Docker containers. We’ll also share best practices to help you set up your Docker containers effectively.

Docker Containers

Docker containers are a lightweight form of operating-system-level virtualization. Each container packages an application together with all the configuration files and dependencies it needs to run consistently. Traditional virtual machines each run a full guest operating system. Containers instead share the host OS kernel while keeping their user spaces isolated from one another.

This design makes containers lightweight and resource efficient. They are also portable, so an application runs the same way on any system that supports Docker, whether that’s a staging environment or a production server in the cloud.

Docker achieves this through the Docker Engine, which builds and runs containers. Each container is created from a Docker image, a read-only template that is usually built from a Dockerfile. Because images can be tagged and versioned, developers can deploy applications with a high degree of consistency and control.

Containers also isolate filesystems and processes from one another, which improves security and reliability on multi-tenant systems.

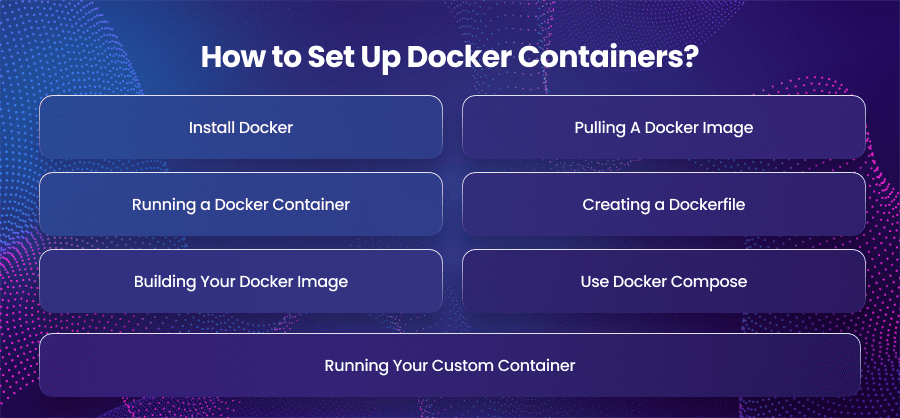

How to Set Up Docker Containers?

Install Docker

Installing Docker is the first step in using Docker containers. Docker Desktop is available for Windows, macOS, and Linux. It bundles everything you need to get started, including the Docker Engine, the Docker CLI, and Docker Compose. On Linux servers, you can also install Docker Engine on its own.

Pulling A Docker Image

After installing Docker, you can pull a base image from Docker Hub, Docker’s public image registry. Images act as blueprints for your containers. Each one contains everything needed to run a particular piece of software, from the operating system’s user-space files to the language runtime and libraries.
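Image names passed to `docker pull` are usually shorthand. The hypothetical helper below is a rough sketch of how Docker expands them. It fills in the default registry (`docker.io`), the `library/` namespace used by official images, and the implicit `latest` tag. It is a simplified model of Docker's reference parsing, not Docker's own code, and it performs no validation.

```python
def normalize_image_ref(ref: str) -> str:
    """Hypothetical helper: expand a short image reference (simplified model)."""
    # Split off a content digest such as "@sha256:..." if one is present.
    name, at, digest = ref.partition("@")

    # A tag follows the last ":" only when that ":" comes after the last "/";
    # otherwise the ":" belongs to a registry port such as "localhost:5000".
    tag = ""
    if name.rfind(":") > name.rfind("/"):
        name, _, tag = name.rpartition(":")

    # The first path component is a registry host only if it looks like one.
    first, slash, rest = name.partition("/")
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = "docker.io", name
        if "/" not in path:
            path = "library/" + path  # official images live under "library/"

    if not tag and not at:
        tag = "latest"  # implicit default when neither tag nor digest is given

    result = f"{registry}/{path}"
    if tag:
        result += f":{tag}"
    if at:
        result += f"@{digest}"
    return result
```

For example, `docker pull python:3.12-slim` fetches `docker.io/library/python:3.12-slim`, which is also what the helper returns for that input.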

Running a Docker Container

Once the image is downloaded, you can run a container from it. This creates a fresh, isolated instance of the image that behaves much like a lightweight virtual machine. You can open an interactive shell inside the container from the command line, which makes it well suited to testing and development.
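The command-line step can be sketched from Python. The hypothetical `docker_run_argv` helper below only assembles the arguments. `--rm` removes the container when it exits, and `-it` attaches an interactive terminal. Actually running the command assumes the Docker CLI is on your PATH.

```python
from typing import Optional, Sequence


def docker_run_argv(image: str, command: Optional[Sequence[str]] = None,
                    interactive: bool = True, remove: bool = True) -> list[str]:
    """Hypothetical helper: assemble a `docker run` command line."""
    argv = ["docker", "run"]
    if remove:
        argv.append("--rm")  # delete the container once it exits
    if interactive:
        argv.append("-it")   # keep STDIN open and allocate a pseudo-TTY
    argv.append(image)
    argv.extend(command or [])  # optional command overriding the image default
    return argv


# Usage (requires the Docker CLI on PATH):
#   import subprocess
#   subprocess.run(docker_run_argv("ubuntu:24.04", ["bash"]), check=True)
```

Keeping argument assembly separate from execution makes the command easy to log or test without a Docker daemon.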

Creating a Dockerfile

If you want a customized environment, you need to write a Dockerfile. This file contains the instructions Docker follows to build a new image. For instance, it specifies which base image to start from, which dependencies to install, and which command to run when the container starts.
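To make these instructions concrete, here is a minimal sketch that renders a small Dockerfile from Python. The helper name, the Flask dependency, and the `app.py` entry point are illustrative assumptions. `CMD` is written in exec form (a JSON array), and `json.dumps` produces it with the double quotes Docker requires.

```python
import json


def render_dockerfile(base_image: str, pip_packages: list[str], cmd: list[str]) -> str:
    """Hypothetical helper: render a minimal Dockerfile for a Python app."""
    lines = [
        f"FROM {base_image}",   # base image to start from
        "WORKDIR /app",         # working directory for the following instructions
    ]
    if pip_packages:
        # Install dependencies in a single layer without keeping pip's cache.
        lines.append("RUN pip install --no-cache-dir " + " ".join(pip_packages))
    lines.append("COPY . .")    # copy the build context into /app
    # Exec form: a JSON array, which json.dumps renders with double quotes.
    lines.append("CMD " + json.dumps(cmd))
    return "\n".join(lines) + "\n"


print(render_dockerfile("python:3.12-slim", ["flask==3.0.3"], ["python", "app.py"]))
```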

Building Your Docker Image

Once you have written your Dockerfile, you use it to build your own Docker image. During the build, everything specified in the Dockerfile is assembled into a single image that is ready for deployment and distribution.
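The build step comes down to `docker build -t NAME:TAG CONTEXT`. The hypothetical helper below assembles that command. It rejects tags that don't match the tag grammar of image references: a word character followed by up to 127 word characters, dots, or dashes.

```python
import re
from typing import Optional

# Tag grammar used by image references (ASCII word characters, ".", "-").
TAG_RE = re.compile(r"[\w][\w.-]{0,127}", re.ASCII)


def docker_build_argv(name: str, tag: str, context: str = ".",
                      dockerfile: Optional[str] = None) -> list[str]:
    """Hypothetical helper: assemble a `docker build` command line."""
    if not TAG_RE.fullmatch(tag):
        raise ValueError(f"invalid image tag: {tag!r}")
    argv = ["docker", "build", "-t", f"{name}:{tag}"]
    if dockerfile:
        argv += ["-f", dockerfile]  # use a Dockerfile other than CONTEXT/Dockerfile
    argv.append(context)            # directory sent to the builder as build context
    return argv
```

For example, `docker_build_argv("myapp", "1.0")` yields the familiar `docker build -t myapp:1.0 .`.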

Running Your Custom Container

After building your image, you can launch a container using it. You can map ports between your container and the host machine. This is especially useful when your application serves web content or APIs.
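Port publishing uses `-p HOST_PORT:CONTAINER_PORT`. A small, hypothetical helper that turns a mapping into those flags, with a basic range check, might look like this.

```python
def publish_flags(ports: dict[int, int]) -> list[str]:
    """Hypothetical helper: turn {host_port: container_port} into -p flags."""
    flags: list[str] = []
    for host_port, container_port in ports.items():
        for port in (host_port, container_port):
            if not 1 <= port <= 65535:
                raise ValueError(f"port out of range: {port}")
        # Docker's order is HOST:CONTAINER.
        flags += ["-p", f"{host_port}:{container_port}"]
    return flags


argv = ["docker", "run", "--rm", *publish_flags({8080: 80}), "nginx:1.27"]
```

Running that command serves the nginx welcome page at http://localhost:8080, since the official nginx image listens on port 80 inside the container.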

Use Docker Compose

Applications made up of several services, such as a web application that also needs a database, benefit from Docker Compose. Compose lets you define and manage multi-container applications in a single YAML file. Once your docker-compose.yml file is complete, a single command launches the whole environment, which makes orchestration simple and reproducible.
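As an illustration, the sketch below describes a hypothetical two-service setup as a Python dict and serializes it. The stack is a web app built from the local Dockerfile plus PostgreSQL. JSON is a subset of YAML 1.2, so the output should be accepted as a Compose file. The service names, credentials, and `DATABASE_URL` variable are placeholders, and the plain-text password is for local development only.

```python
import json

# Hypothetical two-service stack: a web app built from ./Dockerfile plus PostgreSQL.
compose = {
    "services": {
        "web": {
            "build": ".",
            "ports": ["8000:8000"],
            "environment": {
                # Service names double as hostnames on the Compose network.
                "DATABASE_URL": "postgresql://app:example@db:5432/app",
            },
            "depends_on": ["db"],  # start order only, not a readiness check
        },
        "db": {
            "image": "postgres:16",  # pinned major version
            "environment": {
                "POSTGRES_USER": "app",
                "POSTGRES_PASSWORD": "example",  # placeholder, local use only
                "POSTGRES_DB": "app",
            },
            "volumes": ["db-data:/var/lib/postgresql/data"],
        },
    },
    "volumes": {"db-data": {}},  # named volume survives container re-creation
}

# JSON is a subset of YAML 1.2, so this text can be saved as docker-compose.yml.
compose_text = json.dumps(compose, indent=2)
print(compose_text)
```

Saved as docker-compose.yml, `docker compose up` would start both services.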

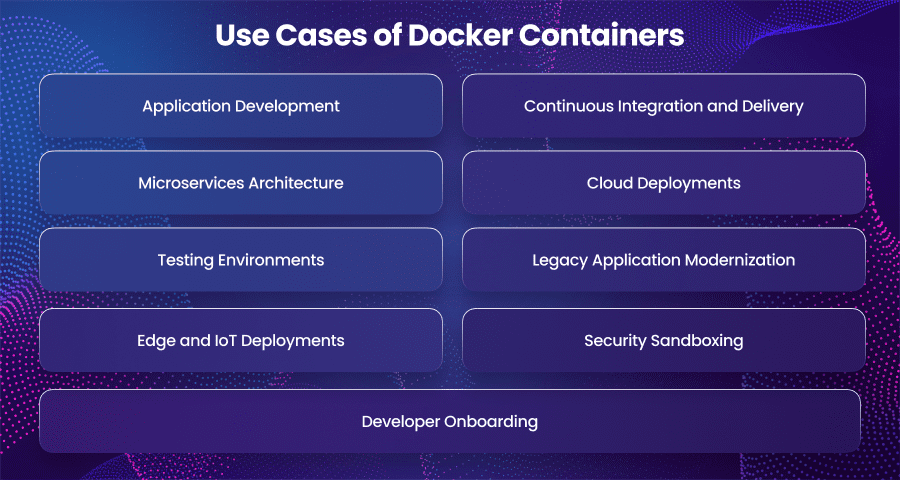

Use Cases of Docker Containers

Application Development

Docker has changed development workflows by offering isolated and reproducible environments. Developers no longer have to worry about an application that works on one machine failing on another. With Dockerfiles and Compose files, the development environment can be version-controlled and shared across teams.

This ensures that every developer works in the same setup. It also eliminates inconsistencies caused by different OS versions or conflicting libraries.

Continuous Integration and Delivery

Docker is also a core part of modern CI/CD pipelines. Containers ensure that the same environment is used from development through production. CI tools like Jenkins and GitHub Actions use Docker containers to build and test applications quickly and consistently, so you can package your app and deploy it with confidence. This reduces deployment failures and simplifies rollback strategies.

Microservices Architecture

In a microservices setup, each service is an independent unit with its own runtime and configuration. Docker makes it easy to encapsulate each microservice in its own container, which simplifies deployment and scaling. Containers also make it easy to replace or remove services independently.

Cloud Deployments

Docker is a natural fit for cloud applications. Major cloud platforms such as AWS, Google Cloud, and Azure natively support containers, so you can deploy programs with services like AWS Fargate and Azure Container Instances. Docker also makes it simpler to run hybrid cloud configurations and move workloads between cloud providers. This gives your team flexibility and reduces vendor lock-in.

Testing Environments

You can also use Docker to build disposable environments for testing or learning new technologies. For example, you can try out a new version of PostgreSQL or a new machine learning framework. With Docker containers, you don’t have to clutter your main OS or manage conflicting software versions.

Legacy Application Modernization

Docker can also containerize older applications, making them easier to maintain and deploy. Instead of rewriting everything from scratch, you can wrap legacy software in containers and extend it with APIs. This lets you gradually transition to newer architectures while preserving existing functionality.

Edge and IoT Deployments

Docker isn’t limited to the cloud; containers are increasingly used at the edge for IoT applications. Because they are lightweight and efficient, Docker containers can run on small devices like the Raspberry Pi. This enables real-time data processing and decision-making closer to the data source, without constant round trips to the cloud.

Security Sandboxing

Containers are a useful way to isolate and analyze untrusted code. Moreover, researchers and security professionals often use Docker to create sandbox environments for malware analysis and incident response. This minimizes risk to their systems.

Developer Onboarding

New team members can hit the ground running with Docker. Instead of spending hours setting up their environments, developers can clone a repository and run docker compose up (or docker-compose up with the older standalone tool). They can then start coding immediately in a pre-configured, production-like environment.

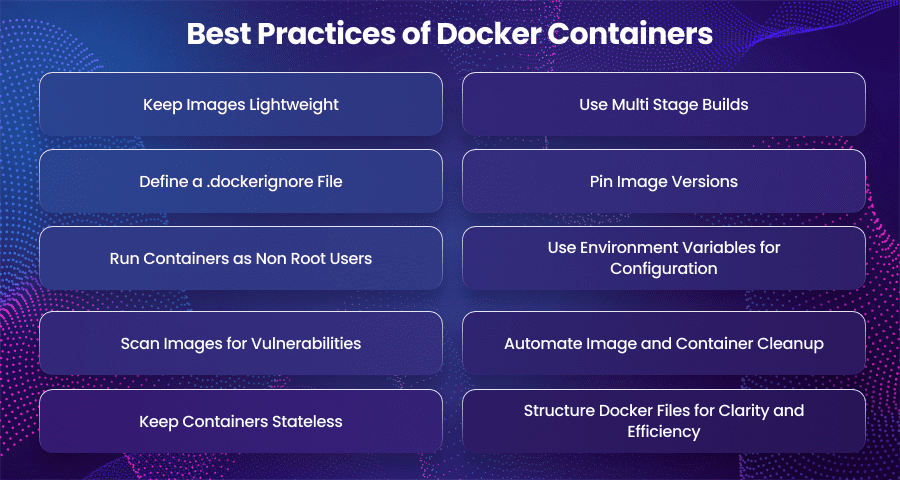

Best Practices of Docker Containers

Keep Images Lightweight

Using a minimal base image such as Alpine Linux reduces the overall size of your container image, which can speed up builds and deployments. Avoid adding packages or tools to the image unless they are absolutely needed. Smaller images are also easier to maintain and more secure, because they contain less software that could be vulnerable.

Use Multi-Stage Builds

Multi-stage builds are an effective way to create lean images. They separate the build environment from the final runtime image. You compile code and install build dependencies in one stage, then copy only the necessary artifacts into the final image. This keeps your production image clean and efficient.
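Here is a sketch of a two-stage Dockerfile for a hypothetical Go service, kept in a Python string. It comes with a small helper that lists each stage's base image, which shows what the final image ships on. The sketch assumes the build context contains a Go module. Only the compiled binary is copied out of the `build` stage.

```python
# Hypothetical multi-stage Dockerfile for a Go service.
MULTI_STAGE_DOCKERFILE = """\
# Stage 1: full Go toolchain, used only to compile the binary
FROM golang:1.22 AS build
WORKDIR /src
COPY . .
RUN CGO_ENABLED=0 go build -o /out/app .

# Stage 2: small runtime image that receives only the compiled binary
FROM alpine:3.20
COPY --from=build /out/app /usr/local/bin/app
ENTRYPOINT ["/usr/local/bin/app"]
"""


def stage_bases(dockerfile: str) -> list[str]:
    """Hypothetical helper: return the base image of each FROM stage, in order."""
    bases = []
    for line in dockerfile.splitlines():
        tokens = line.split()
        if tokens and tokens[0].upper() == "FROM":
            # Skip flags such as --platform=...; the next token is the image.
            args = [t for t in tokens[1:] if not t.startswith("--")]
            bases.append(args[0])
    return bases


# By default, only the last stage becomes the image that docker build outputs.
print("final image is based on:", stage_bases(MULTI_STAGE_DOCKERFILE)[-1])
```

`CGO_ENABLED=0` produces a statically linked binary, so it can run on Alpine without the Go toolchain or glibc.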

Define a .dockerignore File

Similar to .gitignore for Git, a .dockerignore file keeps specific files and folders out of the build context, such as documentation and build logs. Excluded files never end up in your image. This reduces the image size and lessens the risk of leaking sensitive data.
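.dockerignore rules are evaluated in order, and the last matching rule wins. `!` marks an exception, and `**` matches any number of directories. The hypothetical matcher below approximates those rules to show how a file is classified. It does not support character classes or every edge case of Docker's own implementation.

```python
import re


def _pattern_to_regex(pattern: str) -> re.Pattern:
    """Hypothetical helper: translate one .dockerignore glob (no [...] classes)."""
    out, i = [], 0
    while i < len(pattern):
        if pattern.startswith("**/", i):
            out.append("(?:.*/)?")  # zero or more leading directories
            i += 3
        elif pattern.startswith("**", i):
            out.append(".*")        # anything, including "/"
            i += 2
        elif pattern[i] == "*":
            out.append("[^/]*")     # anything within one path segment
            i += 1
        elif pattern[i] == "?":
            out.append("[^/]")      # a single character within a segment
            i += 1
        else:
            out.append(re.escape(pattern[i]))
            i += 1
    return re.compile("".join(out))


def is_ignored(path: str, rules: list[str]) -> bool:
    """Hypothetical helper approximating .dockerignore: the last matching rule wins."""
    parts = path.strip("/").split("/")
    # A rule that matches a directory also covers everything beneath it,
    # so test the path itself and each of its parent directories.
    candidates = ["/".join(parts[:n]) for n in range(1, len(parts) + 1)]
    ignored = False
    for raw in rules:
        rule = raw.strip()
        if not rule or rule.startswith("#"):
            continue  # blank lines and comments
        negate = rule.startswith("!")
        regex = _pattern_to_regex(rule.lstrip("!").strip("/"))
        if any(regex.fullmatch(c) for c in candidates):
            ignored = not negate
    return ignored


rules = [".git", "*.log", "!keep.log", "docs", "**/__pycache__"]
```

Note that `*.log` here only matches log files at the root of the context, while `**/__pycache__` matches at any depth.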

Pin Image Versions

You should avoid using generic image tags like latest in production. This can lead to unexpected behavior when base images are updated. Instead, specify exact versions of your base images to ensure consistency across environments and deployments.
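A check for this is easy to automate. The sketch below is a hypothetical lint that flags FROM lines with no tag or with the `latest` tag. It treats digests (`@sha256:...`), `scratch`, and references to earlier build stages as pinned, and it does not expand `ARG` substitutions.

```python
def unpinned_base_images(dockerfile: str) -> list[str]:
    """Hypothetical lint: list FROM images with no tag or the floating "latest" tag."""
    stage_names: set[str] = set()
    unpinned: list[str] = []
    for line in dockerfile.splitlines():
        tokens = line.split()
        if not tokens or tokens[0].upper() != "FROM":
            continue
        args = [t for t in tokens[1:] if not t.startswith("--")]  # drop --platform etc.
        image = args[0]
        # Earlier build stages, "scratch", and digest references count as pinned.
        if image not in stage_names and image != "scratch" and "@" not in image:
            last_segment = image.rsplit("/", 1)[-1]  # so registry ports aren't read as tags
            tag = last_segment.partition(":")[2]
            if tag in ("", "latest"):
                unpinned.append(image)
        if len(args) >= 3 and args[1].lower() == "as":
            stage_names.add(args[2])
    return unpinned
```

Running a check like this in CI catches floating tags before they reach production.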

Run Containers as Non-Root Users

By default, processes inside a Docker container run as root unless the image specifies another user, which poses a serious security concern. It is advisable to create a non-root user in your Dockerfile and switch to it. This improves security and limits the potential damage if the container is compromised.
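On Alpine-based images, a common pattern is to create a system user with BusyBox's `addgroup`/`adduser` and switch to it with `USER`. The example Dockerfile below assumes a hypothetical `app.py`. The hypothetical check after it inspects the final stage. It assumes that a stage without a `USER` instruction runs as root, which holds for most official base images but not all.

```python
EXAMPLE_DOCKERFILE = """\
FROM python:3.12-alpine
WORKDIR /app
COPY . .
# Create an unprivileged system user and group (BusyBox syntax on Alpine)
RUN addgroup -S app && adduser -S -G app app
USER app
CMD ["python", "app.py"]
"""


def final_stage_runs_as_root(dockerfile: str) -> bool:
    """Hypothetical check: guess whether the last stage runs as root."""
    user = None
    for line in dockerfile.splitlines():
        tokens = line.split()
        if not tokens:
            continue
        keyword = tokens[0].upper()
        if keyword == "FROM":
            user = None          # a new stage starts with the base image's default user
        elif keyword == "USER" and len(tokens) > 1:
            user = tokens[1]     # may be "name", "uid", or "user:group"
    if user is None:
        return True              # assumption: the base image's default user is root
    return user.split(":")[0] in ("root", "0")
```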

Use Environment Variables for Configuration

Instead of hardcoding configuration values directly into your application or Dockerfile, use environment variables. This makes your containers more portable and flexible, so the same image can adapt to different environments such as development and production.
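In application code, this usually means reading settings from the environment with sensible defaults. The sketch below assumes hypothetical variable names (`APP_ENV`, `PORT`, `DATABASE_URL`). It fails fast when a required variable is missing.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    app_env: str
    port: int
    database_url: str


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Hypothetical helper: build settings from environment variables."""
    return Settings(
        app_env=env.get("APP_ENV", "development"),  # optional, with a default
        port=int(env.get("PORT", "8000")),          # env values are always strings
        database_url=env["DATABASE_URL"],           # required: KeyError if missing
    )
```

The same image can then be started with different values, for example `docker run -e APP_ENV=production -e PORT=8080 -e DATABASE_URL=postgresql://db:5432/app myapp:1.0`.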

Scan Images for Vulnerabilities

Security is critical in containerized environments. Regularly scan your Docker images with tools like Docker Scout to identify and fix known vulnerabilities. Build these scans into your development process so vulnerable images never reach production.

Automate Image and Container Cleanup

Over time, unused images and containers build up and take up disk space. Configure your CI tools to clean up artifacts after builds, or set up automatic cleanup with commands like docker system prune. This keeps your host lean and manageable.

Keep Containers Stateless

Keep containers stateless and immutable whenever possible. Instead of storing data inside the container, use external databases or Docker volumes for persistent data. That way, containers can be deleted and recreated at any time without data loss.

Structure Dockerfiles for Clarity and Efficiency

Keep your Dockerfiles clean: group related commands together and minimize the number of layers. This not only improves build performance but also makes your setup easier for others to understand and maintain.

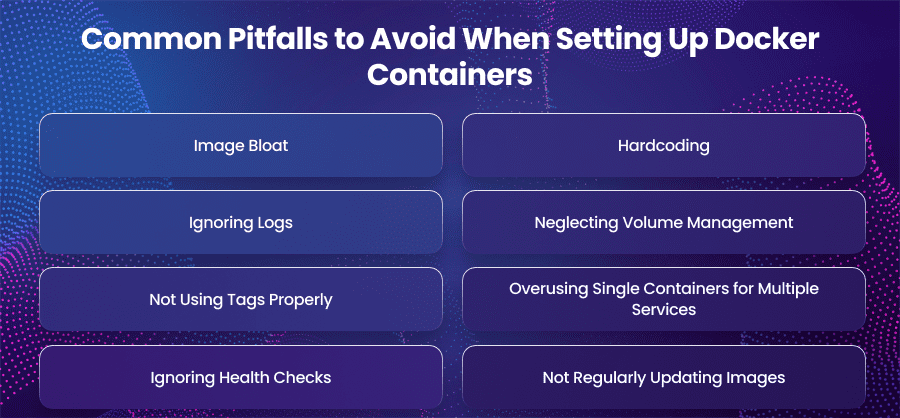

Common Pitfalls to Avoid When Setting Up Docker Containers

Image Bloat

Installing unnecessary packages or using large base images leads to bloated containers, which increases build time and storage usage. Use minimal base images like Alpine and clean up unnecessary files.

Hardcoding

Storing sensitive data such as passwords or database credentials directly in Dockerfiles or environment variables leaves your Docker environments vulnerable. Instead, use a secrets management solution such as Docker Secrets.
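Docker secrets (with Swarm or Compose) are mounted into the container as files under `/run/secrets/<name>`. The application reads them at runtime instead of having them baked into the image. The hypothetical helper below shows that pattern. The secret name `db_password` is an assumption.

```python
from pathlib import Path


def read_secret(name: str, secrets_dir: str = "/run/secrets") -> str:
    """Hypothetical helper: read a secret that Docker mounted as a file."""
    path = Path(secrets_dir) / name
    try:
        # Drop only a trailing newline; keep every other character of the secret.
        return path.read_text(encoding="utf-8").rstrip("\r\n")
    except FileNotFoundError:
        raise RuntimeError(f"secret {name!r} is not mounted at {path}") from None


# Usage inside the container:
#   db_password = read_secret("db_password")
```

Raising a clear error on a missing file makes a misconfigured deployment fail at startup rather than at the first database call.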

Ignoring Logs

Containers can run without exposing or persisting their logs, which makes debugging a nightmare when issues arise. Configure logging drivers or integrate with log aggregation tools like the ELK Stack or Splunk. Also, have your application write its logs to stdout and stderr, which is what Docker’s logging drivers and most orchestration tools expect.
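For a Python service, sending logs to stdout can be as simple as the sketch below. The hypothetical `configure_logging` helper replaces the root logger's handlers with a single stdout handler. Docker then captures the output, so `docker logs <container>` and your logging driver can pick it up. The format string is an arbitrary choice.

```python
import logging
import sys


def configure_logging(level: int = logging.INFO) -> logging.Logger:
    """Hypothetical helper: send all log records to stdout for Docker to collect."""
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(
        logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s")
    )
    root = logging.getLogger()
    root.handlers[:] = [handler]  # drop any file or stderr handlers set up earlier
    root.setLevel(level)
    return root


configure_logging()
logging.getLogger("app").info("service started")
```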

Neglecting Volume Management

Improper volume setup can cause permission issues or accidental data loss, especially when containers are rebuilt or deleted. Clearly define volumes in docker-compose.yml. Use named volumes or bind mounts with proper user permissions, and back up persistent data regularly.

Not Using Tags Properly

Relying on the latest tag can introduce inconsistencies across environments, especially when the underlying image changes without notice. Always pin images to a specific, tested version. This ensures reproducibility and reduces risk in development pipelines.

Overusing Single Containers for Multiple Services

Packing multiple services into a single container breaks the microservices philosophy and complicates scaling and maintenance. Instead, use Docker Compose or Kubernetes to manage multi-container applications.

Ignoring Health Checks

Without proper health checks, orchestration tools cannot detect and recover from application failures. So, use the HEALTHCHECK instruction in your Dockerfile or define health checks in your Compose or Kubernetes configs. This helps ensure resilience and uptime.
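Slim images often lack curl, so a health check can be a short script in the application's own language. The sketch below is a hypothetical Python probe for an assumed `/health` endpoint on port 8000. It treats any 2xx response as healthy. Anything else, including connection errors and timeouts, counts as unhealthy.

```python
import urllib.request


def is_healthy(url: str, timeout: float = 3.0) -> bool:
    """Hypothetical probe: True if the endpoint returns 2xx within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except OSError:
        # URLError, HTTPError (4xx/5xx), refused connections, and timeouts
        # are all OSError subclasses.
        return False


def main(url: str = "http://localhost:8000/health") -> int:
    """Exit code for HEALTHCHECK: 0 means healthy, 1 means unhealthy."""
    return 0 if is_healthy(url) else 1
```

Saved as a hypothetical healthcheck.py, the script needs `if __name__ == "__main__": sys.exit(main())` at the bottom. It could then be wired up with `HEALTHCHECK --interval=30s --timeout=5s --retries=3 CMD ["python", "healthcheck.py"]`. Docker marks the container unhealthy after the configured number of consecutive failures.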

Not Regularly Updating Images

Out-of-date base images leave your containers exposed to known security flaws. Use tools like Docker Scout to automate image scanning, and set up a regular process for updating and rebuilding images so they include the latest security fixes.

Final Words

Docker containers have changed how software is developed and scaled. They make it easy to create isolated, repeatable environments, so development and operations teams can work together more efficiently.