While riding the metro, I overheard two people talking about how companies manage their complex workflows and big data pipelines across different environments. Their conversation gave me the topic for this post.

So, today CodingCops is here to talk about managing complex workflows and massive data. Let us take you into the world of Apache Airflow, a powerful open-source tool for orchestrating workflows and data pipelines.

Whether you are dealing with intricate ETL processes, automating machine learning pipelines, or managing cloud infrastructure, Apache Airflow is there to rescue you. It offers scalability and flexibility that help you handle such chaos easily.

But how can you make sure you are getting the most out of Airflow? Don’t worry, CodingCops will take you through the whole process. You will get key use cases, architectural insights, expert tips, and FAQs that will help you run Apache Airflow as efficiently as possible.

Understanding Apache Airflow

Apache Airflow is a free, open-source tool that has been widely adopted by the data engineering community to author, schedule, and monitor data pipelines. It orchestrates batch-oriented workflows; it coordinates the systems that process the data rather than processing data streams itself.

It has a steep learning curve, but it is highly customizable, and workflows are written in standard Python.

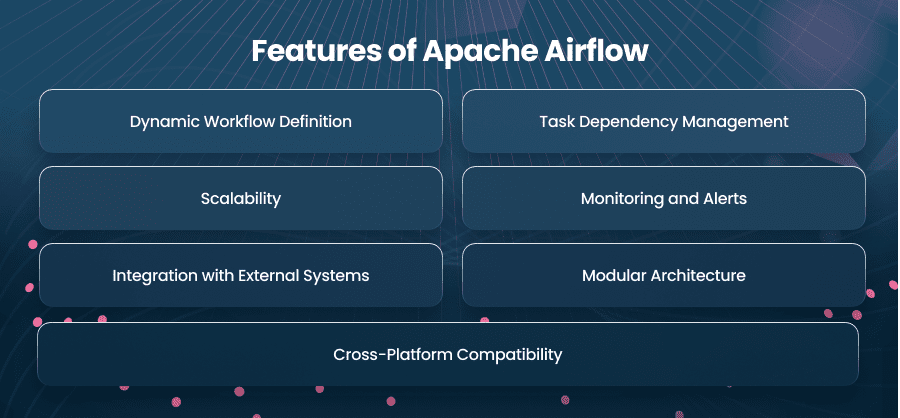

Features of Apache Airflow

Dynamic Workflow Definition

Workflows are defined as Directed Acyclic Graphs (DAGs) in Python code, so tasks can be generated programmatically with Python loops, conditional logic, and parameters.

Task Dependency Management

Airflow automatically manages task dependencies, ensuring that tasks are executed in the correct order based on the DAG structure.
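To make "the correct order" concrete, here is a minimal sketch in plain Python. It does not use Airflow's API; it only models the idea with the standard library's `graphlib`, and the task names and the `UPSTREAM` mapping are made up for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it must wait for.
UPSTREAM = {
    "extract_sales": set(),
    "extract_inventory": set(),
    "transform": {"extract_sales", "extract_inventory"},
    "load": {"transform"},
}


def execution_order(upstream):
    """Return one valid order in which every task follows its upstream tasks."""
    return list(TopologicalSorter(upstream).static_order())


order = execution_order(UPSTREAM)
print(order)  # both extract tasks come before "transform", and "load" is last
```

The two extract tasks have no dependency on each other, so either may come first. That freedom is what lets an orchestrator run independent tasks in parallel.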

Scalability

Provides distributed task execution through executors like CeleryExecutor and KubernetesExecutor, so a deployment can scale from a single machine to a cluster of workers.

Monitoring and Alerts

Features a web-based UI to monitor workflows, check task statuses, view logs, and trigger tasks manually.

A built-in alerting system notifies users of task failures or SLA breaches via email or other notification channels.

Integration with External Systems

Seamlessly integrates with data processing tools, cloud platforms, databases, and DevOps tools like Docker and Kubernetes.

Modular Architecture

Separates components like the scheduler, executor, metadata database, and workers, providing flexibility and ease of scaling.

Cross-Platform Compatibility

Apache Airflow runs on POSIX-compliant operating systems such as Linux and macOS, and on Windows through WSL 2 or Linux containers. It can be deployed on-premises or in cloud environments.

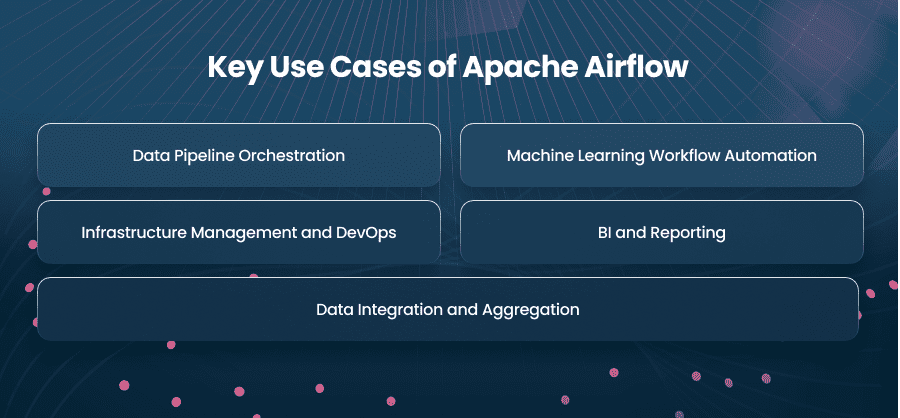

Key Use Cases of Apache Airflow

Because Apache Airflow is a general-purpose platform, it is used in many fields. Here are some prominent use cases:

1. Data Pipeline Orchestration

Airflow is a good fit for ETL and ELT workloads. It orchestrates the key steps: extracting data from source systems, transforming it into a format that suits the business, and loading it into target systems.

This automation helps keep the data that reaches downstream systems consistent and accurate.

Example: A retail company can use Airflow to extract sales data from databases in several regions, transform it into one consistent format, and then load it into a data store for processing.
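The retail example can be sketched as three small functions. This is plain Python rather than an Airflow DAG, and everything in it is hypothetical: the regional rows, the conversion rates, and the in-memory list standing in for the data store. In a real pipeline, each function would typically become its own task.

```python
# Hypothetical raw rows as they might arrive from two regional databases.
REGIONAL_SALES = {
    "eu": [{"sku": "A1", "amount": "10.50", "currency": "EUR"}],
    "us": [
        {"sku": "A1", "amount": "12.00", "currency": "USD"},
        {"sku": "B2", "amount": "7.25", "currency": "USD"},
    ],
}
# Made-up conversion rates, used only to show the transform step.
RATES_TO_USD = {"EUR": 1.10, "USD": 1.00}


def extract(region):
    """Read the raw rows for one region."""
    return list(REGIONAL_SALES[region])


def transform(rows, region):
    """Convert every row to one shared shape and currency."""
    return [
        {
            "region": region,
            "sku": row["sku"],
            "amount_usd": round(float(row["amount"]) * RATES_TO_USD[row["currency"]], 2),
        }
        for row in rows
    ]


def load(rows, warehouse):
    """Append the cleaned rows to the target store and report how many were added."""
    warehouse.extend(rows)
    return len(rows)


warehouse = []
for region in REGIONAL_SALES:
    load(transform(extract(region), region), warehouse)

print(len(warehouse), "rows loaded")
```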

2. Machine Learning Workflow Automation

Airflow can orchestrate machine learning tasks such as data preprocessing, model training, evaluation, and model deployment. Its scheduling ensures these tasks run on time, which makes it possible to retrain and redeploy models continuously.

Example: A financial company can use Airflow to automate the training of fraud detection models, so that the model is retrained every day on the latest transactions.

3. Infrastructure Management and DevOps

Airflow is used in DevOps to automate tasks such as infrastructure creation, configuration, and monitoring. Defining these tasks as DAGs gives organizations consistent and repeatable infrastructure deployments.

Example: A tech company can use Airflow to automate the deployment of its microservices architecture, ensuring each service is configured and deployed in the right sequence.

4. BI and Reporting

Airflow schedules data extraction, transformation, and report creation, and then distributes the reports. This automation helps stakeholders get timely and accurate information.

Example: A marketing firm can use Airflow to produce weekly campaign reports: data is collected from the different campaign platforms, analyzed, and then delivered to clients.

5. Data Integration and Aggregation

With Apache Airflow, you can integrate data from multiple sources into a unified view for analysis. Airflow manages the scheduling and execution of the integration tasks and handles dependencies and retries efficiently.

Example: A healthcare organization can use Airflow to integrate patient data from electronic health records and billing systems into a unified patient profile.

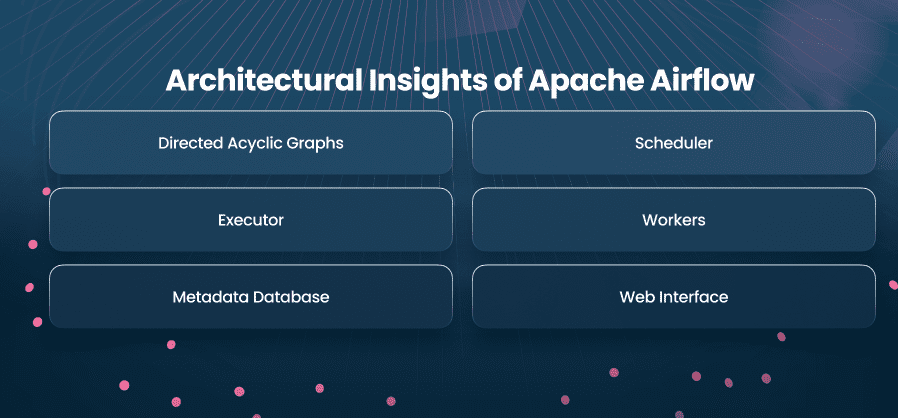

Architectural Insights of Apache Airflow

Understanding Airflow’s architecture is crucial for effective implementation and optimization. The primary components include:

1. Directed Acyclic Graphs

DAGs are the core of Airflow, representing workflows as a collection of tasks with defined dependencies. Each DAG is a Python script that defines the sequence and execution logic of tasks.

Key Points of DAGs

- Dynamic Generation: DAGs are generated dynamically, allowing for complex logic and conditional task execution.

- Modularity: Tasks within DAGs can be modular, promoting reusability and maintainability.

2. Scheduler

The scheduler is responsible for parsing DAGs and scheduling tasks for execution. It determines the order and timing of task execution based on dependencies and schedules defined in the DAGs.

Key Points of Scheduler

- Concurrency Management: The scheduler manages task concurrency, ensuring optimal resource utilization.

- Fault Tolerance: It handles task retries and failures, maintaining the integrity of workflows.
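The scheduling decision described above can be approximated with a small function. This is a simplified model and not Airflow's actual scheduler logic; the function name `runnable_tasks`, the state strings, and the `max_active` cap are assumptions made for this sketch.

```python
def runnable_tasks(upstream, states, max_active):
    """Pick tasks whose upstream tasks all succeeded, up to a concurrency cap.

    upstream:   task -> list of tasks it depends on
    states:     task -> "running", "success" or "failed" (absent = not started)
    max_active: how many tasks may run at the same time
    """
    running = sum(1 for state in states.values() if state == "running")
    free_slots = max(max_active - running, 0)
    ready = [
        task
        for task, deps in upstream.items()
        if states.get(task) is None
        and all(states.get(dep) == "success" for dep in deps)
    ]
    return sorted(ready)[:free_slots]


# Hypothetical DAG: three extracts feed one merge step.
UPSTREAM = {
    "extract_a": [],
    "extract_b": [],
    "extract_c": [],
    "merge": ["extract_a", "extract_b", "extract_c"],
}

first_wave = runnable_tasks(UPSTREAM, {}, max_active=2)
print(first_wave)
```

With a cap of two, only two of the three extract tasks start in the first wave. `merge` stays blocked until every extract task reports success.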

3. Executor

Executors are the mechanism by which task instances get run. Airflow supports various executors, including:

- SequentialExecutor: Executes tasks sequentially, suitable for testing and debugging.

- LocalExecutor: Allows parallel task execution on a single machine.

- CeleryExecutor: Enables distributed task execution across multiple worker nodes.

- KubernetesExecutor: Leverages Kubernetes to run tasks in separate pods, providing scalability and isolation.

Key Points of Executor

- Executor Choice: Selecting the appropriate executor is crucial for performance and scalability.

- Resource Management: Executors manage the allocation of resources for task execution.
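As a rough analogy for the difference between running tasks one by one and running them in parallel on one machine, the sketch below uses Python's `concurrent.futures`. It is not how Airflow's executors are implemented; `run_task` and the task names are placeholders.

```python
import time
from concurrent.futures import ThreadPoolExecutor

TASKS = ["clean", "aggregate", "export"]  # hypothetical, independent tasks


def run_task(name):
    """Stand-in for real work: wait briefly, then report completion."""
    time.sleep(0.05)
    return f"{name}: done"


def run_sequential(tasks):
    """One task at a time, similar in spirit to a sequential executor."""
    return [run_task(task) for task in tasks]


def run_parallel(tasks, workers=3):
    """Several tasks at once on a single machine."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(run_task, tasks))


print(run_sequential(TASKS))
print(run_parallel(TASKS))
```

Both calls return the same results. The parallel version finishes sooner because the independent tasks overlap, and that is the trade-off to weigh when picking an executor.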

4. Workers

Workers are the entities that execute the tasks assigned by the executor. In a distributed setup, multiple workers can run in parallel, enhancing scalability.

Key Points of Workers

- Scalability: Adding more workers can handle increased workloads.

- Isolation: Workers can be isolated to run specific tasks, improving security and resource management.

5. Metadata Database

Airflow uses a metadata database to store information about DAGs, task instances, and their states. This database is central to Airflow’s operation, enabling tracking and monitoring of workflows.

Key Points of Metadata Database

- Persistence: The database ensures the persistence of workflow states across restarts.

- Monitoring: It provides insights into task durations, failures, and overall system health.
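To show why a database makes workflow state durable and queryable, here is a toy state store built on `sqlite3`. The `task_state` table and its columns are invented for this sketch; Airflow's real schema is different and is managed by Airflow itself.

```python
import sqlite3


def open_store(path=":memory:"):
    """Open (or create) a tiny state store. Pass a file path to persist it."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS task_state (
               dag_id TEXT, task_id TEXT, run_id TEXT, state TEXT,
               PRIMARY KEY (dag_id, task_id, run_id))"""
    )
    return conn


def set_state(conn, dag_id, task_id, run_id, state):
    """Record the latest state of one task in one run."""
    conn.execute(
        "INSERT OR REPLACE INTO task_state VALUES (?, ?, ?, ?)",
        (dag_id, task_id, run_id, state),
    )
    conn.commit()


def failed_tasks(conn, dag_id):
    """List the tasks of a DAG that are currently marked as failed."""
    rows = conn.execute(
        "SELECT task_id FROM task_state WHERE dag_id = ? AND state = 'failed' "
        "ORDER BY task_id",
        (dag_id,),
    )
    return [row[0] for row in rows]


conn = open_store()
set_state(conn, "sales_etl", "extract", "run_1", "success")
set_state(conn, "sales_etl", "load", "run_1", "running")
set_state(conn, "sales_etl", "load", "run_1", "failed")  # overwrites "running"
print(failed_tasks(conn, "sales_etl"))
```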

6. Web Interface

Airflow’s web interface offers a user-friendly platform to monitor and manage workflows. Users can view DAGs, track task progress, and access logs through this interface.

Key Points of Web Interface

- Visualization: Provides graphical representations of DAGs and task statuses.

- Interactivity: Allows users to trigger tasks, mark them as successful or failed, and clear task instances.

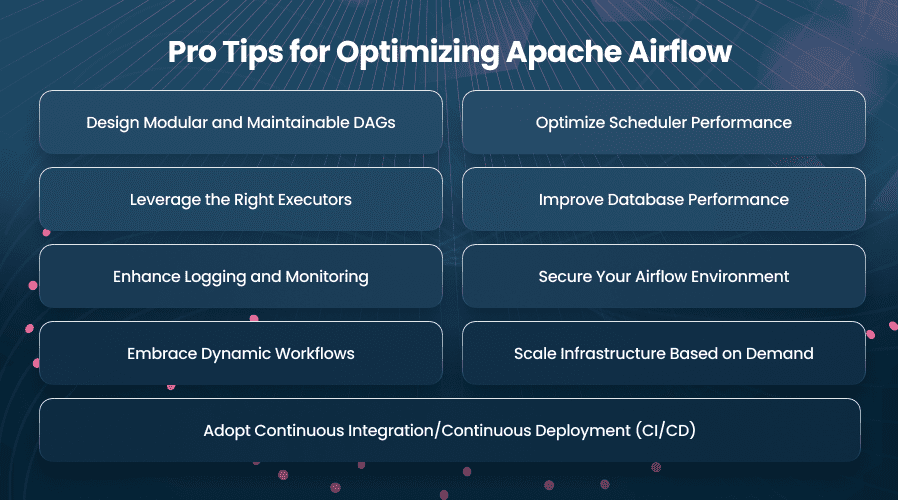

Pro Tips for Optimizing Apache Airflow

To harness the full potential of Apache Airflow, consider the following optimization strategies:

1. Design Modular and Maintainable DAGs

To enhance the readability and efficiency of your workflows:

- Task Grouping: Use TaskGroups to logically group related tasks, making complex workflows more understandable.

- Avoid Overloading DAGs: Break down large workflows into smaller, modular DAGs that handle specific tasks. This reduces complexity and minimizes the impact of failures.

- Parameterization: Make your DAGs reusable by using parameters for tasks that need slight variations.

2. Optimize Scheduler Performance

The scheduler is pivotal for task execution, and optimizing it can significantly improve performance:

- Increase Parallelism: Adjust the parallelism setting in airflow.cfg to allow more concurrent tasks.

- Optimize Task Concurrency: Limit how many task instances and how many DAG runs can be active at the same time for a DAG with the max_active_tasks and max_active_runs settings.

- Log Monitoring: Regularly monitor scheduler logs to identify bottlenecks or errors affecting task scheduling.
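Before tuning, it helps to see what the current limits are. The sketch below parses an `airflow.cfg`-style snippet with the standard library's `configparser`. The option names are the `[core]` settings as I know them from Airflow 2.x, so treat them as an assumption and check the configuration reference for your version. The numbers are purely illustrative.

```python
import configparser

# Illustrative values only; option names assume Airflow 2.x's [core] section.
SAMPLE_CFG = """
[core]
parallelism = 64
max_active_tasks_per_dag = 16
max_active_runs_per_dag = 4
"""

CONCURRENCY_KEYS = (
    "parallelism",
    "max_active_tasks_per_dag",
    "max_active_runs_per_dag",
)


def read_concurrency(cfg_text):
    """Return the concurrency-related settings from config text as integers."""
    parser = configparser.ConfigParser()
    parser.read_string(cfg_text)
    core = parser["core"]
    return {key: core.getint(key) for key in CONCURRENCY_KEYS}


settings = read_concurrency(SAMPLE_CFG)
for key, value in settings.items():
    print(f"{key} = {value}")
```

The two `_per_dag` options act as installation-wide defaults. The `max_active_tasks` and `max_active_runs` arguments mentioned above override them for an individual DAG.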

3. Leverage the Right Executors

Choosing the correct executor based on your workload is critical:

- For Small Workloads: Use LocalExecutor for single-machine environments.

- For Distributed Workflows: Opt for CeleryExecutor to distribute tasks across multiple worker nodes.

- For Containerized Workflows: Use KubernetesExecutor for dynamic, isolated execution in containerized environments.

4. Improve Database Performance

Since Airflow relies heavily on the metadata database:

- Database Scaling: Use a robust database like PostgreSQL or MySQL with optimized configurations.

- Regular Maintenance: Clean up old DAG runs and task instances using Airflow’s database cleanup tools to reduce clutter.

- Indexing: Ensure proper indexing of tables to improve query performance.

5. Enhance Logging and Monitoring

Effective logging and monitoring can streamline debugging and improve reliability:

- Centralized Logging: Integrate with tools like ELK Stack, Splunk, or AWS CloudWatch for centralized log management.

- Task Alerts: Set up alerts for task failures, SLA misses, or long runtimes. This enables faster incident resolution.

- Custom Metrics: Track custom metrics using monitoring tools like Prometheus or Grafana.

6. Secure Your Airflow Environment

Security is vital, especially in production environments:

- Authentication and Authorization: Enable role-based access control (RBAC) and integrate it with an authentication service such as LDAP or OAuth.

- Encryption: Encrypt sensitive values, especially passwords, API keys, and database credentials.

- Network Restrictions: Use VPCs and restrict access to the Airflow web server and workers.

7. Adopt Continuous Integration/Continuous Deployment (CI/CD)

Streamline DAG deployment with modern development practices:

- Version Control: Store all DAGs in a Git repository to track changes and collaborate effectively.

- Automated Testing: Test DAGs for errors, dependencies, and task execution in staging environments before deploying to production.

- Deployment Pipelines: Use tools like Jenkins or GitHub Actions to automate DAG deployment and updates.
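An automated check in a CI pipeline might look something like the sketch below. It validates a dependency mapping for cycles and for references to tasks that were never defined, using only the standard library. The helper names are hypothetical, and a real test suite would load the actual DAG files instead of the hand-written dictionaries used here.

```python
from graphlib import CycleError, TopologicalSorter


def find_cycle(upstream):
    """Return the tasks that form a cycle, or None if the graph is acyclic."""
    try:
        TopologicalSorter(upstream).prepare()
    except CycleError as err:
        return err.args[1]  # list of nodes; first and last are the same task
    return None


def undefined_upstreams(upstream):
    """Return dependencies that are referenced but never defined as tasks."""
    referenced = {dep for deps in upstream.values() for dep in deps}
    return sorted(referenced - set(upstream))


# Hypothetical definitions a CI job could check before deployment.
GOOD = {"extract": [], "transform": ["extract"], "load": ["transform"]}
CYCLIC = {"a": ["b"], "b": ["a"]}
TYPO = {"extract": [], "load": ["transfrom"]}  # misspelled upstream on purpose

print(find_cycle(GOOD))
print(find_cycle(CYCLIC))
print(undefined_upstreams(TYPO))
```

Failing the build when either helper reports a problem keeps broken workflow definitions out of production.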

8. Embrace Dynamic Workflows

Take advantage of Airflow’s Pythonic nature to create flexible workflows:

- Dynamic Task Creation: Generate tasks dynamically based on inputs or configurations.

- Branching and Conditional Execution: Use BranchPythonOperator to define conditional workflows that adapt to runtime parameters.
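Both ideas boil down to ordinary Python. The sketch below builds task names from a configuration list and defines a branching function of the kind you would hand to BranchPythonOperator as its callable, which returns the task ID to follow. The source names, task IDs, and threshold are all made up, and the Airflow operator itself is left out so the snippet runs without Airflow installed.

```python
SOURCES = ["orders", "customers", "payments"]  # hypothetical configuration


def build_upstream(sources):
    """Create one load task per source, all feeding a final 'publish' task."""
    upstream = {f"load_{name}": [] for name in sources}
    upstream["publish"] = sorted(upstream)
    return upstream


def choose_branch(row_count, threshold=1000):
    """Return the ID of the task to run next, based on a runtime value."""
    if row_count >= threshold:
        return "full_refresh"
    return "incremental_load"


graph = build_upstream(SOURCES)
print(graph)
print(choose_branch(row_count=250))
```

Adding a fourth source to `SOURCES` adds a fourth load task without touching the rest of the code. This is the main benefit of generating tasks dynamically.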

9. Scale Infrastructure Based on Demand

As workflows and data volumes grow, ensure your infrastructure scales:

- Horizontal Scaling: Add more worker nodes for distributed task execution.

- Cloud Integration: Use managed cloud services, such as AWS ECS or comparable services on GCP, to handle scaling automatically.

- Resource Monitoring: Continuously monitor CPU, memory, and disk usage to optimize resource allocation.

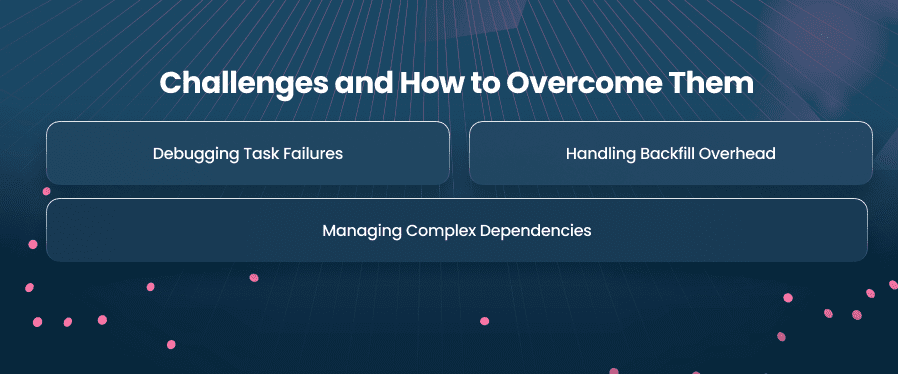

Challenges and How to Overcome Them

1. Debugging Task Failures

Task failures can be hard to diagnose. Start with the detailed logs available in the Airflow UI. In addition, configure task retries so that transient errors recover on their own, and alert the responsible team when a task keeps failing.
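The retry-then-alert pattern can be sketched in a few lines of plain Python. Airflow exposes comparable task-level settings such as `retries` and `retry_delay`; the helper below is only a model of the behavior, and its name, parameters, and the `alerts` list are assumptions for illustration.

```python
import time


def run_with_retries(task, retries=3, base_delay=0.01, on_failure=None):
    """Run task(); retry with exponential backoff, then alert and re-raise."""
    attempt = 0
    while True:
        try:
            return task()
        except Exception as exc:
            attempt += 1
            if attempt > retries:
                if on_failure is not None:
                    on_failure(exc, attempt)  # attempt = total tries made
                raise
            time.sleep(base_delay * 2 ** (attempt - 1))


alerts = []


def always_fails():
    raise RuntimeError("source database unreachable")


try:
    run_with_retries(
        always_fails,
        retries=1,
        base_delay=0,
        on_failure=lambda exc, tries: alerts.append(f"{exc} after {tries} tries"),
    )
except RuntimeError:
    pass

print(alerts)
```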

2. Handling Backfill Overhead

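Backfilling a long history of missed runs can flood the scheduler and workers. Tune the catchup setting for high-frequency DAGs so that missed intervals are not all scheduled at once. Further, use external triggers instead of backfilling when you only need to rerun specific tasks.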
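A quick calculation shows how much work catch-up can create. The sketch below counts the runs that would be scheduled for a DAG that was paused for a day. It models the behavior as I understand it (with catch-up off, only the most recent interval runs) and is not Airflow code; the dates and interval are made up.

```python
from datetime import datetime, timedelta


def missed_intervals(start, now, interval):
    """Return the start time of every complete interval between start and now."""
    runs = []
    current = start
    while current + interval <= now:
        runs.append(current)
        current += interval
    return runs


def runs_to_schedule(start, now, interval, catchup):
    """All missed runs when catching up, otherwise only the latest one."""
    missed = missed_intervals(start, now, interval)
    return missed if catchup else missed[-1:]


start = datetime(2024, 1, 1)
now = datetime(2024, 1, 2)
every_5_min = timedelta(minutes=5)

with_catchup = runs_to_schedule(start, now, every_5_min, catchup=True)
without_catchup = runs_to_schedule(start, now, every_5_min, catchup=False)
print(len(with_catchup), len(without_catchup))  # 288 1
```

One day of downtime on a five-minute schedule means 288 runs to work through. For high-frequency DAGs, it is worth deciding deliberately whether you need all of them.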

3. Managing Complex Dependencies

As DAGs grow, their dependencies become hard to follow. Use task groups to organize related tasks visually and logically. Also avoid long chains of tasks where steps could run independently, because every extra link in a chain delays the tasks after it.

Conclusion

Apache Airflow is an indispensable tool for modern workflow orchestration, capable of transforming how businesses manage and automate processes. By leveraging its rich architecture, adopting best practices, and addressing common challenges, teams can achieve optimized performance and scalability. Airflow continues to evolve with active community support, making it a top choice for data-driven enterprises.