According to industry research, 63% of organizations worldwide plan to adopt AI within the next three years, a sign of how central AI has become to modern business. Large language models (LLMs) such as GPT have opened the door to highly capable applications, from chatbots and content generation to medical research and financial forecasting.

However, no general-purpose model fits every use case perfectly, so businesses often need to customize these models for specific domains. This is where fine-tuning and prompt tuning come in.

Both are strategies for adapting pre-trained models, but they differ in cost, effort, and performance. Fine-tuning retrains a model’s weights on specialized data, while prompt tuning modifies the inputs, or adds a small set of trainable parameters, to guide the model’s behavior.

In this guide, we will discuss both approaches in depth and compare their strengths and weaknesses.

Fine-Tuning

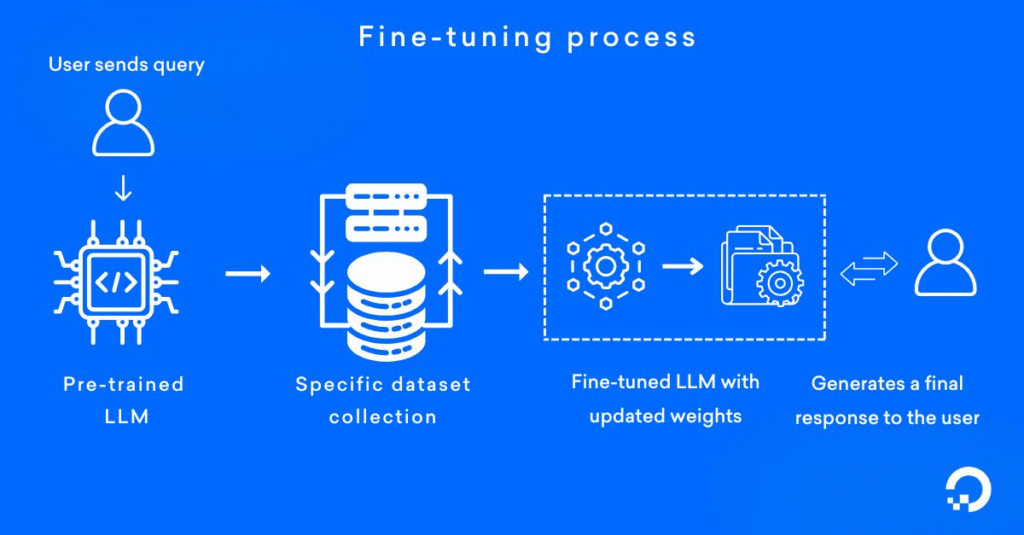

Fine-tuning means continuing to train an already pre-trained model on a narrower, task-specific dataset to improve its performance in a target domain or task. Instead of starting from scratch, developers build on the general language capabilities an LLM already has and adapt them to specialized scenarios.

For example, imagine using a general-purpose LLM for medical diagnosis. While the base model understands natural language, it might not handle clinical notes or patient data well. By fine-tuning it on medical datasets, the model learns to interpret clinical terminology and diagnostic patterns more accurately.

Full fine-tuning updates a large share of the model’s internal parameters, which can number from millions to billions. As a result, the model not only absorbs domain-specific knowledge but also adjusts how it processes inputs, so it performs better in that setting.
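
To make “updating the model’s internal parameters” concrete, here is a toy sketch in Python. A two-weight linear model stands in for a pre-trained LLM (an assumption purely for illustration), and training on a small hypothetical domain dataset updates every parameter, starting from the pre-trained values rather than from scratch. Real LLM fine-tuning uses a deep learning framework and billions of weights, but the principle is the same.

```python
# Toy illustration of full fine-tuning: every parameter of a small
# "pre-trained" linear model is updated by gradient descent on new data.
# The model, data, and learning rate are hypothetical stand-ins for an LLM.


def predict(weights, bias, x):
    """Linear model: dot(weights, x) + bias."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def fine_tune(weights, bias, data, lr=0.05, epochs=1000):
    """Update ALL parameters (weights and bias) with squared-error SGD."""
    weights = list(weights)  # copy so the pre-trained model is left untouched
    for _ in range(epochs):
        for x, y in data:
            error = predict(weights, bias, x) - y
            # Gradient of 0.5 * error**2 w.r.t. each weight is error * x_i
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


# Hypothetical "pre-trained" starting point
pretrained_w, pretrained_b = [0.5, -0.2], 0.0
# Small hypothetical domain dataset generated by y = 2*x0 + 1*x1 + 0.5
domain_data = [([1.0, 0.0], 2.5), ([0.0, 1.0], 1.5), ([1.0, 1.0], 3.5), ([2.0, 1.0], 5.5)]

tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, domain_data)
print([round(w, 2) for w in tuned_w], round(tuned_b, 2))
```

Both weights and the bias move away from their pre-trained values toward the pattern in the new data, which is what distinguishes full fine-tuning from approaches that leave the model’s weights frozen.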

Advantages of Fine-Tuning

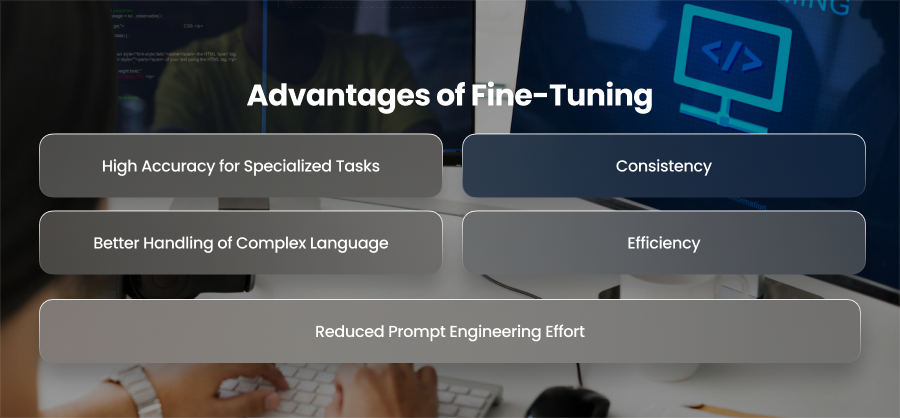

High Accuracy for Specialized Tasks

The primary strength of fine-tuning is domain-specific accuracy. When a model is retrained on carefully curated data from an industry, it learns that industry’s context and subtleties. For instance, a law firm can fine-tune a model to summarize long legal documents, while a healthcare provider can use one to interpret clinical notes and patient histories.

Reduced Prompt Engineering Effort

Without fine-tuning, users often need to design long, complex prompts to get the right output. Fine-tuning reduces this burden by making the model inherently familiar with the task at hand. For example, a well-tuned customer service bot already knows how to communicate effectively and won’t need elaborate instructions to respond politely and helpfully. This makes for a smoother user experience and saves developers time.

Consistency

Fine-tuned models tend to respond more consistently. Rather than producing widely varying outputs for the same input, a well-tuned system yields more predictable, reliable results. This is particularly useful where consistency is essential, such as in technical support documentation.

Better Handling of Complex Language

Specialized fields often use highly technical jargon that general-purpose LLMs may not fully grasp. Fine-tuning helps models master these complex language patterns, enabling them to generate or interpret domain-specific text with precision. For example, in engineering, a fine-tuned model can distinguish between tensile strength and yield strength and apply each correctly in context.

Efficiency

Although fine-tuning requires significant upfront investment, it can pay off over time. Once fine-tuned, the model depends less on external workarounds such as elaborate prompt design or extensive post-processing, which leads to long-term cost savings and operational efficiency. Businesses that own fine-tuned models can also gain a competitive advantage.

Limitations of Fine-Tuning

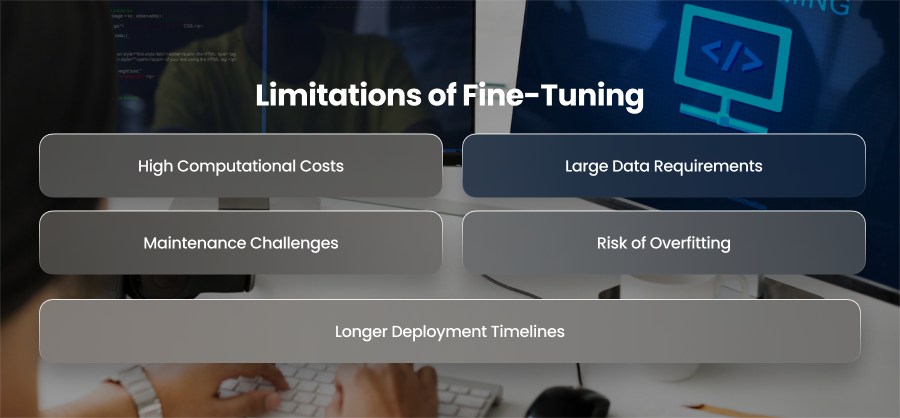

High Computational Costs

Full fine-tuning of a large model means updating billions of parameters, which requires powerful GPUs and extensive compute time. For small and mid-sized companies, this can lead to steep infrastructure costs. Even after training, fine-tuned models demand significant storage and processing power for deployment.
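
A rough way to see why costs climb so quickly is to estimate the memory needed just for the model’s training state. The sketch below assumes a commonly cited rule of thumb for mixed-precision training with the Adam optimizer, about 16 bytes per parameter, and ignores activations and framework overhead, so real requirements are higher.

```python
# Rough GPU-memory estimate for full fine-tuning with the Adam optimizer in
# mixed precision. Assumes the commonly cited ~16 bytes per parameter
# (fp16 weights + fp16 gradients + fp32 master weights + two fp32 Adam moments).
# Activation memory and framework overhead are NOT included.

BYTES_PER_PARAM = {
    "fp16 weights": 2,
    "fp16 gradients": 2,
    "fp32 master weights": 4,
    "fp32 Adam first moment": 4,
    "fp32 Adam second moment": 4,
}


def full_finetune_memory_gb(num_params: int) -> float:
    """Approximate memory (GB, 1 GB = 1e9 bytes) for weights, gradients, and optimizer state."""
    return num_params * sum(BYTES_PER_PARAM.values()) / 1e9


def inference_memory_gb(num_params: int, bytes_per_param: int = 2) -> float:
    """Memory just to hold fp16 weights for serving."""
    return num_params * bytes_per_param / 1e9


for n in (1_000_000_000, 7_000_000_000, 70_000_000_000):
    print(f"{n / 1e9:.0f}B params: ~{full_finetune_memory_gb(n):,.0f} GB to train, "
          f"~{inference_memory_gb(n):,.0f} GB to serve")
```

Under these assumptions, a 7B-parameter model needs roughly 112 GB for its training state alone, more than a single 80 GB GPU can hold, which is why full fine-tuning is often spread across several GPUs.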

Large Data Requirements

To achieve reliable results, fine-tuning typically requires large amounts of high-quality, domain-specific data. Gathering such datasets is both challenging and expensive. For example, a healthcare provider may need thousands of patient records to fine-tune a diagnostic model. Without sufficient data, fine-tuned models can fail to generalize and underperform in real-world applications.
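
Much of that effort goes into preparing the data. The sketch below shows a typical cleaning pass on hypothetical records: dropping incomplete and duplicate examples, holding out a validation set, and writing JSON Lines. The `prompt`/`completion` field names are an assumption for illustration; check the format your training tool expects.

```python
import json
import random

# Hypothetical raw examples; real records would be curated and de-identified.
# The "prompt"/"completion" field names are an assumed format, not a vendor schema.
raw_examples = [
    {"prompt": "Summarize: Patient reports chest pain on exertion.", "completion": "Exertional chest pain."},
    {"prompt": "Summarize: Patient reports chest pain on exertion.", "completion": "Exertional chest pain."},  # duplicate
    {"prompt": "Summarize: No known drug allergies.", "completion": "NKDA."},
    {"prompt": "", "completion": "Empty prompt, should be dropped."},
    {"prompt": "Summarize: BP 150/95 on two visits.", "completion": "Elevated blood pressure on repeat readings."},
]


def clean(examples):
    """Drop records with empty fields and exact duplicates, preserving order."""
    seen = set()
    cleaned = []
    for ex in examples:
        prompt = ex.get("prompt", "").strip()
        completion = ex.get("completion", "").strip()
        if not prompt or not completion:
            continue
        key = (prompt, completion)
        if key in seen:
            continue
        seen.add(key)
        cleaned.append({"prompt": prompt, "completion": completion})
    return cleaned


def split(examples, val_fraction=0.2, seed=0):
    """Shuffle deterministically and hold out at least one validation example."""
    shuffled = examples[:]
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]


def to_jsonl(examples):
    """One JSON object per line (the JSON Lines convention)."""
    return "\n".join(json.dumps(ex) for ex in examples)


train, val = split(clean(raw_examples))
print(len(train), "training examples,", len(val), "validation example(s)")
print(to_jsonl(train))
```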

Maintenance Challenges

Regulations change and industries evolve. Each time significant new information becomes available, a fine-tuned model may need partial or full retraining, which again consumes resources. This makes fine-tuning a less agile solution for fast-changing domains.

Risk of Overfitting

Overfitting happens when a model is fitted so closely to its training data that it loses its ability to handle new, unseen inputs. Fine-tuned models are especially susceptible when the dataset is too small or lacks diversity. For example, a financial AI agent fine-tuned on U.S. tax codes may perform well on those but fail to adapt to international regulations because its training data was too narrow.
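
The usual safeguard is to monitor a held-out validation set during fine-tuning and stop once validation loss stops improving, even if training loss keeps falling. A minimal early-stopping sketch follows; the loss values are made up, and `patience` is a hypothetical setting you would tune.

```python
# Minimal early-stopping check: stop once validation loss has not improved for
# `patience` consecutive evaluations, and keep the best checkpoint.
# The loss values below are made-up numbers for illustration only.


def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return the index of the best epoch, stopping after `patience` epochs without improvement."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch


# Training loss keeps falling, but validation loss turns upward after epoch 3:
# the classic signature of overfitting to a narrow dataset.
train_losses = [1.20, 0.85, 0.60, 0.42, 0.30, 0.21, 0.15]
val_losses = [1.25, 0.95, 0.80, 0.78, 0.83, 0.91, 1.02]

best = early_stopping_epoch(val_losses, patience=2)
print(f"Keep checkpoint from epoch {best}; train/val gap there = "
      f"{val_losses[best] - train_losses[best]:.2f}")
```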

Longer Deployment Timelines

Compared to prompt tuning, fine-tuning lengthens time to market considerably. The process includes dataset collection, data cleaning, training, and evaluation, and the data work alone can take weeks or months. This can be a serious drawback for businesses that need quick prototyping or want to stay ahead in fast-moving industries.

Prompt Tuning

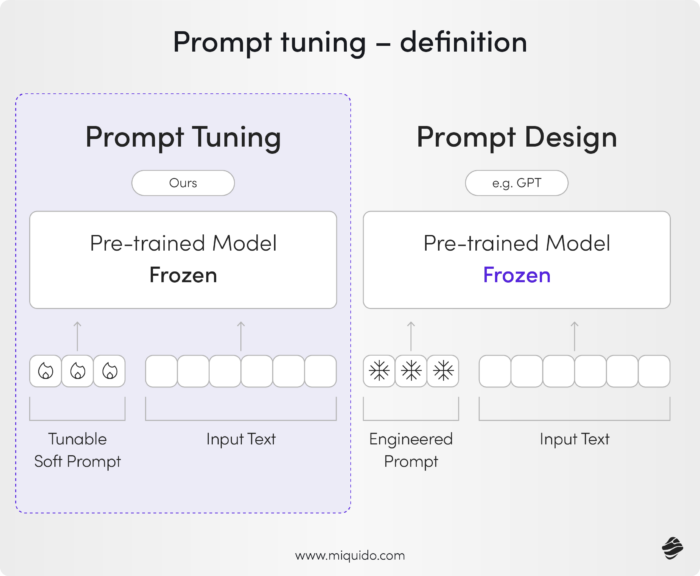

Prompt tuning is a lightweight alternative to fine-tuning that focuses on optimizing the inputs to a model rather than altering its underlying parameters. Instead of retraining billions of weights, prompt tuning changes how instructions are framed or adds small trainable embeddings that steer the model’s behavior toward a specific task. This makes it more resource-friendly and accessible to organizations with limited AI infrastructure.

In its simplest form, often called prompt design or prompt engineering, this means carefully crafting the text prompts fed into a model. The more explicit the instructions, the more closely the model’s output tends to match the user’s expectations.
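
A minimal Python sketch of such an explicit prompt follows. The template structure (role, task, constraints, output format) is one common pattern rather than a required standard, and the bookstore scenario is hypothetical.

```python
# A hand-written ("hard") prompt template: explicit role, task, constraints,
# and output format. The wording and field names are illustrative assumptions.

PROMPT_TEMPLATE = """You are a {role}.
Task: {task}
Constraints:
{constraints}
Respond in this format: {output_format}

Input:
{user_input}"""


def build_prompt(role, task, constraints, output_format, user_input):
    """Fill the template; constraints become a bulleted list."""
    return PROMPT_TEMPLATE.format(
        role=role,
        task=task,
        constraints="\n".join(f"- {c}" for c in constraints),
        output_format=output_format,
        user_input=user_input,
    )


prompt = build_prompt(
    role="customer support assistant for an online bookstore",
    task="Answer the customer's question politely and concisely.",
    constraints=["Use at most three sentences.", "Do not promise refunds."],
    output_format="plain text",
    user_input="My order hasn't arrived yet. What should I do?",
)
print(prompt)
```

Keeping the template separate from the filled-in values makes it easy to revise the wording and re-test without touching the rest of the application.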

A more advanced approach, soft prompt tuning, trains a small set of additional vectors (the soft prompt) that is prepended to the embeddings of every input. These learned vectors act as guiding context for the model, improving performance on domain-specific tasks without touching the core model weights. Whereas fine-tuning may alter billions of parameters, soft prompt tuning typically trains only thousands to a few hundred thousand values, a tiny fraction of the model’s size, which makes it highly efficient.
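
The sketch below shows the mechanism with toy sizes: a small trainable matrix is placed in front of the frozen token embeddings, and only that matrix would be updated during training. The dimensions are hypothetical, and the final lines estimate the trainable share for an assumed 7B-parameter model with hidden size 4096 and a 20-token soft prompt.

```python
import random

# Toy sizes (hypothetical); real models use hidden sizes in the thousands.
EMBED_DIM = 8       # hidden size of the frozen model
PROMPT_LENGTH = 4   # number of "virtual tokens" in the soft prompt
VOCAB_SIZE = 10

rng = random.Random(0)
# Frozen part: a token-embedding table that is never updated.
frozen_embeddings = [[rng.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in range(VOCAB_SIZE)]
# Trainable part: the soft prompt, PROMPT_LENGTH x EMBED_DIM values.
# Training would update only these values (initialized here to small random numbers).
soft_prompt = [[rng.uniform(-0.5, 0.5) for _ in range(EMBED_DIM)] for _ in range(PROMPT_LENGTH)]


def build_model_input(token_ids):
    """Sequence the frozen model sees: soft prompt vectors followed by token embeddings."""
    return soft_prompt + [frozen_embeddings[t] for t in token_ids]


def trainable_share(prompt_length, embed_dim, total_model_params):
    """Number of soft-prompt values and their fraction of the full model's parameters."""
    prompt_params = prompt_length * embed_dim
    return prompt_params, prompt_params / total_model_params


seq = build_model_input([3, 1, 4])
print(len(seq), "positions")  # 4 virtual tokens + 3 real tokens = 7

# Assumed LLM-scale example: 20 virtual tokens, hidden size 4096, 7B parameters.
n, frac = trainable_share(20, 4096, 7_000_000_000)
print(f"{n:,} trainable values, {frac:.4%} of the model")
```

In a real setup, a deep learning framework would run the frozen model’s forward and backward passes and apply gradient updates only to the soft prompt.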

Advantages of Prompt Tuning

Cost-Effective Customization

Because prompt tuning modifies only small sets of prompt embeddings or refines textual instructions, it significantly lowers storage and processing costs. Startups and mid-sized companies can therefore deploy tailored AI solutions without expensive hardware or large cloud budgets. For example, a small marketing agency can prompt tune a base LLM to generate ad copy for multiple industries.

Rapid Deployment and Iteration

Prompt tuning enables teams to prototype and deploy AI solutions quickly, sometimes within hours or days. Since adjustments can be made by simply refining prompts, businesses can experiment freely with different use cases. For instance, a retail company could quickly adapt its chatbot for a holiday campaign by tuning prompts instead of launching a long fine-tuning project.

Data Efficiency

Prompt tuning typically requires far less data than fine-tuning. Instead of large volumes of domain-specific examples, a smaller curated dataset can be enough to teach the model the desired behavior. This makes it particularly valuable in industries where data is scarce or expensive to acquire, such as healthcare or law, where proprietary case data may not be publicly available.

Multi-Domain Flexibility

One of the most powerful advantages of prompt tuning is the ability to use a single model across multiple domains. Different prompts or soft embeddings can tailor the same model for customer service, content summarization, or other tasks, without a separate fine-tuned model for each. This is ideal for enterprises serving diverse markets. For example, a SaaS company can provide customized AI support to clients in finance and education using the same base model, swapping in domain-specific prompts for each.
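
The sketch below illustrates the pattern with textual prompts: one base model, with a domain-specific prefix chosen per client. `call_base_model` is a hypothetical placeholder rather than a real API, and with soft prompt tuning each entry in `DOMAIN_PROMPTS` would be a small learned embedding matrix instead of text.

```python
# One shared base model, many lightweight domain adapters. Here the adapters
# are instruction prefixes; with soft prompt tuning they would be small learned
# embedding matrices instead. `call_base_model` is a hypothetical stand-in for
# whatever inference API you actually use.

DOMAIN_PROMPTS = {
    "finance": "You are a financial support assistant. Cite the relevant account policy.",
    "education": "You are a tutoring assistant. Explain step by step for a student.",
}


def call_base_model(prompt: str) -> str:
    """Hypothetical placeholder: echoes the first line instead of calling a real LLM."""
    return f"[base model answered using: {prompt.splitlines()[0]}]"


def answer(domain: str, question: str) -> str:
    """Pick the client's domain prompt and send it, with the question, to the same base model."""
    try:
        prefix = DOMAIN_PROMPTS[domain]
    except KeyError:
        raise ValueError(f"No prompt registered for domain {domain!r}") from None
    return call_base_model(f"{prefix}\n\nQuestion: {question}")


print(answer("finance", "Why was I charged a late fee?"))
print(answer("education", "How do I find the slope of a line?"))
```

Adding a new market then means registering one more prompt, not training and hosting another model.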

Lower Technical Barriers

Because prompt tuning often relies on well-crafted textual instructions or small-scale training of embeddings, it generally requires less machine learning expertise than full fine-tuning. Teams with limited AI backgrounds can still make meaningful adaptations using prompt engineering tools and libraries.

Limitations of Prompt Tuning

Limited Depth of Customization

Rather than changing a model’s underlying parameters, prompt tuning improves the cues that steer its behavior. This works well for surface-level adjustments but cannot substantially change how the model processes information internally. As a result, industries that require deep domain-specific reasoning may find that prompt tuning falls short of fine-tuning.

Performance Plateau in Complex Tasks

For simpler tasks such as text classification or summarization, prompt tuning can perform quite well. In highly specialized use cases, however, the gains often plateau quickly, and the model may struggle to reach the precision or accuracy those contexts require without fine-tuning its internal weights.

Dependence on the Model’s Pre-Trained Knowledge

Prompt tuning relies heavily on the base model’s pre-trained capabilities. If the original model was never exposed to certain niche concepts or data, prompt tuning alone cannot fill that gap. For example, if the model has had minimal exposure to the technical jargon of petroleum engineering, no amount of prompt optimization will yield fully reliable results.

Risk of Prompt Fragility

Prompt-tuned models can be overly sensitive to small changes in input phrasing. A slight variation in wording or sentence structure may drastically alter output quality. This fragility can make real-world deployment challenging, especially in user-facing applications where natural language input is unpredictable.
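
One practical mitigation is to test a prompt-tuned model against paraphrases of the same request before deployment and measure how often the outputs agree. In the sketch below, `toy_model` is a deliberately brittle stand-in (a hypothetical keyword classifier), not a real model.

```python
# Sketch of a prompt-robustness check: send paraphrases of the same request
# through the model and measure how often the answers agree. `toy_model` is a
# deliberately brittle, hypothetical stand-in for a prompt-tuned model.
from collections import Counter


def toy_model(text: str) -> str:
    """Pretend classifier that keys on one exact word, which makes it fragile."""
    return "refund_request" if "refund" in text.lower() else "other"


def agreement_rate(model, paraphrases):
    """Fraction of paraphrases that get the most common answer (1.0 = fully consistent)."""
    outputs = [model(p) for p in paraphrases]
    most_common_count = Counter(outputs).most_common(1)[0][1]
    return most_common_count / len(outputs)


paraphrases = [
    "I want a refund for my order.",
    "Can I get a refund?",
    "Please give me my money back.",
    "I'd like to return this and be reimbursed.",
]
print(f"Agreement: {agreement_rate(toy_model, paraphrases):.0%}")
```

Here two of the four paraphrases, all of which are refund requests, get a different label, giving 50% agreement; a score like this signals that the prompt needs more work before it faces real users.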

Less Effective for Multi-Modal Tasks

While prompt tuning works well for text-based tasks, its effectiveness decreases with multi-modal inputs or with outputs that require a strict structure. Fine-tuned models often outperform in these areas because they adapt their internal representations more thoroughly.

Differences Between Fine-Tuning and Prompt Tuning

| Aspect | Fine-Tuning | Prompt Tuning |
| --- | --- | --- |
| Data Requirement | Requires large amounts of high-quality labeled data. | Works effectively with smaller datasets. |
| Training Cost | Computationally expensive; requires more time and resources. | Cost-efficient; requires less computation and storage. |
| Model Size Impact | Produces a separate copy of the model for each use case. | Keeps the original model intact, adding only lightweight prompt parameters. |
| Flexibility | Highly adaptable for specialized and complex tasks. | Best for narrow, well-defined tasks or quick experimentation. |
| Performance | Typically offers higher accuracy and reliability in specialized domains. | May not match fine-tuning accuracy on highly complex or nuanced tasks. |
| Scalability | Harder to scale, since each use case needs its own fine-tuned version. | Easily scalable by swapping or reusing prompts across tasks. |
| Maintenance | Requires ongoing retraining when the data or domain shifts. | Easier to maintain, since prompts can be updated quickly. |
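
The “Model Size Impact” and “Scalability” rows can be made concrete with rough storage arithmetic. The sketch assumes fp16 storage (2 bytes per value), a 7B-parameter base model, and 20-token soft prompts with hidden size 4096; all of these are illustrative assumptions.

```python
# Back-of-the-envelope storage comparison for serving many tasks.
# Assumptions (illustrative only): fp16 storage at 2 bytes per value,
# a 7B-parameter base model, and 20-token soft prompts with hidden size 4096.
BYTES_PER_VALUE = 2
BASE_PARAMS = 7_000_000_000
PROMPT_PARAMS = 20 * 4096


def storage_gb(num_tasks: int):
    """Return (fine-tuning GB, prompt-tuning GB) for `num_tasks` task-specific variants."""
    # Fine-tuning: one full model copy per task.
    fine_tuned = num_tasks * BASE_PARAMS * BYTES_PER_VALUE / 1e9
    # Prompt tuning: one shared base model plus one small soft prompt per task.
    prompt_tuned = (BASE_PARAMS + num_tasks * PROMPT_PARAMS) * BYTES_PER_VALUE / 1e9
    return fine_tuned, prompt_tuned


for tasks in (1, 10, 100):
    ft, pt = storage_gb(tasks)
    print(f"{tasks:>3} tasks: fine-tuned copies ~{ft:,.1f} GB "
          f"vs. one base model + prompts ~{pt:,.1f} GB")
```

Under these assumptions, 100 fine-tuned copies would take about 1,400 GB, while one shared base model plus 100 soft prompts stays at roughly 14 GB.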

When to Use Fine-Tuning?

Domain-Specific Applications

If your project requires expert-level knowledge in a specific field, fine-tuning lets the model adapt deeply to that domain.

High Accuracy Requirements

In mission-critical environments where errors can have significant consequences, fine-tuned models generally provide more reliable results.

Large Data Availability

When you have access to a substantial volume of high-quality data, fine-tuning lets the model learn complex, domain-specific patterns.

Complex, Multi-Layered Tasks

Tasks that require nuanced reasoning or multi-step decision-making are better handled by fine-tuned models.

When to Use Prompt Tuning?

Limited Resources

If you don’t have large datasets or expensive computing infrastructure, prompt tuning offers a practical alternative.

Rapid Prototyping

When testing ideas or developing proofs of concept, prompt tuning lets you validate performance without heavy investment.

Dynamic Use Cases

In fast changing environments, prompt tuning allows quick updates to keep responses relevant.

Scalability Across Teams

Organizations can maintain a single large model while attaching lightweight prompts for different business units or clients, making scaling more efficient.

Final Word

Fine-tuning and prompt tuning each offer distinct strengths, depending on your AI goals and scalability needs. Prompt tuning saves money and allows quick adjustments, while fine-tuning adds depth and accuracy. By thoroughly assessing their use cases, businesses can find the right balance between the two and get lasting value from AI.