Spring Boot is the main framework technology used by 62% of developers polled for the Java Developer Productivity Survey. It has become one of the most powerful frameworks for creating Java applications, known for its ease of setup and microservices-friendly design.

Meanwhile, AI has changed how businesses develop digital products and engage with consumers. It now serves as the basis for a variety of modern applications, from chatbots to complex document retrieval systems.

Now, imagine combining AI with Spring Boot. The result? Applications that are not only reliable and scalable but also capable of intelligent reasoning and personalized interactions.

In this guide, we will explore why AI integration with Spring Boot is essential, the role of Spring Boot in chatbot development, and how it powers retrieval-augmented generation (RAG) systems.

Why Integrate AI with Spring Boot?

Spring Boot has long been a trusted framework for building enterprise-grade Java applications. It is a preferred option for companies all over the world because of its microservices-friendly architecture and its support for rapid development of production-ready apps.

By combining AI with Spring Boot, companies can develop systems that are not only scalable and dependable but also intelligent and adaptable.

Meeting Modern User Expectations

Today's users expect applications that are smart enough to understand intent and provide context. For example, customers want personalized suggestions and the ability to ask questions in natural language, not just product lists. Spring Boot applications enhanced with AI can meet this expectation by bridging backend systems with advanced AI capabilities.

Bridging AI Models with Enterprise Systems

Although AI models like LLaMA and GPT are powerful, they require an orchestration layer to communicate with business data and connect to existing infrastructure. Spring Boot excels at this orchestration:

- It can expose APIs for communicating with AI services.

- It integrates easily with databases and other business systems.

- It lets AI-generated outputs be enriched with business logic and domain context.

Scalability for AI Workloads

AI-enhanced applications often face fluctuating workloads, such as spikes in chatbot queries or analytics-heavy operations. When deployed on container orchestration platforms like Kubernetes, Spring Boot applications can scale up or down dynamically to meet demand, helping maintain reliable performance without paying for idle capacity.

Security and Governance

Protecting data privacy and enforcing governance are among the main obstacles to AI adoption. AI models frequently interact with private documents, sensitive data, and consumer information. With Spring Security, which it integrates through a dedicated starter, Spring Boot offers a solid basis for implementing authentication and data security.

Cost Optimization

Training and running AI models can be resource intensive. However, with Spring Boot as the middleware, businesses gain the flexibility to choose between:

- Hosted AI APIs for quick integration.

- Self-hosted open-source models for better cost control and privacy.

- Hybrid setups where sensitive queries are handled internally while others use external APIs.
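The hybrid option can be sketched in plain Java. This is a minimal illustration, not a prescribed design: `HybridModelRouter`, its patterns, and the two `Function` stand-ins for the internal and hosted models are all hypothetical names chosen for this example. In a Spring Boot application the router would typically be a bean with real model clients injected.

```java
import java.util.List;
import java.util.function.Function;
import java.util.regex.Pattern;

/** Hypothetical router: sensitive prompts stay on an internal model, others go to a hosted API. */
class HybridModelRouter {
    // Illustrative patterns only (assumption); real systems need their own data-classification rules.
    private static final List<Pattern> SENSITIVE = List.of(
            Pattern.compile("\\b\\d{12,19}\\b"), // long digit runs, e.g. card numbers
            Pattern.compile("(?i)\\b(account number|ssn|diagnosis)\\b"));

    private final Function<String, String> internalModel; // stand-in for a self-hosted model client
    private final Function<String, String> hostedModel;   // stand-in for a hosted AI API client

    HybridModelRouter(Function<String, String> internalModel,
                      Function<String, String> hostedModel) {
        this.internalModel = internalModel;
        this.hostedModel = hostedModel;
    }

    /** Returns true when any sensitive pattern occurs in the prompt. */
    boolean isSensitive(String prompt) {
        return SENSITIVE.stream().anyMatch(p -> p.matcher(prompt).find());
    }

    /** Routes the prompt to the internal model if it looks sensitive, otherwise to the hosted one. */
    String complete(String prompt) {
        return isSensitive(prompt) ? internalModel.apply(prompt) : hostedModel.apply(prompt);
    }
}
```

Keeping the routing decision in one class means the sensitivity rules can change without touching the controllers or the model clients.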

Enabling Diverse Use Cases Across Industries

The integration of AI with Spring Boot isn’t limited to a single use case; it spans industries:

- Banking & Finance: Fraud detection, automated advisors, customer chatbots.

- Healthcare: Patient support systems, medical knowledge retrieval using RAG.

- Retail & eCommerce: Personalized recommendations, conversational shopping assistants.

- Education: AI tutors, intelligent grading systems, and content search engines.

- Enterprise Operations: Document summarization, knowledge management, and employee helpdesks.

What is the Role of Spring Boot in AI Chatbot Development?

Middleware Between Users and AI Models

Spring Boot acts as the bridge between end users and AI services. When a user sends a message to a chatbot, a Spring API handles the request first. After validating the input, this API sends the question to an AI model, such as OpenAI’s GPT. Once the AI has produced a response, Spring Boot can enrich it with business data before returning it to the user. This process helps keep conversations consistent with business logic as well as intelligent.
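The validate, call, and enrich flow might look roughly like the sketch below. Everything named here is an assumption for illustration: `ChatPipeline`, the 2000-character limit, the `Function` standing in for the AI provider call, and the map standing in for a business data source. In a real application a controller would call `handle` and the dependencies would be injected beans.

```java
import java.util.Map;
import java.util.function.Function;

/** Hypothetical chat pipeline: validate input, call the model, enrich the reply with business data. */
class ChatPipeline {
    private static final int MAX_LENGTH = 2000; // assumed limit; tune per model and use case

    private final Function<String, String> model;        // stand-in for the call to an AI provider
    private final Map<String, String> orderStatusByUser; // stand-in for a business data source

    ChatPipeline(Function<String, String> model, Map<String, String> orderStatusByUser) {
        this.model = model;
        this.orderStatusByUser = Map.copyOf(orderStatusByUser);
    }

    String handle(String userId, String message) {
        // 1. Validate the input before spending a model call on it.
        if (message == null || message.isBlank()) {
            throw new IllegalArgumentException("Message must not be empty");
        }
        if (message.length() > MAX_LENGTH) {
            throw new IllegalArgumentException("Message is too long");
        }
        // 2. Send the cleaned question to the AI model.
        String reply = model.apply(message.strip());
        // 3. Enrich the reply with business data when some exists for this user.
        String status = (userId == null) ? null : orderStatusByUser.get(userId);
        return status == null ? reply : reply + "\nLatest order status: " + status;
    }
}
```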

Multi Channel Integration for Chatbots

Modern businesses must meet customers where they are, whether on a website or on platforms like Slack. Spring Boot simplifies this challenge by exposing chatbot services as RESTful APIs or through connectors that different platforms can consume. This allows organizations to build a single AI-powered backend that can serve multiple communication channels simultaneously, ensuring consistent experiences without duplicating code.

Managing Conversation State and Context

AI models alone do not maintain long-term memory of interactions. For meaningful chatbot experiences, state management is crucial. By working with data stores like Redis, Spring Boot can save user sessions and past interactions. By passing this stored history back to the AI model with each new message, the chatbot can recall previous exchanges and give better-tailored answers throughout a conversation.
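As a rough sketch of this idea, the class below keeps a bounded history per session and replays it in front of each new message. It is an in-memory stand-in written for illustration: `ConversationMemory`, `ChatTurn`, and the `role: content` prompt format are assumptions, and a production setup would more likely persist the turns in Redis or another store.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** One message in a conversation; role is e.g. "user" or "assistant" (assumed convention). */
record ChatTurn(String role, String content) {}

/** Hypothetical in-memory session store that keeps only the most recent turns per session. */
class ConversationMemory {
    private final int maxTurns;
    private final Map<String, List<ChatTurn>> sessions = new ConcurrentHashMap<>();

    ConversationMemory(int maxTurns) {
        if (maxTurns < 1) throw new IllegalArgumentException("maxTurns must be at least 1");
        this.maxTurns = maxTurns;
    }

    /** Appends a turn and drops the oldest ones beyond maxTurns; stored lists are immutable snapshots. */
    void append(String sessionId, ChatTurn turn) {
        sessions.merge(sessionId, List.of(turn), (existing, added) -> {
            List<ChatTurn> all = new ArrayList<>(existing);
            all.addAll(added);
            int from = Math.max(0, all.size() - maxTurns);
            return List.copyOf(all.subList(from, all.size()));
        });
    }

    List<ChatTurn> history(String sessionId) {
        return sessions.getOrDefault(sessionId, List.of());
    }

    /** Builds a prompt that replays the stored history before the new user message. */
    String buildPrompt(String sessionId, String newMessage) {
        StringBuilder prompt = new StringBuilder();
        for (ChatTurn turn : history(sessionId)) {
            prompt.append(turn.role()).append(": ").append(turn.content()).append('\n');
        }
        return prompt.append("user: ").append(newMessage).toString();
    }
}
```

Capping the history is a deliberate choice here: it keeps prompts within the model's context window and bounds memory use per session.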

Integration with Business Logic and Data Sources

A chatbot must often go beyond providing generic answers. Businesses need their chatbots to interact with core systems. A banking chatbot may need to retrieve account balances securely, for example. Spring Boot offers the tools needed to integrate with these backend services so that AI replies are enriched with accurate, real-time business data.

Reliability in Production

Enterprises running chatbots at scale need systems that can manage thousands of concurrent queries while maintaining high availability. Deployed with Docker and Kubernetes, Spring Boot chatbot services gain scalability and resilience. Built-in monitoring with Spring Boot Actuator lets teams track and tune performance in real time, making the stack suitable for mission-critical chatbot applications.

How Can You Use Spring Boot With RAG Systems?

Retrieval-Augmented Generation (RAG) is a powerful method that combines the strengths of information retrieval and natural language generation. By integrating Spring Boot with RAG systems, businesses can create intelligent applications that provide contextual, real-time answers. Here’s how Spring Boot becomes a strong foundation for building RAG-powered solutions.

Building Microservices for Retrieval

Spring Boot is excellent for building microservices, which makes it ideal for structuring the retrieval part of a RAG system. Developers can create Spring Boot services dedicated to:

- Querying vector databases.

- Indexing documents to keep the knowledge base up to date.

- Fetching contextual data from APIs or file storage.
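To make the vector-query step concrete, here is a small in-memory sketch of what a vector database does conceptually: rank stored documents by cosine similarity to a query embedding and return the top matches. `InMemoryVectorIndex` and `EmbeddedDocument` are hypothetical names, and the embeddings are assumed to come from some embedding model that is not shown. A real retrieval service would delegate this to the vector database's own client.

```java
import java.util.Comparator;
import java.util.List;

/** A document together with its precomputed embedding (assumed to come from an embedding model). */
record EmbeddedDocument(String id, String text, double[] embedding) {}

/** Hypothetical brute-force index; real vector databases use approximate search at scale. */
class InMemoryVectorIndex {
    private final List<EmbeddedDocument> documents;

    InMemoryVectorIndex(List<EmbeddedDocument> documents) {
        this.documents = List.copyOf(documents);
    }

    /** Cosine similarity of two vectors; returns 0 when either vector has zero length. */
    static double cosine(double[] a, double[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("Embedding dimensions differ");
        }
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        if (normA == 0 || normB == 0) return 0;
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    /** Returns up to k documents, most similar to the query embedding first. */
    List<EmbeddedDocument> topK(double[] query, int k) {
        return documents.stream()
                .sorted(Comparator.comparingDouble(
                        (EmbeddedDocument d) -> cosine(query, d.embedding())).reversed())
                .limit(k)
                .toList();
    }
}
```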

API Layer for Orchestrating Retrieval

In a RAG system, orchestration is key. Spring Boot can provide the API layer that connects the retrieval engine and the generative model. When a user query comes in, Spring Boot handles the request, asks the retrieval service for relevant context, and then forwards the query together with that context to the LLM for the final output. This flow keeps the steps coordinated without exposing backend complexity to the client.
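A minimal sketch of that orchestration step follows. `RagOrchestrator`, the prompt wording, and the two `Function` parameters (one standing in for the retrieval service, one for the LLM call) are illustrative assumptions rather than a fixed API.

```java
import java.util.List;
import java.util.function.Function;

/** Hypothetical orchestrator: retrieve context for a question, then ask the LLM with that context. */
class RagOrchestrator {
    private final Function<String, List<String>> retriever; // stand-in for the retrieval service
    private final Function<String, String> llm;             // stand-in for the generative model call

    RagOrchestrator(Function<String, List<String>> retriever, Function<String, String> llm) {
        this.retriever = retriever;
        this.llm = llm;
    }

    String answer(String question) {
        List<String> passages = retriever.apply(question);
        return llm.apply(buildPrompt(question, passages));
    }

    /** Numbers each retrieved passage so the model (and later, the logs) can refer to it. */
    static String buildPrompt(String question, List<String> passages) {
        StringBuilder prompt =
                new StringBuilder("Answer using only the context below.\n\nContext:\n");
        for (int i = 0; i < passages.size(); i++) {
            prompt.append('[').append(i + 1).append("] ").append(passages.get(i)).append('\n');
        }
        return prompt.append("\nQuestion: ").append(question).toString();
    }
}
```

Because the client only ever calls `answer`, the retrieval backend or the model can be swapped without changing the public API.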

Managing Data Pipelines for Knowledge Bases

RAG systems rely on high quality, up to date knowledge bases. With Spring Boot, developers can build pipelines to ingest and store data. For example:

- Scheduled jobs can fetch new documents.

- Integration with message brokers like Kafka enables near-real-time updates.

- REST endpoints allow easy document uploads into the knowledge base.

Security and Access Control

When dealing with sensitive knowledge bases, security is non-negotiable. Spring Boot integrates with Spring Security for authentication and authorization and supports TLS for encrypting traffic to its APIs. This helps ensure that only authorized users and applications can access retrieval or generation capabilities.

Scalability for High Traffic Applications

RAG applications often serve enterprise traffic, requiring systems to handle thousands of queries per second. Spring Boot services run well in containers under an orchestrator, which makes horizontal scaling straightforward. For example, to absorb spikes in requests, multiple instances of a retrieval microservice can run concurrently and share the load.

Monitoring

RAG workflows involve multiple moving parts, including retrievers and language models. Spring Boot integrates with monitoring tools like Prometheus and Grafana through Actuator and Micrometer. This helps track API performance, query latency, and custom metrics such as retrieval quality in real time, keeping the system reliable and easier to debug.

Technical Architecture for AI Integration in Spring Boot

Client Interaction Layer

The first entry point for any AI powered Spring Boot system is the client interface. This might be a website’s chatbot user interface or an organizational dashboard. GraphQL endpoints or REST APIs are used to transmit requests from the client to the Spring Boot backend.

- Chatbots: The user types a question in natural language.

- RAG systems: The user submits a query that needs document retrieval before generating a response.

- Enterprise apps: The client triggers an action where AI enhances decision making.

API Gateway and Request Handling

Spring Boot applications often include an API gateway or dedicated controller layer to handle incoming requests. This is where validation, authentication, and routing occur. For example:

- Incoming chatbot queries are validated and associated with a user session.

- Queries are authenticated using Spring Security with OAuth2 or JWT.

- The API gateway routes the request to the right microservice for retrieval or response generation.

Retrieval and Knowledge Layer

For RAG systems, retrieval is crucial. Spring Boot can act as the orchestrator that connects with external knowledge bases or APIs.

- Document Ingestion Pipelines: Built with Spring Batch or scheduled tasks (`@Scheduled`) to keep the knowledge base updated.

- Vector Databases: Integration with Pinecone or Weaviate for semantic search.

- Enterprise Data Sources: Secure connections to CRMs or document storage systems.

AI Model Integration Layer

The AI model layer is where natural language understanding and generation occur. Since Spring Boot doesn’t natively run AI models, integration happens via:

- External APIs: Connecting with OpenAI or Hugging Face models through REST calls.

- On-Prem Models: Using PyTorch or TensorFlow models that are deployed in containers and exposed through REST APIs.

- Hybrid Setup: A mix of local and cloud-based models for performance and cost optimization.

Processing and Business Logic Layer

This layer is where Spring Boot shines. After retrieving model responses, developers can apply custom business logic:

- Post-processing responses: Cleaning or formatting AI generated text.

- Applying rules: Ensuring compliance with industry standards.

- Decision making: Triggering workflows or updating databases based on AI outputs.

Response Delivery Layer

Once the AI output is processed, it must be delivered back to the client in real time. This layer includes:

- REST/GraphQL Responses: Standard APIs returning structured results.

- WebSocket Streams: For live chatbot conversations or streaming RAG results.

- Integration Hooks: Sending AI enhanced data to third party systems like Salesforce.

Deployment Layer

For production ready AI systems, scalability is non negotiable. Spring Boot supports:

- Containerization: Using Docker to package services.

- Orchestration: Using Kubernetes to deploy and autoscale microservices for AI inference and retrieval.

- Cloud Integration: Using cloud platforms such as AWS for storage and scalable infrastructure.

Best Practices for Integrating AI in Spring Boot

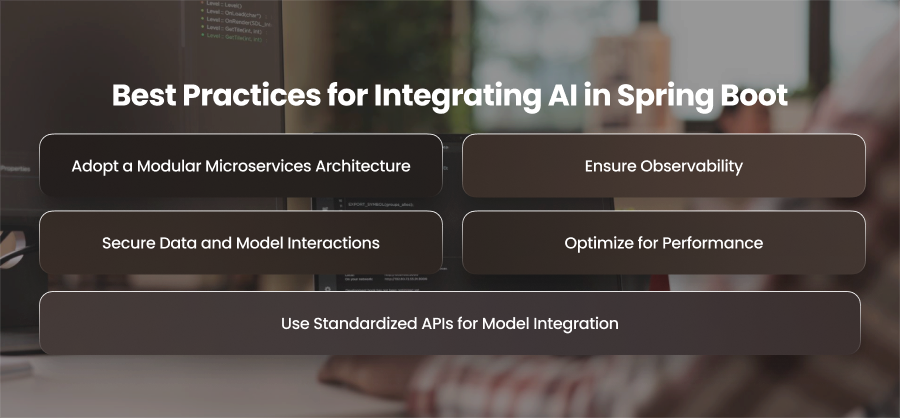

Adopt a Modular Microservices Architecture

One of the best approaches to integrating AI with Spring Boot is to use a modular microservices architecture. Instead of combining everything into a single monolithic application, AI functions such as retrieval and post-processing can be separated into distinct services. For example, you might build one microservice that handles vector database queries for document retrieval and another that calls large language models.

Use Standardized APIs for Model Integration

Given how quickly AI models are evolving, businesses may decide to switch between OpenAI and other providers, or move to on-premises models. You can preserve flexibility and reduce vendor lock-in by integrating models through well-defined APIs rather than wiring them directly into the application. RESTful APIs or gRPC endpoints act as abstraction layers, making it easier to replace or update AI models without redesigning the whole application.
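Inside the application, the same idea can be expressed as a small interface that every provider implements. The sketch below is one possible shape, with hypothetical names throughout (`ChatModelClient`, `ModelRegistry`, and the provider keys); the lambdas stand in for real REST or gRPC clients. In Spring Boot, the active provider key would usually come from configuration.

```java
import java.util.Map;

/** Provider-neutral contract; application code depends only on this interface. */
interface ChatModelClient {
    String complete(String prompt);
}

/** Hypothetical registry that selects one registered client by a configured provider key. */
class ModelRegistry {
    private final Map<String, ChatModelClient> clients;
    private final String activeProvider;

    ModelRegistry(Map<String, ChatModelClient> clients, String activeProvider) {
        if (!clients.containsKey(activeProvider)) {
            throw new IllegalArgumentException("Unknown provider: " + activeProvider);
        }
        this.clients = Map.copyOf(clients);
        this.activeProvider = activeProvider;
    }

    /** The client the rest of the application should call. */
    ChatModelClient active() {
        return clients.get(activeProvider);
    }
}
```

Switching providers then becomes a change to the key passed to `ModelRegistry`, not a change to the code that calls `active().complete(...)`.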

Secure Data and Model Interactions

Security should be the first priority when integrating AI into Spring Boot applications, especially if the system handles sensitive data. Spring Security provides authentication and encryption mechanisms that can protect both the data being collected and the queries being sent to AI models. Implement role-based access control so that only authorized users can use AI capabilities, and always secure sensitive interactions with retrieval systems or external APIs.

Optimize for Performance

If not carefully managed, AI inference and retrieval can add substantial latency. A smooth user experience depends on optimization techniques such as asynchronous request processing and caching of frequently requested responses. Spring's reactive stack, Spring WebFlux, can help reduce blocking calls and make the application more responsive.
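Both techniques can be combined in a few lines of plain Java. The sketch below is an assumption-laden illustration: `CachingAsyncModelClient` is a hypothetical name, the `Function` stands in for a slow model call, and the cache has no expiry or size limit, which a production cache would need.

```java
import java.util.Locale;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

/** Hypothetical wrapper that runs model calls off the caller's thread and reuses earlier answers. */
class CachingAsyncModelClient {
    private final Function<String, String> slowModel; // stand-in for a slow inference call
    private final Map<String, CompletableFuture<String>> cache = new ConcurrentHashMap<>();

    CachingAsyncModelClient(Function<String, String> slowModel) {
        this.slowModel = slowModel;
    }

    CompletableFuture<String> complete(String prompt) {
        // Normalize so trivially different prompts share one cache entry (assumed policy).
        String key = prompt.strip().toLowerCase(Locale.ROOT);
        // Concurrent callers with the same key share a single in-flight call.
        CompletableFuture<String> future = cache.computeIfAbsent(
                key, k -> CompletableFuture.supplyAsync(() -> slowModel.apply(prompt)));
        // Do not keep failed calls in the cache, so a later request can retry.
        future.whenComplete((result, error) -> {
            if (error != null) {
                cache.remove(key, future);
            }
        });
        return future;
    }
}
```

Caching the future rather than the finished string is the design choice that prevents duplicate model calls while the first one is still running.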

Ensure Observability

AI-driven applications involve many moving parts, including retrieval engines and inference models. Spring Boot integrates with monitoring tools that let you track performance metrics and error rates. Distributed tracing with tools like Zipkin can also help identify bottlenecks across microservices.

Final Words

Integrating AI with Spring Boot enables smarter, more secure applications. Spring Boot offers a versatile base for anything from enabling RAG systems to powering chatbots. By adhering to best practices in architecture and security, organizations can deliver AI solutions that are both dependable and innovative.