According to Data Quest, the machine learning market is valued at $19.2 billion and is expected to reach $225.9 billion in less than five years. This growth reflects how far machine learning has come from being a niche technology used only by data scientists: it now powers recommendation systems, voice assistants, and chatbots.

Node might not be the first thing that comes to mind when we hear machine learning. However, with the rise of JavaScript ML libraries, Node is playing an increasingly important role in bringing machine learning to web applications.

To understand how machine learning works in a Node environment, it’s essential to grasp the difference between training and inference. In this CodingCops blog, we break down the machine learning workflow in the context of Node.js.

What is Machine Learning in Node?

Machine learning is the ability of a system to learn from data and make predictions or decisions without being explicitly programmed for every scenario. It uses algorithms to find patterns in data, which makes it possible to automate complex tasks.

Node is well suited to building fast, scalable applications. In recent years, JavaScript libraries for frameworks such as TensorFlow and ONNX have made machine learning capabilities directly available in JavaScript. This lets Node developers use ML without leaving their tech stack.

With Node, developers can integrate ML models into web applications and APIs. This makes it a convenient environment for running inference and for training small models.

Training in Machine Learning

Training is the foundational step of machine learning: it’s the process in which a model learns patterns from data. First, a labeled dataset is fed into the model. For example, an image recognition model might receive thousands of labeled images of cats and dogs.

The model then iteratively adjusts its parameters to reduce prediction errors. Optimization algorithms such as gradient descent minimize a loss function, which measures how far the model’s predictions are from the labels, and in doing so improve accuracy.
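To make the training loop concrete, here is a minimal sketch in TypeScript of full-batch gradient descent fitting a one-feature linear model, y ≈ w * x + b, by minimizing mean squared error. It uses no ML library, and the names `Example`, `LinearModel`, and `train` are illustrative; real frameworks add mini-batches, better optimizers, and automatic differentiation.

```typescript
// Minimal full-batch gradient descent for a one-feature linear model.
// No ML library is used; this only illustrates the training loop.

interface Example {
  x: number; // input feature
  y: number; // label (the value the model should predict)
}

interface LinearModel {
  w: number; // weight
  b: number; // bias
}

// Mean squared error: the loss function that training minimizes.
function meanSquaredError(model: LinearModel, data: Example[]): number {
  let sum = 0;
  for (const { x, y } of data) {
    const err = model.w * x + model.b - y;
    sum += err * err;
  }
  return sum / data.length;
}

// Repeatedly move w and b a small step against the gradient of the loss.
function train(data: Example[], epochs: number, learningRate: number): LinearModel {
  const model: LinearModel = { w: 0, b: 0 };
  const n = data.length;
  for (let epoch = 0; epoch < epochs; epoch++) {
    let gradW = 0;
    let gradB = 0;
    for (const { x, y } of data) {
      const err = model.w * x + model.b - y;
      // Partial derivatives of the averaged squared error.
      gradW += (2 * err * x) / n;
      gradB += (2 * err) / n;
    }
    model.w -= learningRate * gradW;
    model.b -= learningRate * gradB;
  }
  return model;
}
```

For example, training on points generated from y = 2x + 1 (x from 0 to 4) with `train(data, 2000, 0.05)` should recover w ≈ 2 and b ≈ 1. The learning rate sets the step size: too large and the updates diverge, too small and convergence is slow.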

This phase requires significant computational power and large datasets, so training is typically done on powerful hardware with GPU acceleration. In Node, training is possible but is often limited to small models or experimentation because of performance constraints.

Libraries like TensorFlow.js allow training on both the server and the client side. This isn’t ideal for production-level deep learning models, but training lightweight models in Node can still be quite effective.

Inference in Machine Learning

Inference is the process of using a trained machine learning model to make predictions on unseen data. In other words, it’s the execution phase of a machine learning system.

Inference is where an ML model does its work in the real world. Whether it’s recommending a product or recognizing an image, inference enables applications to react intelligently and in real time to user input or new data streams.

Inference needs to be fast, especially in applications that require real-time responses. For instance, an AI chatbot must generate responses quickly to maintain a natural conversation flow.

Unlike training, which is computationally expensive, inference can often run on a smartphone or IoT hardware. This makes it well suited to edge computing and low-latency environments.

In many applications, inference runs at scale, with millions of users requesting predictions from a model every day, so it’s important to optimize the inference process to maintain performance under load.

Inference operates on fixed model parameters: nothing is learned or updated at prediction time. This means that, for a deterministic model, the same input always produces the same output, which is critical for consistent user experiences and reliable business logic.
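As an illustration, the sketch below treats inference as a pure function of the input and a set of frozen parameters. The logistic-regression weights are hypothetical placeholders standing in for values loaded from a trained model; the point is that `predict` only reads them, so repeated calls with the same input return the same result.

```typescript
// Hypothetical parameters standing in for a trained logistic-regression model.
// Object.freeze makes it explicit that inference never modifies them.
const PARAMS = Object.freeze({
  weights: Object.freeze([0.8, -0.4, 1.5]),
  bias: -0.2,
});

function sigmoid(z: number): number {
  return 1 / (1 + Math.exp(-z));
}

// Inference: a pure function of the input and the fixed parameters.
// Unlike training, nothing is updated, so the same input gives the same output.
function predict(features: readonly number[]): number {
  if (features.length !== PARAMS.weights.length) {
    throw new Error(`expected ${PARAMS.weights.length} features, got ${features.length}`);
  }
  let z = PARAMS.bias;
  for (let i = 0; i < features.length; i++) {
    z += PARAMS.weights[i] * features[i];
  }
  return sigmoid(z); // probability of the positive class
}
```

Because `predict` has no side effects, it is safe to call from many concurrent requests, and its results can be cached.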

Differences Between Training and Inference

| Feature | Training | Inference |
| --- | --- | --- |
| Purpose | Model learns from data | Model makes predictions |
| Performance | Computationally expensive | Lightweight and fast |
| Frequency | Periodic | Frequent or real time |
| Hardware needs | Usually needs a GPU | Can run on a CPU |
| Where it happens | Usually server side, often in Python | Client side or server side |
| Use in Node | Limited | Ideal for deployment |

Training and Inference in Node.js

Training in Node is generally suitable for lightweight models and educational purposes. Within the JavaScript environment, developers can build and train models using frameworks such as TensorFlow.js. This is useful for prototyping, or for deploying ML models to edge devices in constrained settings.

However, Python is the better option for complex training jobs involving deep neural networks or enormous datasets, thanks to its well-established ecosystem and highly optimized libraries.

Inference, in contrast, is where Node truly excels. Once a model has been trained, it can be loaded into a Node application to perform real-time predictions. Node’s non-blocking, asynchronous I/O lets it handle many concurrent requests efficiently, which makes it well suited to serving inference at scale, whether through REST APIs or microservices.

This lets developers deliver smart, responsive applications by integrating machine learning models directly into the backend without leaving the Node environment.

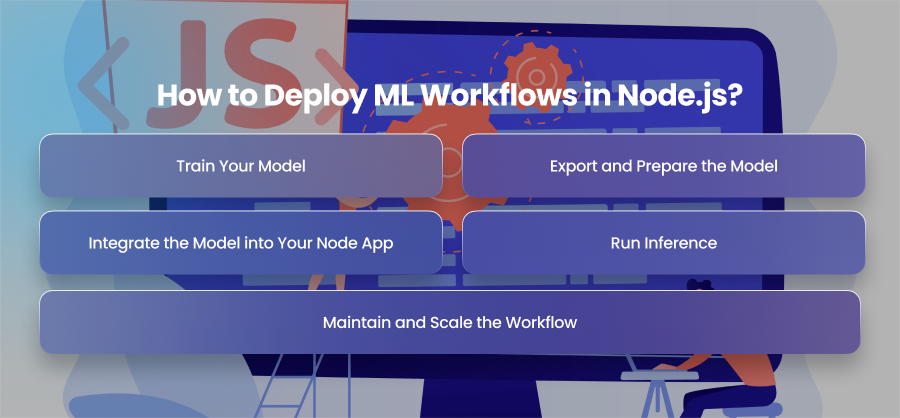

How to Deploy ML Workflows in Node.js?

Train Your Model

The first step in deploying a machine learning workflow is to train your model. Training can be done within the Node environment using libraries like TensorFlow.js, but production-level models are usually trained with Python libraries such as PyTorch, which offer optimized performance and reliable support for large datasets and deep learning architectures.

Once training is complete, export the model in a format that Node can load. Common choices are the TensorFlow.js format (a model.json file plus binary weight files) or the .onnx format for ONNX.
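The real TensorFlow.js and ONNX formats are produced by their own converters. Purely to illustrate the idea of a Node-loadable export, here is a sketch of a hypothetical JSON format for a small linear model’s parameters, with validation on load. `toy-linear-v1`, `exportModel`, and `loadModel` are invented for this example and are not part of any library.

```typescript
// Hypothetical, minimal export format for illustration only.
// It is NOT the TensorFlow.js model.json schema and NOT ONNX.
interface ExportedModel {
  format: "toy-linear-v1";
  weights: number[];
  bias: number;
}

// Serialize trained parameters to a JSON string (e.g. to write to disk).
function exportModel(weights: number[], bias: number): string {
  const payload: ExportedModel = { format: "toy-linear-v1", weights, bias };
  return JSON.stringify(payload);
}

// Parse and validate an exported model before the app starts serving it.
function loadModel(json: string): ExportedModel {
  const parsed: unknown = JSON.parse(json);
  if (typeof parsed !== "object" || parsed === null) {
    throw new Error("model file must contain a JSON object");
  }
  const { format, weights, bias } = parsed as Record<string, unknown>;
  if (format !== "toy-linear-v1") {
    throw new Error(`unsupported model format: ${String(format)}`);
  }
  if (!Array.isArray(weights) || !weights.every((w) => typeof w === "number")) {
    throw new Error("weights must be an array of numbers");
  }
  if (typeof bias !== "number") {
    throw new Error("bias must be a number");
  }
  return { format: "toy-linear-v1", weights: weights as number[], bias };
}
```

Validating the file when it is loaded means a corrupted or mismatched export fails at startup rather than producing silently wrong predictions.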

Export and Prepare the Model

After the training phase, you have to export the model into a deployable format. For TensorFlow, the tensorflowjs_converter tool (from the tensorflowjs Python package) converts a Keras model into the model.json file and binary weight files that TensorFlow.js loads.

If you are using PyTorch or TensorFlow and want to run the model in Node with ONNX, you will need to convert it to the .onnx format first. To further improve your model’s performance, you can apply techniques such as quantization or pruning.
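Pruning removes weights that contribute little to the output. The sketch below shows the simplest variant, unstructured magnitude pruning, on a flat weight array: the given fraction of weights with the smallest absolute values is set to zero. The function name is hypothetical, and production toolkits typically prune more structurally and fine-tune the model afterward to recover accuracy.

```typescript
// Unstructured magnitude pruning on a flat array of weights (illustrative).
// Sets the `sparsity` fraction of weights with the smallest |value| to zero.
function magnitudePrune(weights: number[], sparsity: number): number[] {
  if (sparsity < 0 || sparsity > 1) {
    throw new RangeError("sparsity must be between 0 and 1");
  }
  const numToPrune = Math.floor(weights.length * sparsity);
  // Indices ordered from smallest to largest absolute value.
  const order = weights
    .map((w, i) => ({ i, magnitude: Math.abs(w) }))
    .sort((a, b) => a.magnitude - b.magnitude);
  const pruned = weights.slice(); // leave the caller's array untouched
  for (let k = 0; k < numToPrune; k++) {
    pruned[order[k].i] = 0;
  }
  return pruned;
}
```

Zeroed weights only save space or time if the model is then stored in a sparse format or run by a runtime that exploits sparsity.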

Integrate the Model into Your Node App

The next step is to integrate the exported model into your Node app. This means loading the model with a compatible library and exposing it through your server-side logic, for example via a REST or GraphQL API. Clients send input data to your API, and the server processes that data with the ML model before returning predictions.
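The sketch below shows the server-side logic such an endpoint might contain, written as a framework-agnostic TypeScript function so it doesn’t depend on a particular HTTP library. The `POST /predict` route, the `{ "features": [...] }` request shape, and the `Model` type are assumptions for illustration; in a real app, `model` would wrap a call into whichever ML library loaded your model.

```typescript
// Hypothetical model interface: any function from a feature vector to a score.
type Model = (features: number[]) => number;

interface HttpResult {
  status: number;
  body: string; // JSON-encoded response body
}

function jsonResult(status: number, payload: unknown): HttpResult {
  return { status, body: JSON.stringify(payload) };
}

// Framework-agnostic handler for a hypothetical POST /predict endpoint.
// An HTTP layer (node:http, Express, Fastify, ...) would read the raw request
// body, call this function, and write the status and body back to the client.
function handlePredict(rawBody: string, model: Model, expectedLength: number): HttpResult {
  let parsed: unknown;
  try {
    parsed = JSON.parse(rawBody);
  } catch {
    return jsonResult(400, { error: "request body must be valid JSON" });
  }

  // Validate the input shape before it ever reaches the model.
  const features =
    typeof parsed === "object" && parsed !== null
      ? (parsed as { features?: unknown }).features
      : undefined;
  if (
    !Array.isArray(features) ||
    features.length !== expectedLength ||
    !features.every((v) => typeof v === "number" && Number.isFinite(v))
  ) {
    return jsonResult(400, { error: `expected "features": an array of ${expectedLength} finite numbers` });
  }

  try {
    const prediction = model(features as number[]);
    return jsonResult(200, { prediction });
  } catch {
    // Don't leak internal error details to clients.
    return jsonResult(500, { error: "inference failed" });
  }
}
```

Keeping the handler independent of the HTTP framework makes it easy to unit-test and to reuse in a serverless function or background worker.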

Depending on your architecture, you can also run inference in background workers or as part of serverless functions.

Run Inference

With your model integrated, your application is ready to perform inference. When a request hits your API, the server passes the input through the model and responds with the output. Because Node is asynchronous and event driven, it can handle many inference requests concurrently, although CPU-heavy prediction work can block the event loop, so large models may need to run in worker threads or a separate service. This makes Node a good fit for real-time applications like chatbots and fraud detection tools.

Be sure to monitor prediction times and optimize where necessary to keep performance responsive.

Maintain and Scale the Workflow

Once the workflow is live, continuously monitor model accuracy and system health. Implement logging and analytics to capture input/output pairs and user behavior for future retraining. To scale the workflow, you can deploy multiple instances of the Node app behind a load balancer, or use cloud solutions such as AWS Lambda or Docker containers orchestrated with Kubernetes.

Tools and Libraries

TensorFlow.js

TensorFlow.js is the flagship library for machine learning in JavaScript. It supports both training and inference and runs in both the browser and Node. Developers can train models from scratch or import existing ones trained in Python. Its versatility makes it a good fit for beginners and experienced developers alike who want to integrate ML into JavaScript applications.

Brain.js

Brain.js is a small library built for neural networks and pattern recognition problems. It can be very helpful for applications that need fast, effective training and inference on smaller datasets. Brain.js also abstracts away much of the underlying math, making it easier for Node developers who are new to machine learning concepts.

ONNX.js

ONNX is an open model format: models trained in frameworks such as PyTorch can be exported to it and then executed elsewhere. This is an effective solution for developers who prefer to train their models in Python but want to deploy them in a Node.js environment. ONNX provides this interoperability without sacrificing much performance. Note that ONNX.js itself has been superseded by ONNX Runtime’s JavaScript packages (onnxruntime-node for Node and onnxruntime-web for the browser).

ml5

Built on top of TensorFlow.js, ml5 is designed to be user friendly. It offers a high-level, approachable API that wraps complex ML operations in simple functions, which makes it an excellent choice for developers who want to experiment with machine learning without dealing with infrastructure.

Synaptic

Synaptic is another JavaScript neural network library, described by its authors as architecture-free: it gives you control over network architecture and training processes. It is useful for academic purposes or custom ML setups. Although it is not as actively maintained as some other options, Synaptic remains a helpful tool for learning and experimentation.

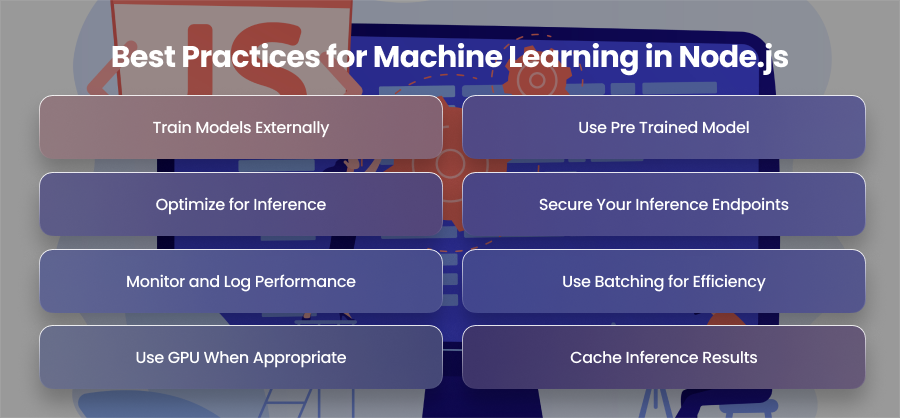

Best Practices for Machine Learning in Node.js

Train Models Externally

Node isn’t optimized for heavy computational workloads like training large models. It’s best to train your models in environments built for efficient numerical computation, typically with Python libraries. After the model is trained, export it to a Node-compatible format such as the TensorFlow.js model.json format or .onnx.

Use Pre-Trained Models

You can use pre-trained models from reliable sources like Hugging Face and TensorFlow Hub to speed up and simplify development. These models are often quite efficient and need little work to adapt to your particular use case.

Optimize for Inference

Inference performance is important, especially in real-time applications. Optimization techniques such as quantization and model distillation can reduce model size and latency without sacrificing too much accuracy.
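Quantization stores weights at lower precision. The sketch below shows post-training affine int8 quantization of a single weight array: each float weight w becomes an 8-bit integer q = round(w / scale) + zeroPoint, and dequantization recovers approximately (q - zeroPoint) * scale. This is an illustrative, per-tensor version with hypothetical function names; real toolchains use their own, usually calibrated, quantization schemes.

```typescript
// Post-training affine int8 quantization of one weight tensor (illustrative).
interface QuantizedTensor {
  values: Int8Array; // 1 byte per weight instead of 4 for float32
  scale: number; // float distance between adjacent integer levels
  zeroPoint: number; // integer that represents 0.0
}

function quantizeInt8(weights: number[]): QuantizedTensor {
  // The range must include 0 so that zero maps exactly to an integer.
  let min = 0;
  let max = 0;
  for (const w of weights) {
    if (w < min) min = w;
    if (w > max) max = w;
  }
  const scale = max === min ? 1 : (max - min) / 255; // 256 int8 levels span the range
  const zeroPoint = Math.round(-128 - min / scale);
  const values = new Int8Array(weights.length);
  for (let i = 0; i < weights.length; i++) {
    const q = Math.round(weights[i] / scale) + zeroPoint;
    values[i] = Math.max(-128, Math.min(127, q)); // clamp to the int8 range
  }
  return { values, scale, zeroPoint };
}

// Approximate reconstruction of the original float weights.
function dequantize(t: QuantizedTensor): number[] {
  return Array.from(t.values, (q) => (q - t.zeroPoint) * t.scale);
}
```

Storing each weight in one byte instead of four (float32) cuts weight storage by roughly 4x, at the cost of a rounding error on the order of one quantization step per weight.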

Secure Your Inference Endpoints

Security is critical when deploying ML in production. Always validate and sanitize incoming data to guard against injection attacks and malformed inputs. To prevent abuse of your ML APIs, apply rate limiting, and serve them over HTTPS to protect data in transit.
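Rate limiting is often handled by a reverse proxy or an existing middleware package; to show the mechanism itself, here is a library-free token-bucket sketch. Each client ID gets a bucket of `capacity` tokens that refills at `refillPerSecond`, and a request is allowed only if a whole token is available. The class name and parameters are hypothetical, and because state lives in memory, it does not coordinate limits across multiple Node instances.

```typescript
// Per-client token-bucket rate limiter (illustrative; state is in memory,
// so each Node instance limits independently and the map is never pruned).
interface Bucket {
  tokens: number;
  lastRefillMs: number;
}

class TokenBucketLimiter {
  private readonly buckets = new Map<string, Bucket>();
  private readonly capacity: number;
  private readonly refillPerSecond: number;
  private readonly now: () => number;

  // `now` returns milliseconds; it is injectable so the limiter can be tested.
  constructor(capacity: number, refillPerSecond: number, now: () => number = () => Date.now()) {
    this.capacity = capacity;
    this.refillPerSecond = refillPerSecond;
    this.now = now;
  }

  // Returns true if the request may proceed, false if it should be rejected.
  allow(clientId: string): boolean {
    const t = this.now();
    const bucket = this.buckets.get(clientId) ?? { tokens: this.capacity, lastRefillMs: t };
    // Refill in proportion to elapsed time, never above capacity.
    const elapsedSec = (t - bucket.lastRefillMs) / 1000;
    bucket.tokens = Math.min(this.capacity, bucket.tokens + elapsedSec * this.refillPerSecond);
    bucket.lastRefillMs = t;
    this.buckets.set(clientId, bucket);
    if (bucket.tokens < 1) return false;
    bucket.tokens -= 1;
    return true;
  }
}
```

Your HTTP layer would call `allow()` with something like an API key or client IP before running inference, and respond with status 429 (Too Many Requests) when it returns false.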

Monitor and Log Performance

Another best practice is to track your model’s inference performance in production. Log inference times, input/output data patterns, success rates, and errors. Monitoring tools can then alert you when latency spikes or accuracy drops, so you can respond to issues before they affect your users.
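A monitoring tool or APM service usually does this for you. As a library-free sketch, the hypothetical `LatencyMonitor` class below keeps a sliding window of recent inference latencies, reports nearest-rank percentiles and a cumulative error rate, and calls an `onAlert` callback whenever the 95th-percentile latency exceeds a threshold. The window size, threshold, and callback are assumptions to tune for your service.

```typescript
// Sliding-window latency tracker for inference calls (illustrative).
class LatencyMonitor {
  private readonly samples: number[] = [];
  private total = 0;
  private failures = 0;
  private readonly maxSamples: number;
  private readonly p95ThresholdMs: number;
  private readonly onAlert: (p95Ms: number) => void;

  constructor(maxSamples: number, p95ThresholdMs: number, onAlert: (p95Ms: number) => void) {
    this.maxSamples = maxSamples;
    this.p95ThresholdMs = p95ThresholdMs;
    this.onAlert = onAlert;
  }

  // Record one inference call; alerts whenever the window's p95 is too high.
  record(latencyMs: number, ok: boolean): void {
    this.total++;
    if (!ok) this.failures++;
    this.samples.push(latencyMs);
    if (this.samples.length > this.maxSamples) this.samples.shift(); // drop the oldest sample
    const p95 = this.percentile(95);
    if (p95 > this.p95ThresholdMs) this.onAlert(p95);
  }

  // Nearest-rank percentile over the current window (0 if empty).
  percentile(p: number): number {
    if (this.samples.length === 0) return 0;
    const sorted = this.samples.slice().sort((a, b) => a - b);
    const rank = Math.ceil((p / 100) * sorted.length);
    return sorted[Math.max(0, rank - 1)];
  }

  // Fraction of all recorded calls that failed (not limited to the window).
  errorRate(): number {
    return this.total === 0 ? 0 : this.failures / this.total;
  }

  // Time a synchronous prediction and record its latency and outcome.
  measure<T>(predict: () => T): T {
    const start = Date.now();
    try {
      const result = predict();
      this.record(Date.now() - start, true);
      return result;
    } catch (err) {
      this.record(Date.now() - start, false);
      throw err;
    }
  }
}
```

In practice you would forward these numbers to your monitoring system and debounce the alert so it doesn’t fire on every request while latency stays high.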

Use Batching for Efficiency

When dealing with high volumes of prediction requests, consider batching them together. Batching reduces overhead by processing multiple inputs in one pass, which is usually more efficient than handling predictions one at a time. Many ML libraries accept batched inputs out of the box.
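As a sketch of how batching can work in a Node service, the hypothetical `MicroBatcher` below queues individual `predict()` calls made during the same turn of the event loop and sends them to the model as one array. It assumes the model function accepts a batch of inputs and returns outputs in the same order; a production version would usually also wait a few milliseconds to collect more requests.

```typescript
// Micro-batching sketch: predict() calls made in the same event-loop turn are
// queued and sent to the model together, up to maxBatchSize per call.
type BatchModel<I, O> = (inputs: I[]) => O[];

interface Pending<I, O> {
  input: I;
  resolve: (output: O) => void;
  reject: (reason: unknown) => void;
}

class MicroBatcher<I, O> {
  private queue: Pending<I, O>[] = [];
  private flushScheduled = false;
  private readonly model: BatchModel<I, O>;
  private readonly maxBatchSize: number;

  constructor(model: BatchModel<I, O>, maxBatchSize: number) {
    this.model = model;
    this.maxBatchSize = maxBatchSize;
  }

  predict(input: I): Promise<O> {
    return new Promise<O>((resolve, reject) => {
      this.queue.push({ input, resolve, reject });
      if (this.queue.length >= this.maxBatchSize) {
        this.flush(); // batch is full: run it now
      } else if (!this.flushScheduled) {
        this.flushScheduled = true;
        // Run once the current synchronous code has finished queuing requests.
        Promise.resolve().then(() => this.flush());
      }
    });
  }

  private flush(): void {
    this.flushScheduled = false;
    const batch = this.queue;
    this.queue = [];
    if (batch.length === 0) return;
    try {
      const outputs = this.model(batch.map((p) => p.input)); // one model call per batch
      if (outputs.length !== batch.length) {
        throw new Error(`model returned ${outputs.length} outputs for ${batch.length} inputs`);
      }
      batch.forEach((p, i) => p.resolve(outputs[i]));
    } catch (err) {
      batch.forEach((p) => p.reject(err));
    }
  }
}
```

The trade-off is latency versus throughput: larger batches or longer waits improve throughput but add delay to each individual request.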

Use a GPU When Appropriate

Even though CPUs can handle most inference jobs, high-throughput workloads can benefit from GPU acceleration. In Node, TensorFlow.js can use CUDA-capable NVIDIA GPUs through the @tensorflow/tfjs-node-gpu package, which can greatly improve speed. Applications such as image or video processing benefit most from this.

Cache Inference Results

If your application frequently receives the same input data, you can cache the predictions to avoid unnecessary computation and improve response times. This is especially useful for non-personalized or static inputs in high-traffic scenarios.
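Because inference on fixed parameters is deterministic, a prediction for a given input can be reused. The sketch below wraps any model call in a small least-recently-used (LRU) cache built on a JavaScript `Map`. `PredictionCache` and `getOrCompute` are hypothetical names, and the cache key assumes inputs are JSON-serializable with a consistent key order.

```typescript
// LRU cache in front of a deterministic model (illustrative).
// A Map iterates in insertion order, so its first key is the least recently used.
class PredictionCache<O> {
  private readonly entries = new Map<string, O>();
  private readonly maxEntries: number;
  hits = 0;
  misses = 0;

  constructor(maxEntries: number) {
    this.maxEntries = maxEntries;
  }

  // Assumes inputs are JSON-serializable with a consistent key order,
  // so equal inputs produce equal cache keys.
  getOrCompute(input: unknown, compute: () => O): O {
    const key = JSON.stringify(input);
    if (this.entries.has(key)) {
      const cached = this.entries.get(key) as O;
      this.entries.delete(key); // re-insert to mark as most recently used
      this.entries.set(key, cached);
      this.hits++;
      return cached;
    }
    this.misses++;
    const value = compute();
    this.entries.set(key, value);
    if (this.entries.size > this.maxEntries) {
      const oldest = this.entries.keys().next().value;
      if (oldest !== undefined) this.entries.delete(oldest); // evict least recently used
    }
    return value;
  }
}
```

Don’t cache personalized or time-sensitive predictions, and clear the cache whenever you deploy a new model version, since old entries were produced by the previous parameters.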

Final Words

Machine learning is no longer confined to Python and R environments. Node developers can now build smart applications that learn, adapt, and respond intelligently to user input. While Node isn’t suited to training large models, it’s an excellent platform for deploying inference workflows.